Introduction to Bayesian Filter

Introduction

Bayesian filter is one of the fundamental approaches to estimate the distribution in a process where there are incoming measurements. It used to be widely used in localization problems in robotics. I had some experience previously in particle filter which is one of the extensions of Bayesian filter. However, when it comes to the math form of the model, I would sometimes forget. Here I am writing this article post to document the basic concept of Bayesian filter and the math related to it.

Data

We have a stream of observation data from time step $1$ to time step $t$:

$$

\{z_1, z_2, \cdots , z_t\}

$$

If possible, we also have a stream of action data from time step $1$ to time step $t$:

$$

\{u_1, u_2, \cdots , u_t\}

$$

Goal

Our goal is given {$z_1, z_2, \cdots , z_t$} and possibly {$u_1, u_2, \cdots , u_t$}, we would like to estimate the distribution of robot state at time step $t$, i.e., calculate the probability or probability density of any possible hidden state $x_t$. The state is hidden to the robot, and it could be discrete or continuous. For example, the state could be {$c_t$} where $c_t$ is the coordinates of robot in the map, or {$c_t, p_t$} where $p_t$ is the pose (velocity and orientation) of robot in the map.

Statistically, we are predicting a posterior distribution called “Belief”:

$$

\begin{align}

Bel(x_t) &= P(x_t|u_1, z_1, u_2, z_2, \cdots, u_t, z_t) \\

&= P(x_t|z_{1:t}, u_{1:t})

\end{align}

$$

It should be noted that when we predict the state of robot in Bayesian filter, we care more about the distribution of states $Bel$ rather than the probability or probability density of certain single state $Bel(x_t)$.

Models

We have a parameterized sensor model $P(z|x)$ which given the state $x_t$ predicts the distribution of observations at time step $t$.

We also have a parameterized action model $P(x|u,x’)$ which given the state $x_{t-1}$ and action $u_t$ predicts the distribution of state at time step $t$.

The Bayesian filter usually assumes the process is a first-order Markov chain model.

$$

P(z_t|x_{0:t}, z_{1:t}, u_{1:t}) = P(z_t|x_{t})

$$

$$

P(x_t|x_{1:t-1}, z_{1:t-1}, u_{1:t}) = P(x_t|x_{t-1},u_t)

$$

We will use these two assumptions in our math derivations.

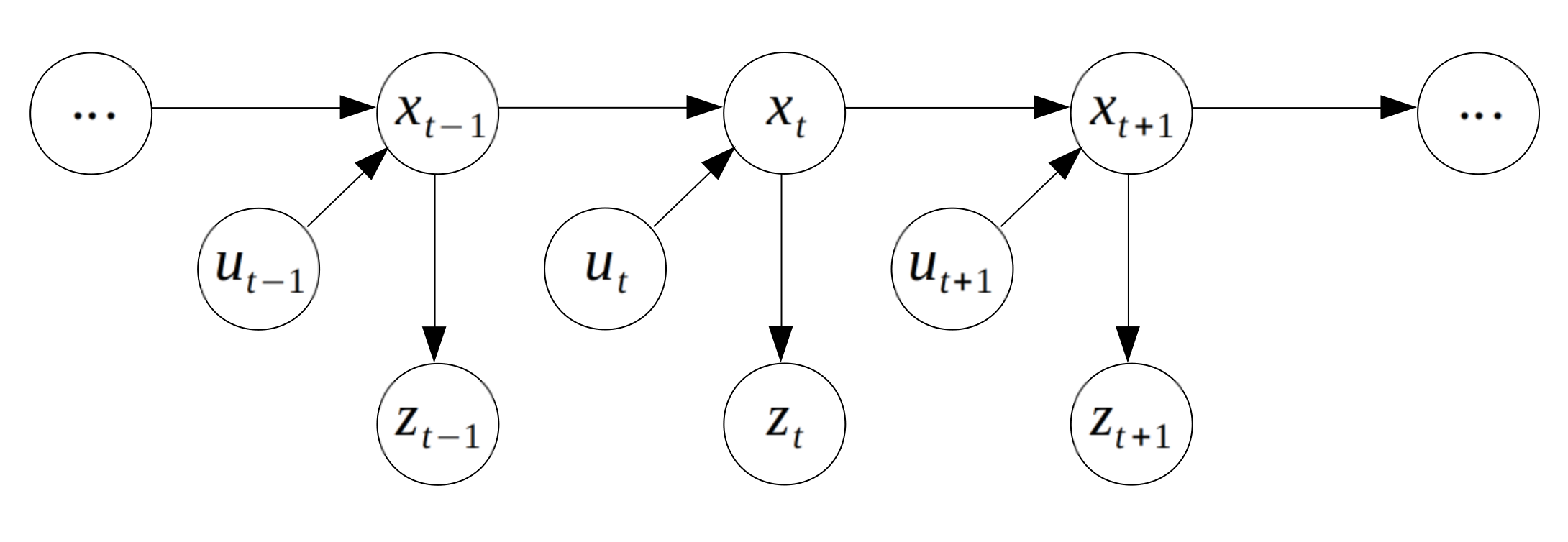

Graph

The whole process with assumptions could be described using a graph known as the Hidden Markov Model (HMM).

Math

Predicted Belief

Predicted belief $\overline{Bel}$ is defined as the probability distribution of state at time step $t$, given the observation and possibly action from time step $1$ to $t-1$. More formally,

$$

\overline{Bel}(x_t) = P(x_t|z_{1:t-1}, u_{1:t})

$$

Using probability chain rule and property of total probability,

$$

\begin{aligned}

\overline{Bel}(x_t) &= P(x_t|z_{1:t-1}, u_{1:t}) \\

&= \int P(x_t|x_{t-1}, z_{1:t-1}, u_{1:t}) P(x_{t-1}|z_{1:t-1}, u_{1:t}) d(x_{t-1}) \\

&= \int P(x_t|x_{t-1}, z_{1:t-1}, u_{1:t}) {Bel}(x_{t-1}) d(x_{t-1})\\

\end{aligned}

$$

Note that $P(x_{t-1}|z_{1:t-1}, u_{1:t}) = {Bel}(x_{t-1})$.

We apply our Markov assumptions,

$$

P(x_t|x_{t-1}, z_{1:t-1}, u_{1:t}) = P(x_t|x_{t-1},u_t)

$$

We then get

$$

\int P(x_t|x_{t-1}, z_{1:t-1}, u_{1:t}) {Bel}(x_{t-1}) d(x_{t-1}) = \int P(x_t|x_{t-1},u_t) {Bel}(x_{t-1}) d(x_{t-1})

$$

Therefore,

$$

\overline{Bel}(x_t) = \int P(x_t|x_{t-1},u_t) {Bel}(x_{t-1}) d(x_{t-1})

$$

Note that $P(x_t|x_{t-1},u_t)$ is calculated our action model at time step as described above.

Belief

$$

\begin{align}

Bel(x_t) &= P(x_t|z_{1:t}, u_{1:t}) \\

&= P(x_t|z_{1:t-1}, z_t, u_{1:t}) \\

\end{align}

$$

We apply Bayes’ theorem

$$

\begin{align}

Bel(x_t) &= P(x_t|z_{1:t-1}, z_t, u_{1:t}) \\

&= \frac{P(z_t|z_{1:t-1}, x_t, u_{1:t}) P(x_t|z_{1:t-1},u_{1:t})}{P(z_t|z_{1:t-1},u_{1:t})} \\

\end{align}

$$

We further apply our Markov assumptions,

$$

P(z_t|z_{1:t-1}, x_t, u_{1:t}) = P(z_t|x_t)

$$

So far we have,

$$

P(x_t|z_{1:t-1}, z_t, u_{1:t}) = \frac{P(z_t|x_t) P(x_t|z_{1:t-1},u_{1:t})}{P(z_t|z_{1:t-1},u_{1:t})}

$$

The denominator $P(z_t|z_{1:t-1},u_{1:t})$ is a normalization term, where

$$

\begin{aligned}

P(z_t|z_{1:t-1},u_{1:t}) &= \int P(z_t|z_{1:t-1}, x_t, u_{1:t}) P(x_t|z_{1:t-1},u_{1:t}) d(x_t) \\

&= \int P(z_t|x_t) P(x_t|z_{1:t-1},u_{1:t}) d(x_t) \\

\end{aligned}

$$

We usually use a constant value to replace the denominator. So we have

$$

Bel(x_t) = \eta P(z_t|x_t) P(x_t|z_{1:t-1},u_{1:t})

$$

We further note that $\overline{Bel}(x_t) = P(x_t|z_{1:t-1},u_{1:t})$, therefore

$$

Bel(x_t) = \eta P(z_t|x_t) \overline{Bel}(x_t)

$$

Note that $P(z_t|x_t)$ is calculated from the sensor model at the time step as we described above.

Algorithm

We are able to calculate all the three components at any time step essentially in Bayesian filter using the mathematical relationships between them we have just figured out.

- Predicted Belief $\overline{Bel}(x_t)$

- Belief $Bel(x_t)$

- Action model $P(x_t|x_{t-1},u_t)$

- Sensor model $P(z_t|x_t)$

Here model means some probability distribution. The parameters of the action model and sensor model are usually fixed and not going to change in Bayesian filter. Making or calibrating the action model and sensor model are often relatively easier and were done before Bayesian filter.

The filter process is basically, at each time step, we calculate the $Bel(x_t)$ and $\overline{Bel}(x_t)$ for each possible states using the math described above, and we update the $Bel(x_t)$ to $\eta P(z_t|x_t) \overline{Bel}(x_t)$ for each possible states. By doing this iteratively, the three distributions might tend to converge.

More formally, the Bayesian filter algorithm could be described as follows:

\begin{algorithm}

\caption{Bayesian Filter Algorithm}

\begin{algorithmic}

\Procedure{Bayesian}{${Bel}, d$}

\State $\eta$ = 0

\If {$d$ is an observation $z_t$} \Comment{Calculate and return Belief given predicted Belief}

\For {all possible state $x_t$}

\State $Bel'(x_t)= P(z_t|x_t){Bel}(x_t)$ \Comment{Calculate Belief}

\State $\eta = \eta + Bel'(x_t)$ \Comment{Calculate margin}

\EndFor

\For {all possible state $x_t$}

\State $Bel'(x_t)= Bel'(x_t) / \eta$ \Comment{Normalize Belief}

\EndFor

\EndIf

\If {$d$ is an action $u_t$} \Comment{Calculate and return predicted Belief given Belief}

\For {all possible state $x_t$}

\State $Bel'(x_t)= \int P(x_t|x_{t-1},u_t) {Bel}(x_{t}) d(x_{t-1})$ \Comment{Normalize Belief}

\EndFor

\EndIf

\Return ${Bel}$\Comment{Return Belief or predicted Belief}

\EndProcedure

\end{algorithmic}

\end{algorithm}

Final Remarks

The Bayesian filter algorithm above described the general process. To do it concretely, there are generally two approaches: Kalman filter and Particle filter. We may talk about these two filters in the future.

References

Introduction to Bayesian Filter

https://leimao.github.io/article/Introduction-to-Bayesian-Filter/