Introduction to Variational Inference

Introduction

Variational inference is a method of approximating a conditional density of latent variables given observed variables. It has also laid the foundation for Bayesian deep learning. There are many kinds of literature and documentation on this topic online. However, I found they missed a lot of details, especially for the derivations. In this blog post, I have documented a full tutorial for variational inference with all the derivation details and a concrete example.

Bayesian Inference

If we have a set of observed random variables $\boldsymbol{x}$ = {$x_1, x_2, \cdots, x_n$} and $\boldsymbol{z}$ = {$z_1, z_2, \cdots, z_m$} latent random variables, we are interested to compute the posterior $p(\boldsymbol{z} | \boldsymbol{x})$.

Using Bayes’ theorem, we have

$$

\begin{align}

p(\boldsymbol{z} | \boldsymbol{x}) &= \frac{p(\boldsymbol{x}, \boldsymbol{z}) }{p(\boldsymbol{x})} \\

&= \frac{p(\boldsymbol{x} | \boldsymbol{z}) p(\boldsymbol{z})}{p(\boldsymbol{x})}

\end{align}

$$

$p(\boldsymbol{x})$ is the marginal density which is also called evidence.

$$

p(\boldsymbol{x}) = \int \limits_{\boldsymbol{z}} p(\boldsymbol{x}, \boldsymbol{z}) d\boldsymbol{z}

$$

However, in most of the models, computing $p(x)$ could be intractable. Here I have given a concrete example below showing that calculating the evidence from a simple model is intractable.

Bayesian Inference Could Be Intractable

Consider a mixture of $K$ unit-variance ($\sigma^2 = 1$) univariate Gaussians with means $\boldsymbol{\mu}$ = { $\mu_1, \mu_2, \cdots, \mu_K $}. The means were independently drawn from a common prior $p(\mu_k)$ which we assume to be a Gaussian $\mathcal{N}(0, \sigma^2)$ (yes, another Gaussian). Here $\mu_k$ is a random variable, prior variance $\sigma^2$ is a hyperparameter, and $\boldsymbol{\mu}$ is a column vector.

To generate $n$ observations {$x_1, x_2, \cdots, x_n$}, for observation $x_i$, we first choose a cluster assignment $c_i$ which indicates which Gaussian distribution is $x_i$ drawn from. Here $c_i$ is a random variable drawn from categorical distribution (yes, one of the simplest expressive distributions) over {$1,2,\cdots,K$}. Without generally, we also assume that it is a even categorical distribution, i.e., the prior $p(c_i) = 1/K$ for all $K$ clusters for all samples. $c_i$ is encoded as a vector of length $K$, where all the elements are zero except the one corresponding to the sampled cluster assignment is one. For example, if $K=5$ and Gaussian mixture #3 was sampled for observation $x_2$, we have $c_2$ = {$0,0,1,0,0$}. The mean of the sampled Gaussian could be expressed as the dot product of $c_i$ and $\mu$, i.e., $x_i | c_i, \boldsymbol{\mu} \sim \mathcal{N}(c_i^{\top} \boldsymbol{\mu}, 1)$

Mathematically, we have the assumed model which contains the prior information

$$

\begin{align}

&\mu_k \sim \mathcal{N}(0, \sigma^2) & k&=1,2,\cdots,K \\

&c_i \sim \text{Categorical}(1/K, \cdots, 1/K) & i&=1,2,\cdots,n \\

&x_i | c_i, \boldsymbol{\mu} \sim \mathcal{N}(c_i^{\top} \boldsymbol{\mu}, 1) & i&=1,2,\cdots,n \\

\end{align}

$$

To calculate the joint probability density of latent variable ($\boldsymbol{\mu}$ = { $\mu_1, \mu_2, \cdots, \mu_K $}, $\boldsymbol{c}$ = { $c_1, c_2, \cdots, c_n $}, note $\boldsymbol{c}$ has $K^n$ possible values) and observation ($\boldsymbol{x}$ = { $x_1, x_2, \cdots, x_n $}), using chain rules, we have

$$

p(\boldsymbol{\mu}, \boldsymbol{c}, \boldsymbol{x}) = p(\boldsymbol{\mu}) \prod_{i=1}^{n} p(c_i)p(x_i|c_i,\boldsymbol{\mu})

$$

Note that $\boldsymbol{\mu}, \boldsymbol{c}, \boldsymbol{x}$ are all random variables, and latent variables are $\boldsymbol{z}$ = {$\boldsymbol{\mu}, \boldsymbol{c}$}. I should emphasize here again that this is the model (prior) we assumed for the observations. It is not and should not be necessary that the observations were generated from the model we assumed.

Our goal is to calculate the posterior $p(\boldsymbol{z} | \boldsymbol{x})$. Using Bayes’ theorem, we have

$$

\begin{aligned}

p(\boldsymbol{z} | \boldsymbol{x}) &= p(\boldsymbol{\mu}, \boldsymbol{c} | \boldsymbol{x}) \\

&= \frac{p(\boldsymbol{x}| \boldsymbol{\mu}, \boldsymbol{c})p(\boldsymbol{\mu}, \boldsymbol{c})}{p(\boldsymbol{x})} \\

&= \frac{p(\boldsymbol{\mu}, \boldsymbol{c}, \boldsymbol{x})}{p(\boldsymbol{x})} \\

\end{aligned}

$$

where

$$

\begin{aligned}

p(\boldsymbol{x}) &= \int\limits_{\boldsymbol{\mu}} \int\limits_{\boldsymbol{c}} p(\boldsymbol{\mu}, \boldsymbol{c}, \boldsymbol{x}) d\boldsymbol{c}d\boldsymbol{\mu} \\

&= \int\limits_{\boldsymbol{\mu}} \int\limits_{\boldsymbol{c}} p(\boldsymbol{\mu}) \prod_{i=1}^{n} p(c_i)p(x_i|c_i,\boldsymbol{\mu}) d\boldsymbol{c}d\boldsymbol{\mu}\\

&= \int\limits_{\boldsymbol{\mu}} p(\boldsymbol{\mu}) \Big[ \int\limits_{\boldsymbol{c}} \prod_{i=1}^{n} p(c_i)p(x_i|c_i,\boldsymbol{\mu}) d\boldsymbol{c} \Big] d\boldsymbol{\mu}\\

&= \int\limits_{\boldsymbol{\mu}} p(\boldsymbol{\mu}) \Big[ \prod_{i=1}^{n} \sum_{c_i} p(c_i)p(x_i|c_i,\boldsymbol{\mu}) \Big] d\boldsymbol{\mu}\\

\end{aligned}

$$

Since the possible values of random variable $c_i$ is {$0,1,\cdots,K$}, if we expand $\prod_{i=1}^{n} \sum_{c_i} p(c_i)p(x_i |c_i,\boldsymbol{\mu})$, there will be $K^n$ terms, so we will need to $K^n$ independent integrals in order to compute $p(x)$. Therefore, the complexity of computing $p(x)$ is $O(K^n)$ which is intractable.

It should also be noted that to compute $p(x)$ we could also reverse the order of integrals.

$$

\begin{aligned}

p(\boldsymbol{x}) &= \int\limits_{\boldsymbol{c}} \int\limits_{\boldsymbol{\mu}} p(\boldsymbol{\mu}, \boldsymbol{c}, \boldsymbol{x}) d\boldsymbol{\mu}d\boldsymbol{c} \\

&= \int\limits_{\boldsymbol{c}} \int\limits_{\boldsymbol{\mu}} p(\boldsymbol{\mu}) \prod_{i=1}^{n} p(c_i)p(x_i|c_i,\boldsymbol{\mu}) d\boldsymbol{\mu}d\boldsymbol{c}\\

&= \int\limits_{\boldsymbol{c}} \Big[ \int\limits_{\boldsymbol{\mu}} p(\boldsymbol{\mu}) \prod_{i=1}^{n} p(c_i)p(x_i|c_i,\boldsymbol{\mu}) d(\boldsymbol{\mu}) \Big] d\boldsymbol{c}\\

&= \sum_{\boldsymbol{c}} p(\boldsymbol{c}) \Big[ \int\limits_{\boldsymbol{\mu}} p(\boldsymbol{\mu}) \prod_{i=1}^{n} p(c_i)p(x_i|c_i,\boldsymbol{\mu}) d\boldsymbol{\mu} \Big] \\

\end{aligned}

$$

However, the complexity of computing $p(x)$ is still $O(K^n)$ because there are $K^n$ different configurations of $\boldsymbol{c}$ and we still have to do $K^n$ summations.

Please think twice about the above derivations because sometimes it is brain-twisting.

The conclusion is computing the posterior $p(\boldsymbol{z} | \boldsymbol{x})$ using Bayesian inference is intractable in this case.

Variational Inference

Since directly computing $p(\boldsymbol{z} | \boldsymbol{x})$ might not be feasible, we have to do some approximate inference. Variational inference leverages optimization to find the best distribution among a family of distributions parameterized by free “variational parameters” which approximates the posterior distribution $p(\boldsymbol{z} | \boldsymbol{x})$. The optimization finds the best distribution by finding the best settings of the parameters for the family of distributions. It should be noted that there are other non-optimization based methods to do such approximate inference, such as MCMC. It has advantages and drawbacks compared to variational inference. However, we are not going to elaborate here.

Optimization Goal

In variational inference, we specify a family of distributions $\mathscr{L}$ over the latent random variables. Each $q(\boldsymbol{z}) \in \mathscr{L}$ is a candidate approximation to the posterior. Our goal is to find the best candidate who has the smallest KL divergence to the posterior we want to compute.

Mathematically, our optimization goal is

$$

\DeclareMathOperator*{\argmin}{argmin}

\begin{aligned}

q^{*}(\boldsymbol{z}) &= \argmin_{q(\boldsymbol{z}) \in \mathscr{L}} D_{\text{KL}} \big( q(\boldsymbol{z}) || p(\boldsymbol{z} | \boldsymbol{x}) \big)

\end{aligned}

$$

where $q^{*}(\cdot)$ is the best approximation to the posterior in distribution family $\mathscr{L}$.

Let us take a look at the KL divergence properties first.

$$

\begin{aligned}

D_{\text{KL}} \big( q(\boldsymbol{z}) || p(\boldsymbol{z} | \boldsymbol{x}) \big) &= \int\limits_{\boldsymbol{z}} q(\boldsymbol{z}) \log \Big[ \frac{q(\boldsymbol{z})}{p(\boldsymbol{z} | \boldsymbol{x})} \Big] d\boldsymbol{z} \\

&= \int\limits_{\boldsymbol{z}} \Big[ q(\boldsymbol{z}) \log q(\boldsymbol{z}) \Big] d\boldsymbol{z} - \int\limits_{\boldsymbol{z}} \Big[ q(\boldsymbol{z}) \log p(\boldsymbol{z} | \boldsymbol{x}) \Big] d\boldsymbol{z} \\

&= \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] - \mathbb{E}_{q} \big[ \log p(\boldsymbol{z} | \boldsymbol{x}) \big] \\

&= \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] - \mathbb{E}_{q} \bigg[ \log \Big[ \frac{p(\boldsymbol{x}, \boldsymbol{z}) }{p(\boldsymbol{x})} \Big] \bigg] \\

&= \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] - \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{z}) \big] + \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big]\\

&= \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] - \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{z}) \big] + \log p(\boldsymbol{x})\\

\end{aligned}

$$

Note that $\mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big] = \log p(\boldsymbol{x})$ because the configuration of $\boldsymbol{z}$ will not affect the prior distribution of $\boldsymbol{x}$.

Because $D_{\text{KL}} \big( q(\boldsymbol{z}) || p(\boldsymbol{z} | \boldsymbol{x}) \big)$ contains a term $\log p(\boldsymbol{x})$, optimizing against $D_{\text{KL}} \big( q(\boldsymbol{z}) || p(\boldsymbol{z} | \boldsymbol{x}) \big)$ is not tractable. However, because $\log p(\boldsymbol{x})$ is a constant, so we could just ignore it during optimization.

We define evidence lower bound (ELBO) as

$$

\text{ELBO}(q) = \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{z}) \big] - \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big]

$$

This value is called evidence lower bound because it is the lower bound of logarithmic evidence.

$$

\begin{aligned}

\log p(\boldsymbol{x}) &= \text{ELBO}(q) + D_{\text{KL}} \big( q(\boldsymbol{z}) || p(\boldsymbol{z} | \boldsymbol{x}) \big) \\

&\geq \text{ELBO}(q)

\end{aligned}

$$

Remember that KL divergence is strictly non-negative. There are many ways to prove this and you can find a lot of simple proofs online.

Minimizing $D_{\text{KL}} \big( q(\boldsymbol{z}) || p(\boldsymbol{z} | \boldsymbol{x}) \big)$ is equivalent to maximizing $\text{ELBO}(q)$.

$$

\DeclareMathOperator*{\argmin}{argmin}

\DeclareMathOperator*{\argmax}{argmax}

\begin{aligned}

q^{*}(\boldsymbol{z}) &= \argmin_{q(\boldsymbol{z}) \in \mathscr{L}} D_{\text{KL}} \big( q(\boldsymbol{z}) || p(\boldsymbol{z} | \boldsymbol{x}) \big) \\

&= \argmax_{q(\boldsymbol{z}) \in \mathscr{L}} \text{ELBO(q)} \\

&= \argmax_{q(\boldsymbol{z}) \in \mathscr{L}} \Big\{ \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{z}) \big] - \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] \Big\}

\end{aligned}

$$

Mean-Field Variational Family

Before we start to do optimization, the variational family has to be chosen. One of the most generic variational family is mean-field.

$$

q(\boldsymbol{z}) = \prod_{j=1}^{m} q_j(z_j)

$$

Each latent variable $z_j$ is governed by its own variational factors, the distribution $q_j(z_j)$. That is to say, each latent variable is sampled from its corresponding distribution from the same variational family. All the distributions were independent of each other and we have to optimize each of them. The final optimal distribution which approximates the posterior $p(\boldsymbol{z} | \boldsymbol{x})$ is the product density of all the independent distributions.

Taking the previous Bayesian mixture of Gaussians as an example, in that case, using mean-field, we have

$$

\begin{aligned}

q(\boldsymbol{z}) &= q(\boldsymbol{\mu}, \boldsymbol{c}) \\

&= \prod_{k=1}^{K} q(\mu_k;m_k,s_k^2) \prod_{i=1}^{n} q(c_i; \varphi_i)\\

\end{aligned}

$$

The factor $q(\mu_k;m_k,s_k^2)$ is a Gaussian distribution for $\mu_k$ which is the mean of the $k$th Gaussian in the mixture. The factor $q(c_i; \varphi_i)$ is a distribution for $c_i$ which is the assigned cluster ID for the Gaussian for observation $x_i$ sampling. $\varphi_i$, a vector of length $K$, is the probabilities of selecting each Gaussian for observation $x_i$ sampling. In practice, we could only reach a local optimum, and $q(\boldsymbol{\mu}, \boldsymbol{c})$ will be as close to $p(\boldsymbol{\mu}, \boldsymbol{c} | \boldsymbol{x})$ as possible.

One of the drawbacks of mean-field variational approximation is that it could not catch the correlation between the latent variables as we have independence assumptions in the definition.

Coordinate Ascent Optimization

Using mean-field variational approximation, we can decompose the terms in ELBO as

$$

\mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] = \sum_{i=1}^{m} \mathbb{E}_{q_i} \big[ \log q(z_i) \big]

$$

Because $p(\boldsymbol{x}, \boldsymbol{z}) = p(\boldsymbol{x}) \prod_{i=1}^{m} p(z_i | z_{1:(i-1)}, \boldsymbol{x})$ according to the conditional probability chain rule,

$$

\begin{aligned}

\mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{z}) \big] &= \mathbb{E}_{q} \bigg[ \log \big[ p(\boldsymbol{x}) \prod_{i=1}^{m} p(z_j | z_{1:(i-1)}, \boldsymbol{x}) \big] \bigg] \\

&= \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) + \sum_{i=1}^{m} \log p(z_j | z_{1:(i-1)}, \boldsymbol{x}) \big] \\

&= \log p(\boldsymbol{x}) + \sum_{i=1}^{m} \mathbb{E}_{q} \big[ \log p(z_j | z_{1:(i-1)}, \boldsymbol{x}) \big]

\end{aligned}

$$

Therefore, ELBO could be written as

$$

\begin{aligned}

\text{ELBO}(q) &= \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{z}) \big] - \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] \\

&= \log p(\boldsymbol{x}) + \sum_{i=1}^{m} \mathbb{E}_{q} \big[ \log p(z_i | z_{1:(i-1)}, \boldsymbol{x}) \big] - \sum_{i=1}^{m} \mathbb{E}_{q_i} \big[ \log q(z_i) \big] \\

&= \log p(\boldsymbol{x}) + \sum_{i=1}^{m} \Big[ \mathbb{E}_{q} \big[ \log p(z_i | z_{1:(i-1)}, \boldsymbol{x}) \big] - \mathbb{E}_{q_i} \big[ \log q(z_i) \big] \Big]

\end{aligned}

$$

We further derive the coordinate ascent update for the distribution $q_j(z_j)$ of a latent variable $z_j$ once a time while keeping all the other distributions fixed. With the respect to $q_j(z_j)$, we put $z_j$ at the end of the variable list, that is to say,

$$

\begin{aligned}

\log p(\boldsymbol{x}, \boldsymbol{z}) &= \log \big[ p(\boldsymbol{x}) p( z_{-j} | \boldsymbol{x}) p( z_{j} | z_{-j}, \boldsymbol{x}) \big] \\

&= \log p(\boldsymbol{x}) + \log p( z_{-j} | \boldsymbol{x}) + \log p( z_{j} | z_{-j}, \boldsymbol{x})

\end{aligned}

$$

We rewrite ELBO respective to $q_j(z_j)$,

$$

\begin{aligned}

& \text{ELBO}(q) \\

&= \text{ELBO}(q_j) \\

&= \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{z}) \big] - \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] \\

&= \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) + \log p( z_{-j} | \boldsymbol{x}) + \log p( z_{j} | z_{-j}, \boldsymbol{x}) \big] - \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] \\

&= \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{-j} | \boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{j} | z_{-j}, \boldsymbol{x}) \big] - \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] \\

&= \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{-j} | \boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{j} | z_{-j}, \boldsymbol{x}) \big] \\

&\qquad - \bigg[ \mathbb{E}_{q_j} \big[ \log q_j(z_j) \big] + \sum_{i \neq j}^{} \mathbb{E}_{q_i} \big[ \log q_i(z_i) \big] \bigg]\\

&= \mathbb{E}_{q} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] - \mathbb{E}_{q_j} \big[ \log q_j(z_j) \big] \\

&\qquad + \bigg[ \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{-j} | \boldsymbol{x}) \big] - \sum_{i \neq j}^{} \mathbb{E}_{q_i} \big[ \log q_i(z_i) \big] \bigg] \\

&= \int \limits_{\boldsymbol{z}} q(\boldsymbol{z}) \log p(z_j | z_{-j}, \boldsymbol{x}) d \boldsymbol{z} - \mathbb{E}_{q_j} \big[ \log q_j(z_j) \big] \\

&\qquad + \bigg[ \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{-j} | \boldsymbol{x}) \big] - \sum_{i \neq j}^{} \mathbb{E}_{q_i} \big[ \log q_i(z_i) \big] \bigg]\\

&= \int \limits_{\boldsymbol{z}} q_j(z_j) \big[ q_{-j}(z_{-j}) \log p(z_j | z_{-j}, \boldsymbol{x}) d z_{-j} \big] d z_j - \mathbb{E}_{q_j} \big[ \log q_j(z_j) \big] \\

&\qquad + \bigg[ \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{-j} | \boldsymbol{x}) \big] - \sum_{i \neq j}^{} \mathbb{E}_{q_i} \big[ \log q_i(z_i) \big] \bigg]\\

&= \int \limits_{z_j} q_j(z_j) \Big[ \int \limits_{z_{-j}} q_{-j}(z_{-j}) \log p(z_j | z_{-j}, \boldsymbol{x}) d z_{-j} \Big] d z_j - \mathbb{E}_{q_j} \big[ \log q_j(z_j) \big] \\

&\qquad + \bigg[ \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{-j} | \boldsymbol{x}) \big] - \sum_{i \neq j}^{} \mathbb{E}_{q_i} \big[ \log q_i(z_i) \big] \bigg]\\

&= \int \limits_{z_j} q_j(z_j) \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] d z_j - \mathbb{E}_{q_j} \big[ \log q_j(z_j) \big] \\

&\qquad + \bigg[ \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{-j} | \boldsymbol{x}) \big] - \sum_{i \neq j}^{} \mathbb{E}_{q_i} \big[ \log q_i(z_i) \big] \bigg]\\

&= \int \limits_{z_j} q_j(z_j) \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] d z_j - \int \limits_{z_j} q_j(z_j) \log q_j(z_j) d z_j \\

&\qquad + \bigg[ \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}) \big] + \mathbb{E}_{q} \big[ \log p( z_{-j} | \boldsymbol{x}) \big] - \sum_{i \neq j}^{} \mathbb{E}_{q_i} \big[ \log q_i(z_i) \big] \bigg]\\

\end{aligned}

$$

Because the rightmost terms have nothing to do with $q_j(z_j)$, we further have the optimization problem.

$$

\DeclareMathOperator*{\argmax}{argmax}

\begin{aligned}

\argmax_{q_j} \text{ELBO}(q_j) &= \argmax_{q_j} \Big[ \mathbb{E}_{q} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] - \mathbb{E}_{q_j} \big[ \log q_j(z_j) \big] \Big] \\

&= \argmax_{q_j} \Big[ \int \limits_{z_j} q_j(z_j) \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] d z_j - \int \limits_{z_j} q_j(z_j) \log q_j(z_j) d z_j \Big]

\end{aligned}

$$

It should be noted that some symbols used above are equivalent, such as $q(z_j) = q_j(z_j)$.

This optimization problem is subject to constrain $\int \limits_{z_j} q_j(z_j)d z_j = 1$.

Using Lagrange multiplier and Calculus for variations, it is equivalent to get the local optimum of the following formula.

$$

F(q_j) = \int \limits_{z_j} q_j(z_j) \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] d z_j - \int \limits_{z_j} q_j(z_j) \log q_j(z_j) d z_j + \lambda \big( \int \limits_{z_j} q_j(z_j)d z_j - 1 \big)

$$

Take the functional derivative of $F(q_j)$,

$$

\begin{aligned}

& \frac{\delta F(q_j)}{\delta q_j} \\

&= \big( 1 \times \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] + q_j(z_j) \times 0 \big) - \big( 1 \times \log q_j(z_j) + q_j(z_j) \times \frac{1}{q_j(z_j)} \big) + \lambda \big( 1 - 0 \big) \\

&= \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] - \log q_j(z_j) -1 + \lambda

\end{aligned}

$$

$$

\frac{d F(q_j)}{d \lambda} = \int \limits_{z_j} q_j(z_j)d z_j - 1

$$

We want $\frac{\delta F(q_j)}{\delta q_j} = 0$,

$$

\log q_j^*(z_j) = \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] -1 + \lambda

$$

$$

\begin{aligned}

q_j^*(z_j) &= \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] -1 + \lambda \big\} \\

&= \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\} \times \exp \{ -1 + \lambda \}

\end{aligned}

$$

We also want $\frac{d F(q_j)}{d \lambda} = 0$,

$$

\begin{aligned}

\int \limits_{z_j} q_j^*(z_j)d z_j - 1 &= \exp \{ -1 + \lambda \} \int \limits_{z_j} \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\} d z_j - 1 = 0

\end{aligned}

$$

$$

\exp \{ -1 + \lambda \} = \frac{1}{ \int \limits_{z_j} \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\} d z_j}

$$

Therefore,

$$

q_j^*(z_j) = \frac{\exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\}}{ \int \limits_{z_j} \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\} d z_j}

$$

$$

q_j^*(z_j) \propto \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\}

$$

Because of the conditional probability,

$$

\begin{aligned}

p(z_j | z_{-j}, \boldsymbol{x}) &= \frac{p(z_j, z_{-j}, \boldsymbol{x})}{p(z_{-j}, \boldsymbol{x})} \\

&= \frac{p(\boldsymbol{z}, \boldsymbol{x})}{p(z_{-j}, \boldsymbol{x})}

\end{aligned}

$$

Because $\frac{p(\boldsymbol{z}, \boldsymbol{x})}{p(z_{-j}, \boldsymbol{x})}$ does not involve $z_j$, we could also equivalently derive

$$

\begin{aligned}

q_j^*(z_j) &= \frac{\exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\}}{ \int \limits_{z_j} \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\} d z_j} \\

&= \frac{\exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) - \log p(z_{-j}, \boldsymbol{x}) \big] \big\}}{ \int \limits_{z_j} \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) - \log p(z_{-j}, \boldsymbol{x}) \big] \big\} d z_j} \\

&= \frac{\exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] \big\} / \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_{-j}, \boldsymbol{x}) \big] \big\}}{ \int \limits_{z_j} \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] \big\} / \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_{-j}, \boldsymbol{x}) \big] \big\} d z_j} \\

&= \frac{\exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] \big\} / \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_{-j}, \boldsymbol{x}) \big] \big\}}{ \bigg[ \int \limits_{z_j} \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] \big\} d z_j \bigg] / \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_{-j}, \boldsymbol{x}) \big] \big\}} \\

&= \frac{\exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] \big\} }{ \int \limits_{z_j} \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] \big\} d z_j } \\

\end{aligned}

$$

Similarly,

$$

q_j^*(z_j) \propto \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] \big\}

$$

The above update formulas could also be derived without using Calculus for variations. Similar to how we derived $\text{ELBO}(q_j)$:

$$

\begin{aligned}

& \text{ELBO}(q) \\

&= \text{ELBO}(q_j) \\

&= \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{z}) \big] - \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] \\

&= \int \limits_{\boldsymbol{z}} q(\boldsymbol{z}) \log p(\boldsymbol{z}, \boldsymbol{x}) d \boldsymbol{z} - \bigg[ \mathbb{E}_{q_j} \big[ \log q_j(z_j) \big] + \sum_{i \neq j}^{} \mathbb{E}_{q_i} \big[ \log q_i(z_i) \big] \bigg]\\

&= \int \limits_{z_j} q_j(z_j) \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] d z_j - \int \limits_{z_j} q_j(z_j) \log q_j(z_j) d z_j - \bigg[ \sum_{i \neq j}^{} \mathbb{E}_{q_i} \big[ \log q_i(z_i) \big] \bigg]\\

&= \int \limits_{z_j} q_j(z_j) \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] d z_j - \int \limits_{z_j} q_j(z_j) \log q_j(z_j) d z_j + \text{const}\\

\end{aligned}

$$

We define a new distribution

$$

\log \tilde{p_j}(z_j, \boldsymbol{x}) = \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] + \text{const}

$$

Then

$$

\begin{aligned}

\text{ELBO}(q) &= \text{ELBO}(q_j) \\

&= \int \limits_{z_j} q_j(z_j) \log \tilde{p_j}(z_j, \boldsymbol{x}) d z_j - \int \limits_{z_j} q_j(z_j) \log q_j(z_j) d z_j + \text{const}\\

&= \int \limits_{z_j} q_j(z_j) \log \frac{\tilde{p_j}(z_j, \boldsymbol{x})}{q_j(z_j)}d z_j + \text{const} \\

&= -\text{KL}\big( q_j(z_j) || \tilde{p_j}(z_j, \boldsymbol{x}) \big) + \text{const} \\

\end{aligned}

$$

Because KL divergence is non-negative, when $q_j(z_j) = \tilde{p_j}(z_j, \boldsymbol{x})$, $\text{KL}\big( q_j(z_j) || \tilde{p_j}(z_j, \boldsymbol{x}) \big) = 0$, $\text{ELBO}(q_j)$ is maximized.

$q_j(z_j) = \tilde{p_j}(z_j, \boldsymbol{x})$ is equivalent to

$$

q_j^*(z_j) \propto \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] \big\}

$$

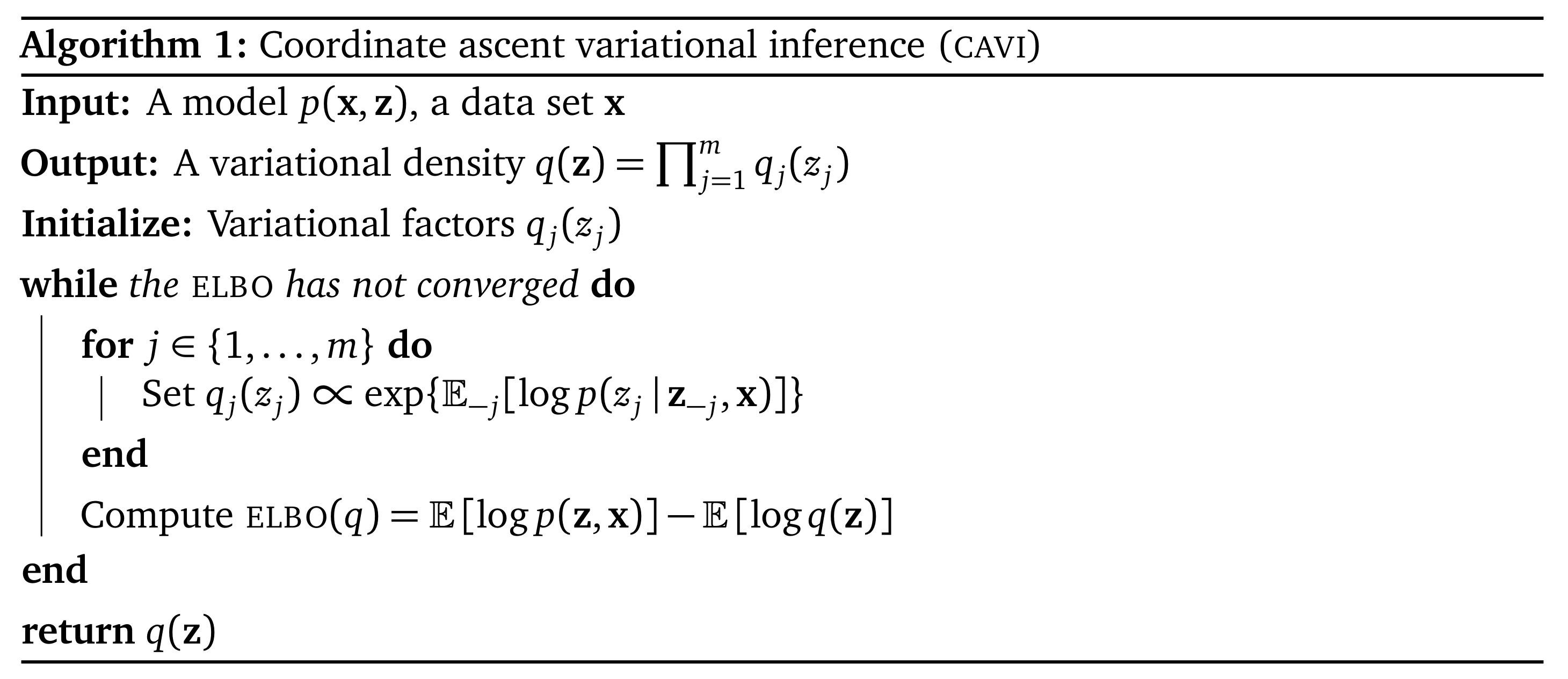

Coordinate Ascent Pseudo-Code for Variational Inference

In practice, when we update parameters of distribution $q_j$ using the expression in the pseudo-code, we may have to normalize it against the sum of exponential terms from all the $m$ latent variables, i.e., the sum is the denominator.

Mean-Field Variational Inference for Mixture of Gaussians

Recall the definition of ELBO is:

$$

\begin{aligned}

\text{ELBO}(q) &= \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{z}) \big] - \mathbb{E}_{q} \big[ \log q(\boldsymbol{z}) \big] \\

\end{aligned}

$$

To compute the ELBO for the mixture of Gaussians example we have with mean-field assumptions:

$$

\begin{aligned}

& \text{ELBO}(\boldsymbol{m}, \boldsymbol{s}^2, \boldsymbol{\varphi}) \\

&= \mathbb{E}_{q} \big[ \log p(\boldsymbol{x}, \boldsymbol{\mu}, \boldsymbol{c}) \big] - \mathbb{E}_{q} \big[ \log q(\boldsymbol{\mu}, \boldsymbol{c}) \big] \\

&= \mathbb{E}_{q} \Big[ \log \big[ p(\boldsymbol{\mu}, \boldsymbol{c}) p( \boldsymbol{x} | \boldsymbol{\mu}, \boldsymbol{c}) \big] \Big] - \mathbb{E}_{q} \Big[ \log q(\boldsymbol{\mu}, \boldsymbol{c}) \Big]\\

&= \mathbb{E}_{q} \Big[ \log \big[ p(\boldsymbol{\mu}) p(\boldsymbol{c}) p( \boldsymbol{x} | \boldsymbol{\mu}, \boldsymbol{c}) \big] \Big] - \mathbb{E}_{q} \Big[ \log \big[ q(\boldsymbol{\mu}) q(\boldsymbol{c}) \big] \Big]\\

&= \mathbb{E}_{q} \Big[ \log p(\boldsymbol{\mu}) + \log p(\boldsymbol{c}) + \log p( \boldsymbol{x} | \boldsymbol{\mu}, \boldsymbol{c}) \Big] - \mathbb{E}_{q} \Big[ \log q(\boldsymbol{\mu}) + \log q(\boldsymbol{c}) \Big]\\

&= \mathbb{E}_{q} \Big[ \log p(\boldsymbol{\mu}) \Big] + \mathbb{E}_{q} \Big[ \log p(\boldsymbol{c}) \Big] + \mathbb{E}_{q} \Big[ \log p( \boldsymbol{x} | \boldsymbol{\mu} , \boldsymbol{c}) \Big] - \mathbb{E}_{q} \Big[ \log q(\boldsymbol{\mu}) \Big] \\

&\qquad - \mathbb{E}_{q} \Big[ \log q(\boldsymbol{c}) \Big]\\

&= \sum_{k=1}^{K} \mathbb{E}_{q} \Big[ \log p(\mu_k) \Big] + \sum_{i=1}^{n} \mathbb{E}_{q} \Big[ \log p(c_i) \Big] + \sum_{i=1}^{n} \mathbb{E}_{q} \Big[ \log p( x_i | \boldsymbol{\mu} , c_i) \Big] \\

&\qquad - \sum_{k=1}^{K} \mathbb{E}_{q} \Big[ \log q(\mu_k) \Big] - \sum_{i=1}^{n} \mathbb{E}_{q} \Big[ \log q(c_i) \Big]\\

&= \sum_{k=1}^{K} \mathbb{E}_{q} \Big[ \log p(\mu_k) ; m_k, s_k^2 \Big] + \sum_{i=1}^{n} \mathbb{E}_{q} \Big[ \log p(c_i) ; \varphi_i \Big] \\

&\qquad + \sum_{i=1}^{n} \mathbb{E}_{q} \Big[ \log p( x_i | \boldsymbol{\mu} , c_i) ; \varphi_i, \boldsymbol{m}, \boldsymbol{s}^2 \Big] - \sum_{k=1}^{K} \mathbb{E}_{q} \Big[ \log q(\mu_k; m_k, s_k^2) \Big] - \sum_{i=1}^{n} \mathbb{E}_{q} \Big[ \log q(c_i; \varphi_i) \Big]\\

\end{aligned}

$$

Each term in the ELBO has a closed form of solution. Note that $\log p(\mu_k)$ and $\log p(c_i)$ are the priors that are independent to the variational family. In our case,

$$

p(\mu_k) = \frac{1}{\sqrt{2\pi \sigma^2}} e^{-\frac{\mu_k^2}{2 \sigma^2}}

$$

$$

p(c_i) = \frac{1}{K}

$$

$$

\begin{aligned}

\mathbb{E}_{q} \Big[ \log q(\mu_k; m_k, s_k^2) \Big] &= \int_{-\infty}^{\infty} q(\mu_k; m_k, s_k^2) \log q(\mu_k; m_k, s_k^2) d \mu_k

\end{aligned}

$$

where

$$

q(\mu_k; m_k, s_k^2) = \frac{1}{\sqrt{2\pi s_k^2}} e^{-\frac{(\mu_k - m_k)^2}{2 s_k^2}}

$$

In addition,

$$

\begin{aligned}

\mathbb{E}_{q} \Big[ \log q(c_i; \varphi_i) \Big] &= \int_{-\infty}^{\infty} q(c_i; \varphi_i) \log q(c_i; \varphi_i) d c_i \\

&= \sum_{k=1}^{K} \varphi_{ik} \log \varphi_{ik} \\

\end{aligned}

$$

Finally,

$$

\begin{aligned}

\mathbb{E}_{q} \Big[ \log p( x_i | \boldsymbol{\mu} , c_i) ; \varphi_i, \boldsymbol{m}, \boldsymbol{s}^2 \Big] &= \int \limits_{c_i} \int \limits_{\boldsymbol{\mu}} p(\boldsymbol{\mu}) p(c_i) \log p( x_i | \boldsymbol{\mu} , c_i) d \boldsymbol{\mu} d c_i \\

\end{aligned}

$$

where

$$

p(\boldsymbol{\mu}) = \Big[ \frac{1}{\sqrt{2\pi \sigma^2}} \Big] ^ K e^{-\frac{\sum_{k=1}^{K} \mu_k^2}{2 \sigma^2}}

$$

$$

p(c_i) = \varphi_{i, \text{max_idx}{(c_i)}}

$$

$$

p( x_i | \boldsymbol{\mu} , c_i) = \frac{1}{\sqrt{2\pi}} e^{-\frac{(x_i - c_i^{\top} \boldsymbol{\mu})^2}{2}}

$$

Please double-check our assumed model with the prior information to confirm the above settings.

So far we found ways to calculate $\text{ELBO}(q)$ in the coordinate ascent algorithm to measure the convergence. Next we are going to see how to do the update for the variational inference family instances. Recall we the following formula to update the parameters in the variational family.

$$

q_j^*(z_j) \propto \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(\boldsymbol{z}, \boldsymbol{x}) \big] \big\}

$$

In our case, for latent variable $c_i$

$$

\begin{aligned}

q^*(c_i; \varphi_i) &\propto \exp \big\{ \mathbb{E}_{q_{-c_i}} \big[ \log p(\boldsymbol{\mu}, \boldsymbol{c}, \boldsymbol{x}) \big] \big\}

\end{aligned}

$$

$$

\begin{aligned}

& \mathbb{E}_{q_{-c_i}} \Big[ \log p(\boldsymbol{\mu}, \boldsymbol{c}, \boldsymbol{x}) \Big] \\

&= \mathbb{E}_{q_{-c_i}} \Big[ \log \big[ p(\boldsymbol{\mu}) p(\boldsymbol{c}) p(\boldsymbol{x} | \boldsymbol{\mu}, \boldsymbol{c}) \big] \Big] \\

&= \mathbb{E}_{q_{-c_i}} \Big[ \log \big[ p(\boldsymbol{\mu}) p(c_i) p(c_{-i}) p(x_i | \boldsymbol{\mu}, c_i) p(x_{-i} | \boldsymbol{\mu}, c_{-i}) \big] \Big]\\

&= \mathbb{E}_{q_{-c_i}} \Big[ \log p(x_i | \boldsymbol{\mu}, c_i) \Big] + \mathbb{E}_{q_{-c_i}} \Big[ \log p(\boldsymbol{\mu}) \Big] + \mathbb{E}_{q_{-c_i}} \Big[ \log p(c_i) \Big] \\

&\qquad + \mathbb{E}_{q_{-c_i}} \Big[ \log \big[ p(c_{-i}) p(x_{-i} | \boldsymbol{\mu}, c_{-i}) \big] \Big] \\

&= \mathbb{E}_{q_{-c_i}} \Big[ \log p(x_i | \boldsymbol{\mu}, c_i) \Big] + \mathbb{E}_{q_{-c_i}} \Big[ \log p(\boldsymbol{\mu}) \Big] + \log p(c_i) \\

&\qquad + \mathbb{E}_{q_{-c_i}} \Big[ \log \big[ p(c_{-i}) p(x_{-i} | \boldsymbol{\mu}, c_{-i}) \big] \Big]

\end{aligned}

$$

From the above derivation, we could see that only the following terms $\mathbb{E} \Big[ \log p(x_i | \boldsymbol{\mu}, c_i) \Big]$ and $\log p(c_i)$ are dependent on $c_i$, therefore, we have

$$

\begin{aligned}

q^*(c_i; \varphi_i) &\propto \exp \big\{ \mathbb{E}_{q_{-c_i}} \Big[ \log p(x_i | \boldsymbol{\mu}, c_i) \Big] + \log p(c_i) \big\}

\end{aligned}

$$

According to the priors,

$$

\log p(c_i) = -\log K

$$

Because

$$

p(x_i | \boldsymbol{\mu}, c_i) = \prod_{k=1}^{K} p(x_i|\mu_k)^{c_{ik}}

$$

We have

$$

\begin{aligned}

\mathbb{E}_{q_{-c_i}} \big[ \log p(x_i | \boldsymbol{\mu}, c_i) \big] &= \sum_{k=1}^{K} c_{ik} \mathbb{E}_{q} \big[ \log p(x_i | \mu_k); m_k, s_k^2 \big] \\

&= \sum_{k=1}^{K} c_{ik} \mathbb{E}_{q} \big[ -(x_i - \mu_k)^2 / 2; m_k, s_k^2 \big] + \text{const} \\

&= \sum_{k=1}^{K} c_{ik} \Big[ \mathbb{E}_{q} \big[ \mu_k; m_k, s_k^2 \big] x_i - \mathbb{E}_{q} \big[ \mu_k^2; m_k, s_k^2 \big]/2 \Big] + \text{const} \\

\end{aligned}

$$

All the terms that are independent to $c_i$ were moved to the const term.

$$

\begin{aligned}

q^*(c_i; \varphi_i) &\propto \exp \big\{ \sum_{k=1}^{K} c_{ik} \Big[ \mathbb{E}_{q} \big[ \mu_k; m_k, s_k^2 \big] x_i - \mathbb{E}_{q} \big[ \mu_k^2; m_k, s_k^2 \big]/2 \Big] \big\}

\end{aligned}

$$

Here

$$

\mathbb{E}_{q} \big[ \mu_k; m_k, s_k^2 \big] = m_k

$$

$$

\begin{aligned}

\mathbb{E}_{q} \big[ \mu_k^2; m_k, s_k^2 \big] &= \mathbb{Var}_{q} \big[ \mu_k; m_k, s_k^2 \big] + \Big[ \mathbb{E}_{q} \big[ \mu_k; m_k, s_k^2 \big] \Big]^2 \\

&= s_k^2 + m_k^2

\end{aligned}

$$

Thus we have

$$

\begin{aligned}

q^*(c_i; \varphi_i) &\propto \exp \big\{ \sum_{k=1}^{K} c_{ik} \Big[ m_k x_i - (s_k^2 + m_k^2)/2 \Big] \big\}

\end{aligned}

$$

However, the above updates were not normalized. We still need a normalizer value to update $\varphi_{ik}$ in practice.

$$

\begin{aligned}

\int\limits_{c_i} \exp \big\{ m_k x_i -(s_k^2 + m_k^2) / 2 \big\} d c_i &= \sum_{c_i=1}^{K} \exp \big\{ \sum_{k=1}^{K} c_{ik} \Big[ m_k x_i - (s_k^2 + m_k^2)/2 \Big] \big\}\\

&= \sum_{k=1}^{K} \exp \big\{ m_k x_i -(s_k^2 + m_k^2) / 2 \big\}

\end{aligned}

$$

Because $q^*(c_i = k; \varphi_i) = \varphi_{ik}$, to update each hyperparameter for $q^{\ast}(c_i)$, for $k = \\{1,2,\cdots,K\\}$

$$

\varphi_{ik} \propto \exp \big\{ m_k x_i -(s_k^2 + m_k^2) / 2 \big\}

$$

With normalizer, we have the final update equation for $\varphi_{ik}$, for $k = \\{1,2,\cdots,K\\}$

$$

\varphi_{ik} = \frac{ \exp \big\{ m_k x_i -(s_k^2 + m_k^2) / 2 \big\} }{ \sum_{k=1}^{K} \exp \big\{ m_k x_i -(s_k^2 + m_k^2) / 2 \big\} }

$$

Similarly, for latent variable $\mu_k$, we have

$$

\begin{aligned}

q^*(\mu_k;m_k,s_k^2) &\propto \exp \big\{ \sum_{i=1}^{n} \mathbb{E}_{q_{-\mu_k}} \Big[ \log p(x_i | \boldsymbol{\mu}, c_i) \Big] + \log p(\mu_k) \big\}

\end{aligned}

$$

According to the priors,

$$

\log p(\mu_k) = -\mu_k^2 / 2 \sigma^2

$$

$$

\begin{aligned}

\sum_{i=1}^{n} \mathbb{E}_{q_{-\mu_k}} \Big[ \log p(x_i | \boldsymbol{\mu}, c_i) \Big] &= \sum_{i=1}^{n} \mathbb{E}_{q_{-\mu_k}} \Big[ c_{ik} \log p(x_i | \mu_k) \Big] + \text{const} \\

&= \sum_{i=1}^{n} \mathbb{E}_{q_{-\mu_k}} \Big[ c_{ik} \Big] \mathbb{E}_{q_{-\mu_k}} \Big[ \log p(x_i | \mu_k) \Big] + \text{const} \\

&= \sum_{i=1}^{n} \varphi_{ik} \log p(x_i | \mu_k) + \text{const} \\

&= \sum_{i=1}^{n} \varphi_{ik} \big[-(x_i - \mu_k)^2 / 2 \big] + \text{const} \\

&= \sum_{i=1}^{n} \varphi_{ik} x_i \mu_k - \sum_{i=1}^{n} \varphi_{ik} \mu_k^2 / 2 + \text{const} \\

\end{aligned}

$$

Therefore,

$$

q^*(\mu_k;m_k,s_k^2) \propto \exp \big\{ \big( \sum_{i=1}^{n} \varphi_{ik} x_i \big) \mu_k - 1/2 \big( \sigma^2 + \sum_{i=1}^{n} \varphi_{ik} \big) \mu_k^2 \big\}

$$

Because $q(\mu_k)$ is Gaussian, it is not hard to see that to update the hyperparameters, we have

$$

m_k = \frac{\sum_{i=1}^{n} \varphi_{ik} x_i}{\sigma^2 + \sum_{i=1}^{n} \varphi_{ik}}

$$

$$

s_k^2 = \frac{1}{\sigma^2 + \sum_{i=1}^{n} \varphi_{ik}}

$$

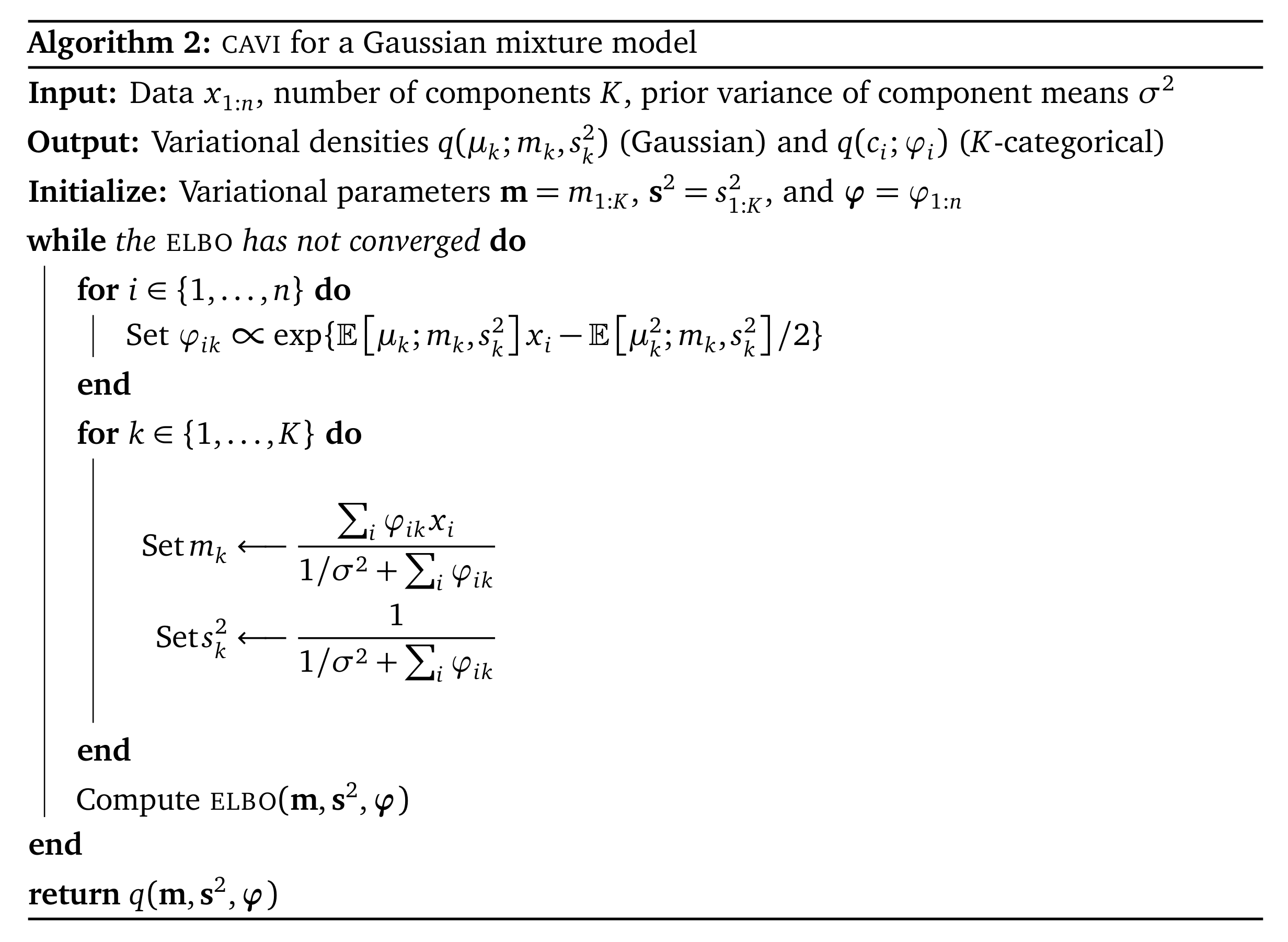

Coordinate Ascent Pseudo-Code for Gaussian Mixture Variational Inference

Variational Inference with Exponential Family

If the posterior distribution and the approximate distribution are all from the exponential family, deriving the update function mathematically will be much easier. This section assumes that you have read my blog post of “Introduction to Exponential Family”.

Suppose each complete conditional is in the exponential family

$$

p(z_j | z_{-j}, \boldsymbol{x}) = h(z_j) \exp \{T(z_j)^{\top} \eta_j(z_{-j}, \boldsymbol{x}) - A(\eta_j(z_{-j}, \boldsymbol{x}))\}

$$

Because

$$

q_j^{\ast}(z_j) \propto \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\}

$$

We have

$$

\begin{aligned}

q_j^{\ast}(z_j) &\propto \exp \big\{ \mathbb{E}_{q_{-j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}) \big] \big\} \\

&= \exp \bigg\{ \mathbb{E}_{q_{-j}} \Big[ \log \big[ h(z_j) \exp \{T(z_j)^{\top} \eta_j(z_{-j}, \boldsymbol{x}) - A(\eta_j(z_{-j}, \boldsymbol{x}))\} \big] \Big] \bigg\}\\

&= \exp \Big\{ \mathbb{E}_{q_{-j}} \big[ \log h(z_j) + T(z_j)^{\top} \eta_j(z_{-j}, \boldsymbol{x}) - A(\eta_j(z_{-j}, \boldsymbol{x})) \big] \Big\} \\

&= \exp \Big\{ \mathbb{E}_{q_{-j}} \big[ \log h(z_j) \big] + \mathbb{E}_{q_{-j}} \big[ T(z_j)^{\top} \eta_j(z_{-j}, \boldsymbol{x}) \big] - \mathbb{E}_{q_{-j}} \big[ A(\eta_j(z_{-j}, \boldsymbol{x})) \big] \Big\} \\

&\propto \exp \Big\{ \mathbb{E}_{q_{-j}} \big[ \log h(z_j) \big] + \mathbb{E}_{q_{-j}} \big[ T(z_j)^{\top} \eta_j(z_{-j}, \boldsymbol{x}) \big] \Big\} \\

&= \exp \Big\{ \log h(z_j) + T(z_j) ^{\top} \mathbb{E}_{q_{-j}} \big[ \eta_j(z_{-j}, \boldsymbol{x}) \big] \Big\} \\

&= h(z_j) \exp \Big\{T(z_j) ^{\top} \mathbb{E}_{q_{-j}} \big[ \eta_j(z_{-j}, \boldsymbol{x}) \big] \Big\} \\

\end{aligned}

$$

Therefore, $q_j^{\ast}(z_j)$ is in the same family as $p(z_j | z_{-j}, \boldsymbol{x})$, and the natural parameter for $q_j^{\ast}(z_j)$ should be update to the expected value of the natural parameter of the posterior distribution $\mathbb{E}_{q_{-j}} \big[ \eta_j(z_{-j}, \boldsymbol{x}) \big]$.

Let’s further take a look at the conditionally conjugate models. Let $\beta$ be a vector of global latent variables, which potentially govern any of the data. Let $\boldsymbol{z}$ be a vector of local latent variables, whose component only governs the $i$th observation. The joint density is

$$

p(\beta, \boldsymbol{z}, \boldsymbol{x}) = p(\beta) \prod_{i=1}^{n}p(z_i, x_i | \beta)

$$

If we assume $p(z_i, x_i | \beta)$ is in the exponential family.

$$

p(z_i, x_i | \beta) = h(z_i, x_i) \exp \{ T(z_i, x_i)^{\top} \beta - A_l(\beta) \}

$$

I have shown in my “Introduction to Exponential Family” post that one of its conjugate priors from the exponential family must have the natural form

$$

\begin{aligned}

p(\beta) &= h(\beta) \exp \{ T(\beta)^{\top} \alpha - A_g(\alpha) \} \\

&= h(\beta) \exp \Bigg\{ \begin{bmatrix} \beta \\ - A_l(\beta) \end{bmatrix}^{\top} \begin{bmatrix} \alpha_1 \\ \alpha_2 \end{bmatrix} - A_g(\alpha) \Bigg\}

\end{aligned}

$$

Here, $\alpha$ is the natural parameter for $p(\beta)$, $\beta$ and $\alpha_1$ have the same dimensionality, and $\alpha_2$ is a scalar. The test statistics for $T(\beta) = [\beta, -A_l(\beta)]$ which is a column vector.

Then the complete likelihood

$$

\begin{aligned}

p(\boldsymbol{z}, \boldsymbol{x} | \beta) &= \prod_{i=1}^{n} p(z_i, x_i | \beta) \\

&= \prod_{i=1}^{n} \big[ h(z_i, x_i) \exp \{ T(z_i, x_i)^{\top} \beta - A_l(\beta) \} \big] \\

&= \prod_{i=1}^{n} \big[ h(z_i, x_i) \big] \prod_{i=1}^{n} \big[ \exp \{ T(z_i, x_i)^{\top} \beta - A_l(\beta) \} \big] \\

&= h(\boldsymbol{z}, \boldsymbol{x}) \exp \Big\{ \big[\sum_{i=1}^{N} T(z_i, x_i)\big]^{\top} \beta - n A_l(\beta) \Big\}

\end{aligned}

$$

The complete posterior must have the form

$$

\begin{aligned}

p(\beta | \boldsymbol{z}, \boldsymbol{x}) &\propto p(\boldsymbol{z}, \boldsymbol{x} | \beta) p(\beta) \\

&= h(\beta) \exp\{T(\beta)^{\top} \hat{\alpha}\} \\

&= h(\beta) \exp\Bigg\{\begin{bmatrix} \beta \\ - A_l(\beta) \end{bmatrix}^{\top} \begin{bmatrix} \hat{\alpha}_1 \\ \hat{\alpha}_2 \end{bmatrix}\Bigg\} \\

\end{aligned}

$$

where $\hat{\alpha} = [\hat{\alpha}_1, \hat{\alpha}_2]$ is the natural parameter for $p(\beta | \boldsymbol{z}, \boldsymbol{x})$

$$

\hat{\alpha}_1 = \alpha_1 + \big[\sum_{i=1}^{N} T(z_i, x_i)\big]

$$

$$

\hat{\alpha}_2 = \alpha_2 + n

$$

For $p(z_i|\boldsymbol{x}, z_{-i} \beta)$, because of the independent assumption,

$$

p(z_i|\boldsymbol{x}, z_{-i} \beta) = p(z_i | x_i, \beta)

$$

We also assume this is in the exponential family.

$$

\begin{aligned}

p(z_i|\boldsymbol{x}, z_{-i}, \beta) &= p(z_i | x_i, \beta) \\

&= h(z_i) \exp\{ T(z_i)^{\top} \eta(x_i, \beta) - A(\eta(x_i, \beta)) \}

\end{aligned}

$$

Because

$$

q_{z_j}^{\ast}(z_j) \propto \exp \big\{ \mathbb{E}_{q_{-z_j}} \big[ \log p(z_j | z_{-j}, \boldsymbol{x}, \beta) \big] \big\} \\

q_\beta^{\ast}(\beta) \propto \exp \big\{ \mathbb{E}_{q_{-\beta}} \big[ \log p(\beta | \boldsymbol{z}, \boldsymbol{x}) \big] \big\}

$$

We know from the above derivation that the update for the natural parameters for $q_{z_j}^{\ast}(z_j)$ and $q_\beta^{\ast}(\beta)$ respectively.

We assume the natural parameter for $q_{z_j}^{\ast}(z_j)$ and $q_\beta^{\ast}(\beta)$ are $\varphi_i$ and $\lambda$ respectively.

To update $\lambda$,

$$

\begin{aligned}

\lambda &= \mathbb{E}_{q_{-\beta}} \big[ \hat{\alpha} \big] \\

&= \mathbb{E}_{q_{-\beta}} \Bigg[ \begin{bmatrix} \hat{\alpha}_1 \\ \hat{\alpha}_2 \end{bmatrix} \Bigg] \\

&= \mathbb{E}_{q_{-\beta}} \Bigg[ \begin{bmatrix} \alpha_1 + \big[\sum_{i=1}^{N} T(z_i, x_i)\big] \\ \hat\alpha_2 + n \end{bmatrix} \Bigg] \\

&= \begin{bmatrix} \mathbb{E}_{q_{-\beta}} \Big[ \alpha_1 + \big[\sum_{i=1}^{N} T(z_i, x_i)\big] \Big] \\ \mathbb{E}_{q_{-\beta}} [\alpha_2 + n] \end{bmatrix} \\

&= \begin{bmatrix} \alpha_1 + \sum_{i=1}^{N} \mathbb{E}_{q_{-\beta}} \big[T(z_i, x_i)\big] \\ \alpha_2 + n \end{bmatrix} \\

&= \begin{bmatrix} \alpha_1 + \sum_{i=1}^{N} \mathbb{E}_{\varphi} \big[T(z_i, x_i)\big] \\ \alpha_2 + n \end{bmatrix} \\

\end{aligned}

$$

To update $\varphi_i$,

$$

\begin{aligned}

\varphi_i &= \mathbb{E}_{q_{-z_j}} \big[ \eta(x_i, \beta) \big]\\

&= \mathbb{E}_{\lambda} \big[ \eta(x_i, \beta) \big]

\end{aligned}

$$

We will take a look at a concrete example of variational inference with exponential family, which is the Latent Dirichlet Allocation (LDA) model, using updates for natural parameters in one of my upcoming posts.

Final Remarks

There are a lot of missing details in the variational inference documentation and literature. I spent three days going through all the details and fill all the missing holes so that the readers could study the topic much easier.

References

Introduction to Variational Inference

https://leimao.github.io/article/Introduction-to-Variational-Inference/