CUDA Shared Memory Bank

Introduction

Memory banks are a key concept for CUDA shared memory. To get the best performance out of a CUDA kernel, the user has to pay attention to how threads access memory banks and avoid bank conflicts.

In this blog post, I would like to quickly discuss memory banks for CUDA shared memory.

Memory Bank

Memory Bank Properties

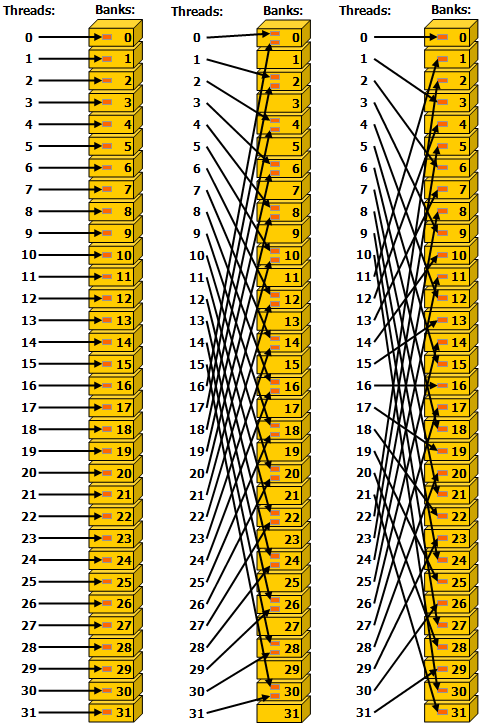

To achieve high memory bandwidth for concurrent accesses, shared memory is divided into equally sized memory modules (banks) that can be accessed simultaneously. Therefore, any memory load or store of $n$ addresses that spans $n$ distinct memory banks can be serviced simultaneously, yielding an effective bandwidth that is $n$ times as high as the bandwidth of a single bank.

However, if multiple addresses of a memory request map to the same memory bank, the accesses are serialized. The hardware splits a memory request that has bank conflicts into as many separate conflict-free requests as necessary, decreasing the effective bandwidth by a factor equal to the number of separate memory requests. The one exception here is when multiple threads in a warp address the same shared memory location, resulting in a broadcast. In this case, multiple broadcasts from different banks are coalesced into a single multicast from the requested shared memory locations to the threads.

Memory Bank Mapping

The memory bank properties were described above. However, how memory addresses map to memory banks is architecture-specific.

On devices of compute capability 5.x or newer, each bank has a bandwidth of 32 bits every clock cycle, and successive 32-bit words are assigned to successive banks. The warp size is 32 threads and the number of banks is also 32, so bank conflicts can occur between any threads in the warp.

To elaborate on this, let’s see how memory addresses map to memory banks using examples. The following program illustrates the mapping from 1D and 2D memory addresses to memory banks for devices of compute capability 5.x or newer.
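A minimal host-side sketch of such a mapping is shown below. It assumes 32 banks that are each 4 bytes wide, as described above, and row-major storage for the 2D case. The names `bank_id_1d`, `bank_id_2d`, and `print_bank_ids_2d` are hypothetical helpers chosen for illustration.

```cpp
#include <cstddef>
#include <cstdio>

// Assumed parameters for devices of compute capability 5.x or newer.
constexpr std::size_t kNumBanks = 32;       // number of shared memory banks
constexpr std::size_t kBankWidthBytes = 4;  // each bank is one 32-bit word wide

// Bank that holds the first 32-bit word of element i in a 1D array of T
// that starts at shared memory offset 0. Types wider than 4 bytes span
// several consecutive banks; only the first one is reported.
template <typename T>
constexpr std::size_t bank_id_1d(std::size_t i)
{
    return (i * sizeof(T) / kBankWidthBytes) % kNumBanks;
}

// Bank of element (row, col) in a row-major matrix with num_cols columns.
template <typename T>
constexpr std::size_t bank_id_2d(std::size_t row, std::size_t col,
                                 std::size_t num_cols)
{
    return bank_id_1d<T>(row * num_cols + col);
}

// Print the bank index of every element of a num_rows x num_cols matrix.
template <typename T>
void print_bank_ids_2d(std::size_t num_rows, std::size_t num_cols)
{
    for (std::size_t row = 0; row < num_rows; ++row)
    {
        for (std::size_t col = 0; col < num_cols; ++col)
        {
            std::printf("%2zu ", bank_id_2d<T>(row, col, num_cols));
        }
        std::printf("\n");
    }
}
```

Under these assumptions, `bank_id_2d<float>(1, 0, 32)` is 0 while `bank_id_2d<float>(1, 0, 33)` is 1: with 32 columns every row starts in bank 0, and with 33 columns each row is shifted by one bank relative to the previous row.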

$ g++ memory_bank.cpp -o memory_bank -std=c++14

Memory Bank Conflicts

Notice that for a 2D matrix stored in row-major order with a 32-bit data type, if the number of columns $N$ is a multiple of 32, all the elements in the same column of the matrix belong to the same memory bank. This is where memory bank conflicts can easily happen in an implementation. If the threads in a warp access different elements in the same column of the matrix, the accesses are serialized, resulting in up to a 32-way bank conflict. Using some other value for $N$, such as 33, prevents the elements in the same column from all belonging to the same memory bank. So be careful about the stride of shared memory accesses.
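The effect of the row width can be checked with a small host-side sketch. It assumes 32 banks, a warp of 32 threads, 32-bit elements, and row-major storage. The function `column_access_conflict_degree` is a hypothetical helper, not part of any CUDA API.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>

constexpr std::size_t kNumBanks = 32;  // assumed number of banks
constexpr std::size_t kWarpSize = 32;  // assumed warp size

// Thread t of a warp reads the 32-bit element (t, col) of a row-major
// matrix with num_cols columns. Returns the largest number of threads
// that land in the same bank. Every thread reads a different address,
// so the broadcast exception does not apply here.
std::size_t column_access_conflict_degree(std::size_t num_cols,
                                          std::size_t col)
{
    std::array<std::size_t, kNumBanks> hits{};  // zero-initialized counters
    for (std::size_t t = 0; t < kWarpSize; ++t)
    {
        const std::size_t word_index = t * num_cols + col;
        ++hits[word_index % kNumBanks];
    }
    return *std::max_element(hits.begin(), hits.end());
}
```

In this model, `column_access_conflict_degree(32, 0)` is 32 (all threads hit bank 0), while `column_access_conflict_degree(33, 0)` is 1 (each thread hits its own bank).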

In practice, the additional column is unused and can be filled with any value. Just make sure that the algorithm does not produce incorrect results by accidentally reading the values in the additional column.

$ g++ memory_bank.cpp -o memory_bank -std=c++14

Here is an example of memory conflicts due to inappropriate strides.
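As a rough host-side model of strided access, the sketch below counts how many serialized passes a warp-wide request of 32-bit words would need. It assumes 32 banks and a warp of 32 threads, and it treats the number of passes as the largest count of distinct word addresses that fall into one bank, so threads reading the same word share one broadcast. The helpers `num_passes` and `strided_access_passes` are hypothetical names, and real hardware behavior may differ in detail.

```cpp
#include <algorithm>
#include <cstddef>
#include <set>
#include <vector>

constexpr std::size_t kNumBanks = 32;  // assumed number of banks
constexpr std::size_t kWarpSize = 32;  // assumed warp size

// Simplified model: the request is split into as many passes as the
// largest number of distinct 32-bit word addresses mapped to one bank.
// Identical addresses count once because they are served by a broadcast.
std::size_t num_passes(const std::vector<std::size_t>& word_indices)
{
    std::vector<std::set<std::size_t>> per_bank(kNumBanks);
    for (const std::size_t w : word_indices)
    {
        per_bank[w % kNumBanks].insert(w);
    }
    std::size_t worst = 0;
    for (const auto& addresses : per_bank)
    {
        worst = std::max(worst, addresses.size());
    }
    return worst;
}

// Thread t of the warp reads the word at index t * stride.
std::size_t strided_access_passes(std::size_t stride)
{
    std::vector<std::size_t> word_indices(kWarpSize);
    for (std::size_t t = 0; t < kWarpSize; ++t)
    {
        word_indices[t] = t * stride;
    }
    return num_passes(word_indices);
}
```

In this model a stride of 1 or 33 needs a single pass, a stride of 2 needs 2 passes, and a stride of 32 needs 32 passes. A stride of 0 also needs a single pass, because every thread reads the same word.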
