Nsight Compute In Docker

Introduction

NVIDIA Nsight Compute is an interactive profiler for CUDA that provides detailed performance metrics and API debugging via a user interface and command-line tool. Users can run guided analysis and compare results with a customizable and data-driven user interface, as well as post-process and analyze results in their own workflows.

In this blog post, I would like to discuss how to install and use Nsight Compute in a Docker container so that we can use it, including its GUI, anywhere Docker is installed.

Nsight Compute

Build Docker Image

It is possible to install Nsight Compute inside a Docker image and use it anywhere. The Dockerfile for building the Nsight Compute Docker image is as follows.

```dockerfile
FROM nvcr.io/nvidia/cuda:13.1.0-devel-ubuntu22.04
```

To build the Docker image, please run the following command.

```bash
$ docker build -f nsight-compute.Dockerfile --no-cache --tag nsight-compute:13.1.0 .
```

Upload Docker Image

To upload the Docker image, please run the following command.

```bash
$ docker tag nsight-compute:13.1.0 leimao/nsight-compute:13.1.0
$ docker push leimao/nsight-compute:13.1.0
```

Pull Docker Image

To pull the Docker image, please run the following command.

```bash
$ docker pull leimao/nsight-compute:13.1.0
```

Run Docker Container

To run the Docker container, please run the following command.

```bash
$ xhost +
```

Run Nsight Compute

To run Nsight Compute with GUI, please run the following command.

```bash
$ ncu-ui
```

We can now profile applications inside the Docker container, applications on the local host machine via a Docker mount, and applications on a remote host such as a remote workstation or an embedded device.

Examples

Non-Coalesced Memory Access vs. Coalesced Memory Access

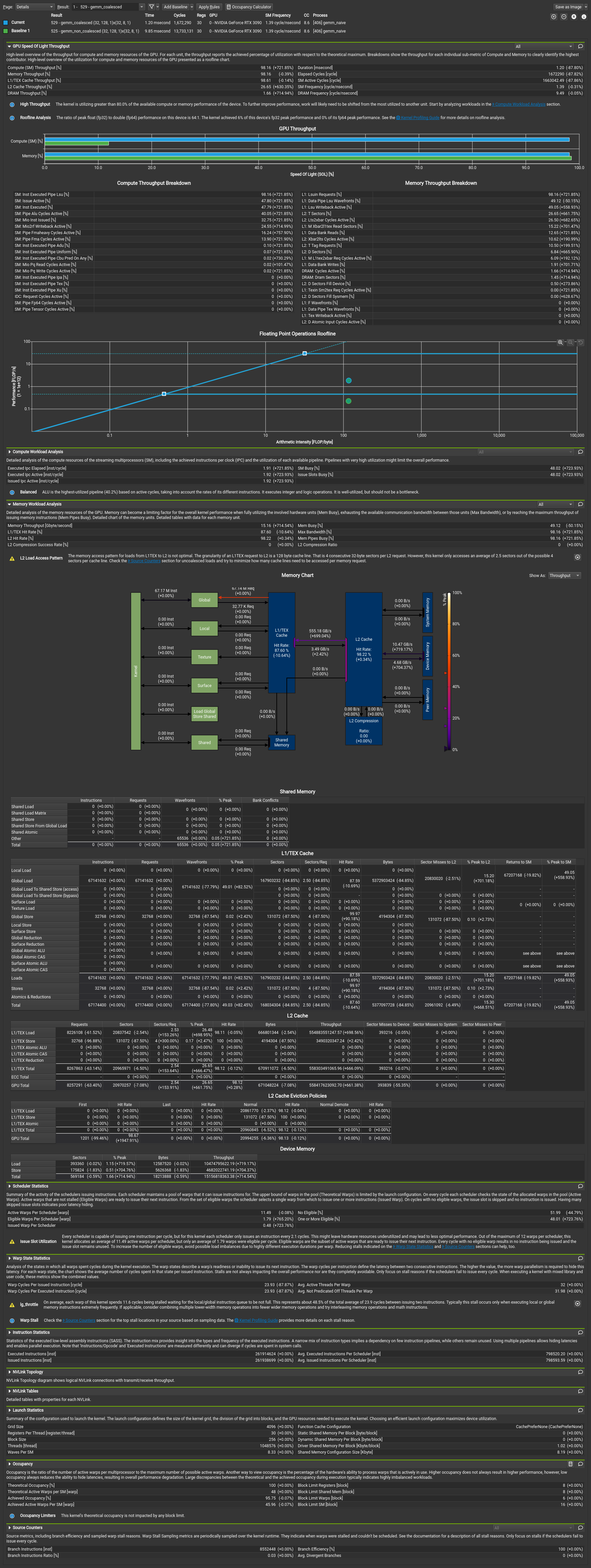

In this example, we implemented a naive GEMM kernel that performs matrix multiplication on the GPU. The kernel is naive because it does not use any advanced techniques such as shared memory tiling. Our original goal was to read and write global memory in a coalesced manner. However, in the first version of the kernel, gemm_non_coalesced, we introduced a bug that swapped the row and column indices of the output matrix, so the kernel reads and writes global memory in a non-coalesced manner. In the second version of the kernel, gemm_coalesced, we fixed the bug so that the kernel reads and writes global memory in a coalesced manner. We profiled the two kernels using Nsight Compute and compared their performance.
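
The following C++ sketch makes the swapped indices concrete. It models, on the host, which elements the 32 threads of one warp touch under each mapping. It is not the original gemm_naive.cu. The following are all assumptions made for illustration:

- A, B and C are row-major matrices of size M × K, K × N and M × N.
- The kernel uses a 2D thread block with 32 threads along x.
- The names warp_offsets and lane_stride are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical host-side model of the two index mappings (NOT the original
// gemm_naive.cu). Assumptions: A is M x K, B is K x N, C is M x N, all
// row-major; one warp is threadIdx.x = 0..31 with a fixed threadIdx.y;
// blockIdx is ignored (block 0).
constexpr std::size_t kWarpSize{32};

struct WarpOffsets
{
    // Element offsets touched by each lane at one step k_idx of the dot product.
    std::array<std::size_t, kWarpSize> a;  // A[row * K + k_idx]
    std::array<std::size_t, kWarpSize> b;  // B[k_idx * N + col]
    std::array<std::size_t, kWarpSize> c;  // C[row * N + col]
};

// row_from_x == true: threadIdx.x selects the row of C (buggy, non-coalesced).
// row_from_x == false: threadIdx.x selects the column of C (coalesced).
WarpOffsets warp_offsets(bool row_from_x, std::size_t thread_y,
                         std::size_t k_idx, std::size_t K, std::size_t N)
{
    WarpOffsets offsets{};
    for (std::size_t lane{0}; lane < kWarpSize; ++lane)
    {
        std::size_t const row{row_from_x ? lane : thread_y};
        std::size_t const col{row_from_x ? thread_y : lane};
        offsets.a[lane] = row * K + k_idx;
        offsets.b[lane] = k_idx * N + col;
        offsets.c[lane] = row * N + col;
    }
    return offsets;
}

// Distance in elements between the accesses of lane 1 and lane 0:
// 0 = every lane reads the same element, 1 = neighboring elements,
// large = strided access across rows.
std::size_t lane_stride(std::array<std::size_t, kWarpSize> const& offsets)
{
    assert(offsets[1] >= offsets[0]);
    return offsets[1] - offsets[0];
}
```

Here is an example with hypothetical sizes K = N = 1024:

- warp_offsets(true, 0, 0, 1024, 1024) gives lane strides of 1024, 0 and 1024 elements for A, B and C.
- warp_offsets(false, 0, 0, 1024, 1024) gives lane strides of 0, 1 and 1.

A stride of 1 means neighboring threads touch neighboring elements, which is the access pattern that coalescing relies on.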


To build the example with nvcc and profile it with Nsight Compute, please run the following commands.

```bash
$ nvcc gemm_naive.cu -o gemm_naive
```

To view the profiling results using Nsight Compute GUI, please run the following command.

```bash
$ ncu-ui
```

From the Nsight Compute profile details, we can see that the first version of the kernel, gemm_non_coalesced, took 9.85 ms, whereas the second version, gemm_coalesced, took 1.20 ms, roughly an 8.2× speedup. Although neither kernel is well optimized, Nsight Compute reported many issues with both of them. Specifically, gemm_non_coalesced is heavily memory-bound, and Nsight Compute tells us that "This kernel has non-coalesced global accesses resulting in a total of 941359104 excessive sectors (85% of the total 1109393408 sectors)".

The L1/TEX Cache statistics show where these excessive sectors come from. The size of a sector is 32 bytes, so one sector holds $32 / 4 = 8$ 4-byte elements.

For gemm_non_coalesced, a global load takes 16.51 sectors per request. To compute a product, each thread in a warp loads from a distinct sector of the buffer of matrix A, so a warp loads from $32 \times 1$ sectors of matrix A per request. All the threads in a warp load from the same sector of the buffer of matrix B, so a warp loads from $1$ sector of matrix B per request. On average, this is $\frac{32 \times 1 + 1}{2} = 16.5$ sectors per request. A global store takes 32 sectors per request ($\frac{32 \times 1}{1} = 32$).

For gemm_coalesced, which fixed the non-coalesced global memory access, a global load takes only 2.5 sectors per request. To compute a product, all the threads in a warp now load from the same sector of the buffer of matrix A, so a warp loads from $1$ sector of matrix A per request. Every $32 / 4 = 8$ consecutive threads in a warp load from the same sector of the buffer of matrix B, so a warp loads from $32 \times 4 / 32 = 4$ sectors of matrix B per request. On average, this is $\frac{1 + 32 \times 4 / 32}{2} = 2.5$ sectors per request. A global store takes 4 sectors per request ($\frac{32 \times 4 / 32}{1} = 4$).
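
The sectors-per-request figures can be reproduced with a little arithmetic. The C++ sketch below is a simplified model, not how Nsight Compute counts internally. It assumes that:

- One request is a warp of 32 lanes accessing 4-byte elements at a fixed element stride.
- Traffic is counted in 32-byte sectors.

Because of these assumptions, it will not match the profiler's counters exactly (16.5 modeled vs. 16.51 measured). The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <set>

// Simplified model (an assumption): one request is a warp of 32 lanes
// accessing 4-byte elements, and traffic is counted in 32-byte sectors.
constexpr std::uint64_t kWarpSize{32};
constexpr std::uint64_t kElementBytes{4};
constexpr std::uint64_t kSectorBytes{32};

// Count the distinct 32-byte sectors touched when lane i accesses the element
// at index base + i * stride (both measured in elements, not bytes).
std::uint64_t sectors_per_request(std::uint64_t base, std::uint64_t stride)
{
    std::set<std::uint64_t> sectors;
    for (std::uint64_t lane{0}; lane < kWarpSize; ++lane)
    {
        std::uint64_t const byte_address{(base + lane * stride) * kElementBytes};
        sectors.insert(byte_address / kSectorBytes);
    }
    return sectors.size();
}

// Average sectors per request over the two loads (one from A and one from B)
// issued for each product in the dot-product loop.
double average_sectors(std::uint64_t first_load, std::uint64_t second_load)
{
    return static_cast<double>(first_load + second_load) / 2.0;
}

// Fraction of all sectors that are excessive.
double excessive_fraction(std::uint64_t excessive, std::uint64_t total)
{
    assert(total > 0 && excessive <= total);
    return static_cast<double>(excessive) / static_cast<double>(total);
}
```

With a sector-aligned base of 0, the model reproduces the three access patterns in the text:

- sectors_per_request(0, 0) returns 1 (every lane reads the same element).
- sectors_per_request(0, 1) returns 4 (unit stride).
- sectors_per_request(0, 1024) returns 32 (large stride).

Averaging a 32-sector load with a 1-sector load gives 16.5, and averaging a 1-sector load with a 4-sector load gives 2.5. excessive_fraction(941359104, 1109393408) is about 0.849, the 85% reported by Nsight Compute.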

GitHub

All the Dockerfiles and examples are available on GitHub.
