DriveGPT

Introduction

At Haomo’s 8th AI Day, held in China in 2023, Haomo announced DriveGPT, an autoregressive Transformer model based on OpenAI GPT for autonomous vehicle planning and reasoning using Drive language.

In this blog post, I would like to quickly discuss what Haomo’s DriveGPT and Drive language are.

Autonomous Vehicle Planning

The core software components of an autonomous vehicle are perception, planning, and control. Planning is the process of generating, from a scene or a sequence of scenes, an action plan that the autonomous vehicle follows to drive safely and as desired.

The scenes used for planning are obtained from the perception software component. The planned action will be executed by the control software component.

Planning can be further divided into three categories: mission planning, behavior planning, and local planning. Mission planning is the highest-level planning for the journey. It decides which route to take to reach the destination. For example, Google Maps, which we commonly use for navigation, belongs to this category. Behavior planning is the mid-level planning that decides which high-level actions to take given real-time dynamic scenes, such as whether the vehicle should switch lanes, accelerate, decelerate, turn, or stop. Local planning is the low-level planning that carries out the behavior plan smoothly and safely. The boundary between behavior planning and local planning is sometimes ambiguous.

DriveGPT focuses on behavior planning and local planning.

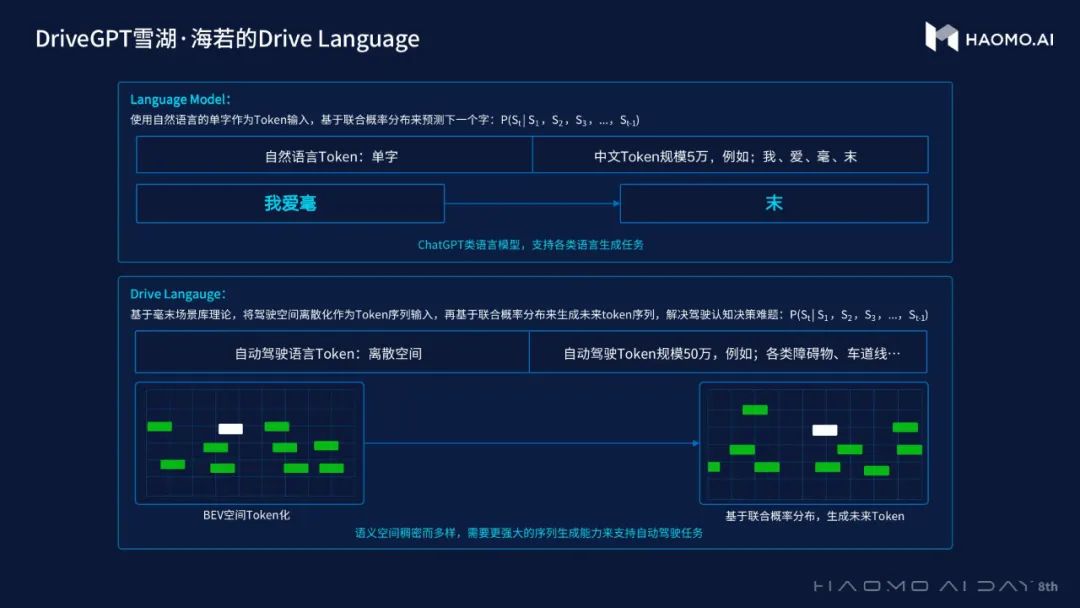

Drive Language

Drive language is a language for describing driving instances. A driving instance can be described using Drive language, i.e., one or more Drive sentences, and each Drive sentence consists of Drive tokens.

Drive Language Tokens

Perception signals, such as object coordinates, object sizes, lane coordinates, and ego car trajectory coordinates, are real values, and real values can be quantized into a finite set of tokens. The number of tokens we have to create depends on how we quantize the perception signals: a higher tokenization resolution requires more tokens. For example, with $N$ quantization bins per axis, using one token to describe the 2D coordinates of an object requires $N^2$ tokens, one per grid cell of the 2D BEV space, which is a lot. However, if we use two tokens to describe the $x$ and $y$ coordinates of the object separately, only $2N$ tokens are required, which is significantly fewer.
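To make the vocabulary-size trade-off concrete, here is a minimal sketch of uniform quantization. The 100 m x 100 m BEV range, the 0.5 m resolution, and the function names are hypothetical assumptions for illustration, not Haomo’s actual tokenization scheme.

```python
# Minimal sketch of uniform quantization for Drive tokens.
# Hypothetical assumptions: a 100 m x 100 m BEV area, 0.5 m resolution.
BEV_MIN = -50.0   # meters (hypothetical)
BEV_MAX = 50.0    # meters (hypothetical)
RESOLUTION = 0.5  # meters per bin (hypothetical)
NUM_BINS = int((BEV_MAX - BEV_MIN) / RESOLUTION)  # 200 bins per axis


def quantize(value: float) -> int:
    """Map a real coordinate to a bin index in [0, NUM_BINS - 1]."""
    clipped = min(max(value, BEV_MIN), BEV_MAX)
    index = int((clipped - BEV_MIN) / RESOLUTION)
    return min(index, NUM_BINS - 1)  # BEV_MAX itself falls into the last bin


def joint_token(x: float, y: float) -> int:
    """One token per (x, y) grid cell: the vocabulary needs NUM_BINS ** 2 ids."""
    return quantize(x) * NUM_BINS + quantize(y)


def separate_tokens(x: float, y: float) -> tuple[int, int]:
    """One token for x and one for y: the vocabulary needs 2 * NUM_BINS ids.
    x tokens use ids [0, NUM_BINS), y tokens use [NUM_BINS, 2 * NUM_BINS)."""
    return quantize(x), NUM_BINS + quantize(y)


print(NUM_BINS ** 2)  # joint vocabulary size: 40000
print(2 * NUM_BINS)   # separate vocabulary size: 400
print(joint_token(1.2, -3.7), separate_tokens(1.2, -3.7))
```

The price of the smaller vocabulary is a longer sentence: each object position now costs two tokens instead of one.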

Once the perception signals in a scene are tokenized, they can be used to describe the scene. For example, in a naive setting, if a scene contains three cars whose locations and sizes are $x_1$, $x_2$, and $x_3$, four lanes whose locations and orientations are $y_1$, $y_2$, $y_3$, and $y_4$, and the ego car at location $z_1$, the Drive sentence might simply be $\text{Token}_{x_1}$, $\text{Token}_{x_2}$, $\text{Token}_{x_3}$, $\text{Token}_{y_1}$, $\text{Token}_{y_2}$, $\text{Token}_{y_3}$, $\text{Token}_{y_4}$, $\text{Token}_{z_1}$. A sequence of Drive sentences can then describe a sequence of scenes and ego car behaviors.
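The naive sentence above can be sketched in code. In this hypothetical sketch each $x_i$, $y_i$, and $z_1$ expands into several tokens (one per attribute), following the idea of using separate tokens for separate coordinates; the attribute list, value ranges, and vocabulary layout are illustrative assumptions, not Haomo’s design.

```python
import math
from dataclasses import dataclass

NUM_BINS = 200  # bins per attribute (hypothetical)


def quantize(value: float, low: float, high: float) -> int:
    """Uniformly quantize a value in [low, high] into one of NUM_BINS bins."""
    clipped = min(max(value, low), high)
    return min(int((clipped - low) / (high - low) * NUM_BINS), NUM_BINS - 1)


# Each attribute owns its own block of NUM_BINS ids in one shared vocabulary,
# so "car x = 10 m" and "ego x = 10 m" become different tokens.
# name: (id offset, low, high); all ranges are hypothetical.
ATTRIBUTES = {
    "car_x": (0 * NUM_BINS, -50.0, 50.0),
    "car_y": (1 * NUM_BINS, -50.0, 50.0),
    "car_length": (2 * NUM_BINS, 0.0, 20.0),
    "lane_offset": (3 * NUM_BINS, -50.0, 50.0),
    "lane_heading": (4 * NUM_BINS, -math.pi, math.pi),
    "ego_x": (5 * NUM_BINS, -50.0, 50.0),
    "ego_y": (6 * NUM_BINS, -50.0, 50.0),
}


def token(attribute: str, value: float) -> int:
    """Token id of a quantized attribute value."""
    offset, low, high = ATTRIBUTES[attribute]
    return offset + quantize(value, low, high)


@dataclass
class Car:
    x: float
    y: float
    length: float


@dataclass
class Lane:
    offset: float   # lateral offset, meters
    heading: float  # radians


def encode_scene(cars, lanes, ego_xy):
    """Concatenate the tokens of all cars, then all lanes, then the ego car."""
    sentence = []
    for car in cars:  # plays the role of Token_{x_1}, Token_{x_2}, ...
        sentence += [token("car_x", car.x), token("car_y", car.y),
                     token("car_length", car.length)]
    for lane in lanes:  # Token_{y_1}, Token_{y_2}, ...
        sentence += [token("lane_offset", lane.offset),
                     token("lane_heading", lane.heading)]
    sentence += [token("ego_x", ego_xy[0]), token("ego_y", ego_xy[1])]  # Token_{z_1}
    return sentence


cars = [Car(1.0, 12.0, 4.5), Car(-3.5, -8.0, 4.8), Car(3.5, 25.0, 12.0)]
lanes = [Lane(-5.25, 0.0), Lane(-1.75, 0.0), Lane(1.75, 0.0), Lane(5.25, 0.0)]
sentence = encode_scene(cars, lanes, (0.0, 0.0))
print(len(sentence))  # 3 * 3 + 4 * 2 + 2 = 19 tokens
```

Encoding one such sentence per time step and concatenating them gives the sequence of scenes described above.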

A sequence of scenes and ego car behaviors may involve a human intention or a sequence of human intentions, such as changing lanes and passing the neighboring car. These human intentions can also be described using a token or a sequence of tokens. Depending on the token engineering, the human intention tokens can be tokens from human natural language or specialized tokens. In this way, a sequence of scene descriptions in Drive language can also be accompanied by human intentions in Drive language.
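One way to realize the “specialized tokens” option is to reserve extra ids after the perception tokens. The sketch below assumes a hypothetical perception vocabulary of 1400 ids, a hypothetical `<scene>` separator, and a handful of made-up intention tokens; it only shows how intention tokens and scene sentences could share one token stream.

```python
PERCEPTION_VOCAB_SIZE = 1400  # e.g. 7 attributes x 200 bins (hypothetical)

# Specialized tokens: one scene separator plus a few intention tokens (hypothetical).
SPECIAL_TOKENS = ["<scene>", "<keep_lane>", "<change_lane_left>",
                  "<change_lane_right>", "<overtake>", "<stop>"]
# Special token ids start right after the perception token ids.
TOKEN_IDS = {name: PERCEPTION_VOCAB_SIZE + i for i, name in enumerate(SPECIAL_TOKENS)}
VOCAB_SIZE = PERCEPTION_VOCAB_SIZE + len(SPECIAL_TOKENS)


def with_intentions(intentions, scene_sentences):
    """Intention tokens first, then each scene sentence prefixed by <scene>."""
    tokens = [TOKEN_IDS[name] for name in intentions]
    for sentence in scene_sentences:
        tokens.append(TOKEN_IDS["<scene>"])
        tokens.extend(sentence)
    return tokens


print(with_intentions(["<change_lane_left>", "<overtake>"], [[100, 300], [101, 301]]))
# [1402, 1404, 1400, 100, 300, 1400, 101, 301]
```

If natural-language tokens were used instead, the intention ids would come from a text tokenizer rather than from this hand-made list.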

Drive Language Model

Autonomous driving manufacturers have lots of human driving data, i.e., sequences of scenes, and the scenes are usually well annotated with perception signal labels, ego car behaviors, and human intentions. After tokenizing these annotations, the Drive language, which is backed by human driving logic, can be learned using a Drive language model.

A Drive language model, like GPT-style natural language models, is a generative model. It can generate a future sequence of tokenized scenes and ego car behaviors based on the previous sequence of tokenized scenes and ego car behaviors.

In addition, the human intention annotations mentioned previously naturally serve as prompts for the language model. After a Drive language model is trained on a sufficient amount of tokenized scenes, ego car behaviors, and human intentions, given a human intention prompt, it can follow the human intention and generate future scenes and ego car behaviors similar to those it has seen in the training data. This is analogous to OpenAI InstructGPT and ChatGPT, which follow human instructions in the prompt and generate the desired content.
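The generation loop itself can be illustrated with a toy model. DriveGPT is a Transformer; here, purely as a stand-in, a count-based n-gram model over hypothetical string tokens shows autoregressive, prompt-conditioned generation: the same observed scene produces different ego behaviors depending on the intention token in the prompt.

```python
from collections import Counter, defaultdict


def train_ngram(sequences, context_size):
    """Count which token follows each window of `context_size` tokens."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for i in range(context_size, len(seq)):
            counts[tuple(seq[i - context_size:i])][seq[i]] += 1
    return counts


def generate(counts, prompt, context_size, max_new_tokens):
    """Greedy autoregressive decoding: append the most frequent next token
    given the latest context window, one token at a time."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        followers = counts.get(tuple(tokens[-context_size:]))
        if not followers:
            break  # context never seen in training
        tokens.append(followers.most_common(1)[0][0])
    return tokens


# Hypothetical tokenized training sequences: intention, scene, behavior, ...
training_data = [
    ["<change_lane_left>", "scene_0", "ego_left", "scene_1", "ego_left"],
    ["<keep_lane>", "scene_0", "ego_straight", "scene_1", "ego_straight"],
]
model = train_ngram(training_data, context_size=2)

# Same observed scene, different intention prompts.
print(generate(model, ["<change_lane_left>", "scene_0"], 2, max_new_tokens=3))
# ['<change_lane_left>', 'scene_0', 'ego_left', 'scene_1', 'ego_left']
print(generate(model, ["<keep_lane>", "scene_0"], 2, max_new_tokens=3))
# ['<keep_lane>', 'scene_0', 'ego_straight', 'scene_1', 'ego_straight']
```

A Transformer replaces the count table with a learned distribution over the next token conditioned on the whole prefix, and usually samples from it instead of always taking the most likely token.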

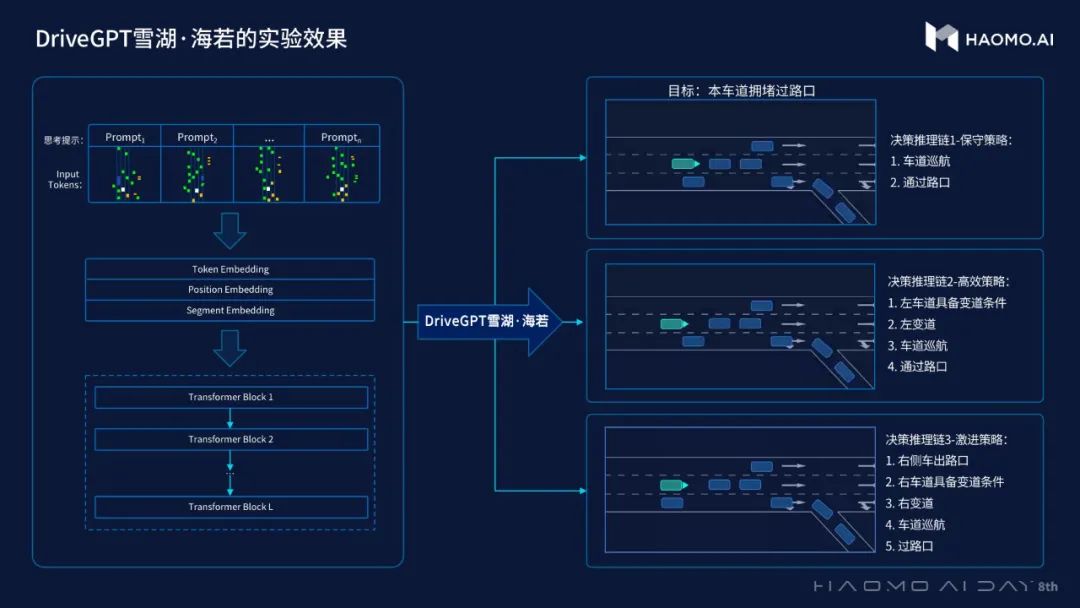

DriveGPT

In terms of the choice of Drive language model, Haomo’s CEO mentioned that they previously used the Transformer encoder-decoder architecture but have now completely switched to the Transformer decoder-only architecture used by the famous OpenAI GPT models. They branded their decoder-only Drive language model as DriveGPT.
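What makes a decoder-only model autoregressive is the causal attention mask: scene, intention, and behavior tokens form one stream, and each position may only attend to itself and earlier positions. The sketch below is the standard GPT-style masked softmax in plain Python, with hypothetical precomputed attention scores; Haomo’s actual implementation is not public.

```python
import math


def causal_attention_weights(scores):
    """Row-wise softmax over attention scores with a causal mask.

    scores[i][j] is the (precomputed) attention score of query position i
    on key position j. Positions j > i are masked out, so every token only
    sees the tokens before it, as in GPT-style decoder-only models.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        visible = scores[i][: i + 1]       # keys 0..i only
        m = max(visible)                   # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        row = [e / total for e in exps] + [0.0] * (n - i - 1)  # future positions get 0
        weights.append(row)
    return weights


# With equal scores, position i spreads its attention evenly over positions 0..i.
print(causal_attention_weights([[0.0] * 3 for _ in range(3)]))
# rows: [1, 0, 0], [0.5, 0.5, 0], [1/3, 1/3, 1/3]
```

An encoder-decoder model, by contrast, lets the encoder attend bidirectionally over the input scenes and applies this mask only inside the decoder.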

In the figure above, Haomo pre-trains DriveGPT on previous tokenized scenes, ego car behaviors, and human intentions to predict future tokenized ego car behaviors. In practice, one can also pre-train DriveGPT to predict not only future tokenized ego car behaviors but also future tokenized scenes. In this way, DriveGPT can generate an unlimited number of driving instances, which Haomo refers to as Drive parallel universes.
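The difference between the two pre-training setups can be expressed as a loss mask over next-token targets. In this sketch the token types and model probabilities are hypothetical, a start token is assumed to precede position 0, and intention tokens are assumed to act only as prompts, so they never contribute to the loss.

```python
import math


def masked_next_token_loss(probs_of_targets, is_target):
    """Average negative log-likelihood over positions selected by is_target.

    probs_of_targets[t]: probability the model assigned to the true token at
        position t given the tokens before it (hypothetical values here).
    is_target[t]: True if position t contributes to the loss.
    """
    losses = [-math.log(p) for p, use in zip(probs_of_targets, is_target) if use]
    return sum(losses) / len(losses)


token_types = ["scene", "intention", "scene", "behavior", "scene", "behavior"]
probs = [0.5, 0.9, 0.25, 0.8, 0.125, 0.6]

# Only behavior tokens are targets (the setup in Haomo's figure).
behavior_only = [t == "behavior" for t in token_types]
# Scenes are targets too, so the model also learns to generate future scenes.
scenes_and_behaviors = [t in ("scene", "behavior") for t in token_types]

print(masked_next_token_loss(probs, behavior_only))
print(masked_next_token_loss(probs, scenes_and_behaviors))
```

Only the mask changes between the two setups; the model and its inputs stay the same.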

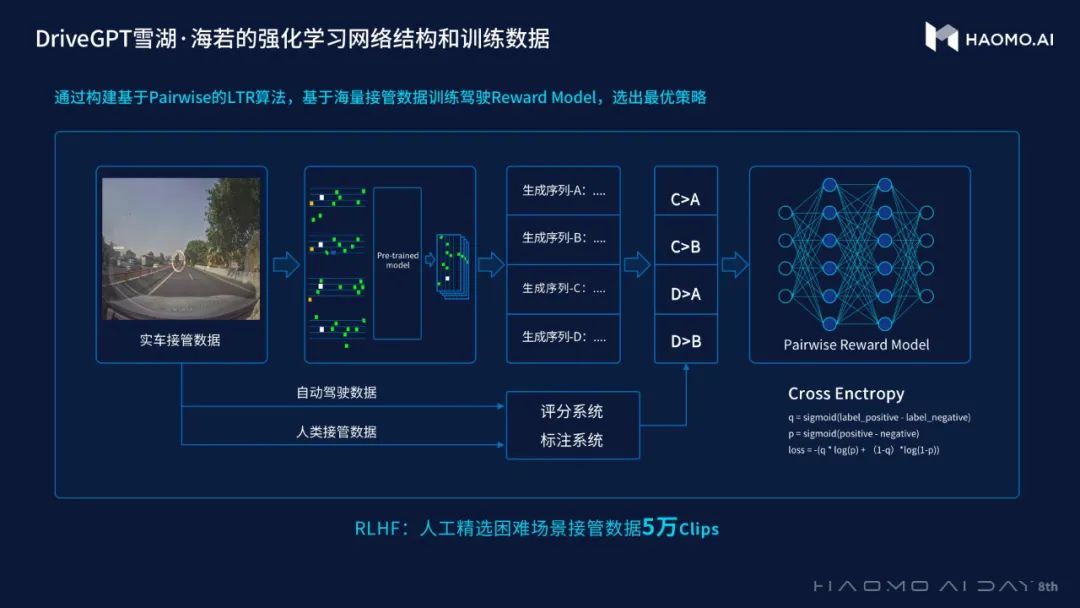

Human Feedback In the Loop

Similar to OpenAI InstructGPT and ChatGPT, DriveGPT training can also have human feedback in the loop. A reward model is trained on human rankings of the quality and safety of future tokenized scenes and ego car behaviors, and the pre-trained DriveGPT is then fine-tuned using reinforcement learning with the reward model.
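Haomo has not published its reward model objective. As an assumption, here is the pairwise ranking loss used for the InstructGPT reward model: for $K$ ranked candidates, every (better, worse) pair contributes $-\log \sigma(r_\text{better} - r_\text{worse})$, averaged over the $\binom{K}{2}$ pairs. The reward values below are hypothetical.

```python
import math
from itertools import combinations


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def ranking_loss(rewards_in_rank_order):
    """InstructGPT-style pairwise ranking loss.

    rewards_in_rank_order[k] is the reward model's scalar score for the
    candidate that humans ranked k-th (0 = best). Each pair (i, j) with
    i < j means candidate i was ranked better than candidate j.
    """
    pairs = list(combinations(range(len(rewards_in_rank_order)), 2))
    total = sum(-log_sigmoid(rewards_in_rank_order[i] - rewards_in_rank_order[j])
                for i, j in pairs)
    return total / len(pairs)


# Rewards that agree with the human ranking give a lower loss.
print(ranking_loss([2.0, 1.0, -1.0]))
print(ranking_loss([-1.0, 1.0, 2.0]))
```

Minimizing this loss pushes the reward model to score human-preferred continuations higher; the resulting scalar reward is what the reinforcement learning step optimizes.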

The reward model was trained not only on ordinary human driving or autonomous driving data, but also on autonomous driving data involving human takeovers, which usually come from very difficult-to-drive scenes.

Basically, when the autonomous car is driving, if a human chooses to take over in some scenes, that indicates the autonomous driving model is not performing well in those scenes. Using the tokenized scenes and ego car behaviors before the human takeover, DriveGPT can generate many future tokenized scenes and ego car behaviors. The real tokenized scenes and ego car behaviors after the human takeover are also a very important data point for comparison with the data generated from the same previous scenes and ego car behaviors. For DriveGPT reward model training, the real data is usually ranked highest compared to the DriveGPT-generated data.
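A comparison group for one takeover event might be assembled as follows. This is a hypothetical sketch: the annotator scores are made up, and it simplifies “usually ranked the highest” to “always ranked first”.

```python
from itertools import combinations


def build_preference_pairs(human_continuation, generated, annotator_scores):
    """Return (better, worse) pairs for reward model training.

    human_continuation: real tokenized scenes/behaviors after the takeover.
    generated: DriveGPT continuations sampled from the same history.
    annotator_scores: hypothetical human quality/safety scores for
        `generated` (higher is better).
    Simplifying assumption: the real human continuation is always ranked first.
    """
    ranked_generated = [c for _, c in sorted(zip(annotator_scores, generated),
                                              key=lambda pair: pair[0], reverse=True)]
    ranking = [human_continuation] + ranked_generated
    return [(ranking[i], ranking[j]) for i, j in combinations(range(len(ranking)), 2)]


human = ["ego_brake", "ego_left"]
generated = [["ego_straight"], ["ego_brake"], ["ego_left"]]
pairs = build_preference_pairs(human, generated, annotator_scores=[0.2, 0.9, 0.5])
for better, worse in pairs:
    print(better, ">", worse)
```

Each pair can then be scored by the reward model and fed to a pairwise ranking loss.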

Chain of Thoughts

The reasoning behind planning decisions is critical for safety. Neural networks are usually black boxes. For non-safety applications, we usually don’t care whether they are black boxes or not. However, for safety applications that use neural networks for planning and decision making, it is necessary to know “what neural networks are actually thinking”.

Given previous tokenized scenes, ego car behaviors, and a human intention prompt, DriveGPT can generate future tokenized scenes and ego car behaviors. If tokenized chains of thought are annotated together with the human intention prompt for a sequence of scenes, then, given a sequence of tokenized scenes and ego car behaviors, the model can also learn to generate the tokenized chain of thought, making DriveGPT planning “no longer a black box”. However, I think one might still argue that it is still a black box, because the chain-of-thought generation process still happens inside a neural network. It is just that the content generated by the neural network appears to be human interpretable.
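A training sequence with chain-of-thought annotations could be laid out as below. The `<cot>` and `<act>` markers and the token names are hypothetical, and placing the reasoning before the behaviors is an assumed design choice: it lets the behavior tokens be conditioned on the generated reasoning, which can then be split off and read by a human.

```python
# Hypothetical special tokens; the real DriveGPT vocabulary is not public.
COT_START, ACT_START = "<cot>", "<act>"


def build_training_sequence(scene_tokens, intention_tokens, cot_tokens, behavior_tokens):
    """Layout: observed scenes, intention prompt, reasoning, then behaviors."""
    return (list(scene_tokens) + list(intention_tokens)
            + [COT_START] + list(cot_tokens)
            + [ACT_START] + list(behavior_tokens))


def split_generation(tokens):
    """Split a sequence into its reasoning part and its behavior part."""
    cot_begin = tokens.index(COT_START) + 1
    act_begin = tokens.index(ACT_START)
    return tokens[cot_begin:act_begin], tokens[act_begin + 1:]


seq = build_training_sequence(["scene_0", "scene_1"], ["<overtake>"],
                              ["slow_car_ahead", "left_lane_clear"], ["ego_left"])
reasoning, behaviors = split_generation(seq)
print(reasoning)  # ['slow_car_ahead', 'left_lane_clear']
print(behaviors)  # ['ego_left']
```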

Critical Review

Whether Haomo’s DriveGPT can really perform well remains an open question. Even if DriveGPT performs well offline in a GPU data center, I think running it in real time on a car SoC might be too challenging, given how costly inference is for generative GPT models.

FAQ

Can autonomous driving be learned end-to-end from human drivers?

Theoretically, yes. We could construct a model and train it end-to-end (from sensors to actions) on human driving sensor data and driver behavior data, even without perception annotations. However, with current technology, the performance of such end-to-end learning has not been sufficient for production, because autonomous driving has extremely high safety standards and learning multiple components well simultaneously end-to-end has been difficult with existing learning algorithms. That’s why most autonomous driving manufacturers separate the autonomous driving solution into perception, planning, and control, try to build each of them as well as possible, and then assemble them together.

This is also the case for Haomo: DriveGPT requires high-quality perception signal inputs.

Can a single Drive language token describe a whole scene?

Not likely, since a scene is too complex to describe with one token. Even if we quantize the drive space into only 20 grid cells, each using a binary value to indicate whether an obstacle is present, the number of possible tokens for a scene is $2^{20} = 1048576$, about one million, which is too large a vocabulary for current language models to learn successfully. This has not even taken other scene factors, such as obstacle type and size and lane type, into account.
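The arithmetic can be checked directly. As a hypothetical extension, letting each cell also record an obstacle type (empty, car, or pedestrian) makes a one-token-per-scene vocabulary explode, while spending one token per (cell, state) pair keeps the vocabulary tiny at the cost of a 20-token sentence per scene.

```python
NUM_CELLS = 20

# One token per whole scene, binary occupancy per cell (the example above).
binary_scene_tokens = 2 ** NUM_CELLS
# Hypothetical: each cell is empty, occupied by a car, or by a pedestrian.
typed_scene_tokens = 3 ** NUM_CELLS
# Alternative: one token per (cell, state) pair, 20 tokens per scene.
per_cell_tokens = NUM_CELLS * 3

print(binary_scene_tokens)  # 1048576
print(typed_scene_tokens)   # 3486784401
print(per_cell_tokens)      # 60
```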