Setting Up Environment Variables In SSH Sessions Over TCP On Runpod

Introduction

Runpod has a bug that prevents the environment variables configured in a template from being set in a custom Docker container when connecting to it via full SSH over TCP, which is the connection method needed for setting up remote development environments with IDEs. The bug does not occur when connecting to the same Docker container via basic SSH.

In this blog post, I would like to quickly discuss how to work around this bug in SSH sessions over TCP on Runpod.

Setting Up Environment Variables In SSH Sessions Over TCP On Runpod

Configure Environment Variables In Templates

There are a few ways to configure environment variables in templates on Runpod, which have been described in detail in the Runpod documentation “Environment variables”.

Environment Variables Are Visible In Basic SSH Sessions

When connecting to the custom Docker container via basic SSH, the environment variables configured in the template are visible in the SSH session.

For example, I configured an environment variable GIT_REPO_ACCESS_TOKEN in a template on Runpod. After a pod instance was created from this template, I connected to it via basic SSH and ran the command env to list all environment variables. The configured environment variable GIT_REPO_ACCESS_TOKEN appeared in the output.

$ ssh 4hlesiqw1k88e5-644111dc@ssh.runpod.io -i ~/.ssh/id_ed25519

Environment Variables Are Not Visible In Full SSH Sessions Via TCP

However, when I connected to the same pod instance via full SSH over TCP, the environment variable GIT_REPO_ACCESS_TOKEN configured in the template was not visible in the SSH session. In addition, other environment variables from the original Docker container, such as PATH, were also missing.

$ ssh root@69.30.85.179 -p 22174 -i ~/.ssh/id_ed25519
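To compare the two kinds of sessions without printing secret values such as an access token to the terminal, a small check can report only whether each variable is present in the environment. This is a minimal sketch: the function name check_env_vars is hypothetical, and it relies on printenv exiting with a nonzero status when a variable is not found.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether each named environment variable is set,
# without printing its value (useful for secrets such as access tokens).
check_env_vars() {
  local name
  for name in "$@"; do
    # printenv exits with status 0 only if the variable exists in the environment.
    if printenv "$name" > /dev/null; then
      echo "${name}: set"
    else
      echo "${name}: missing"
    fi
  done
}

# Example: the variables discussed in this post.
check_env_vars GIT_REPO_ACCESS_TOKEN PUBLIC_KEY JUPYTER_PASSWORD PATH
```

Running the same check in a basic SSH session and in a full SSH session over TCP makes the difference between the two visible at a glance.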

Inspiration for a Workaround

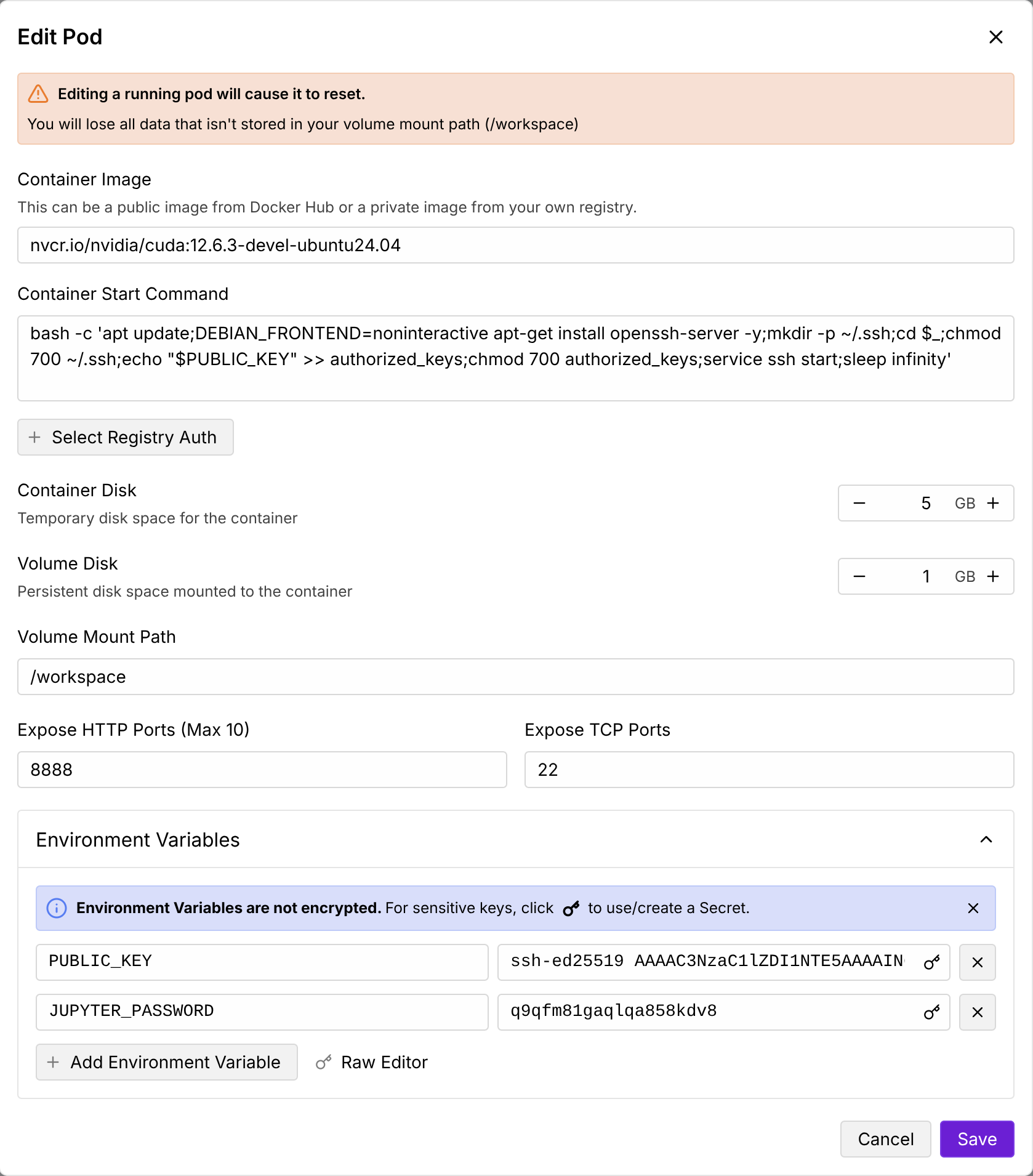

I happened to notice that Runpod adds a few environment variables, including PUBLIC_KEY and JUPYTER_PASSWORD, to the template for the pod instance, even if the user does not explicitly configure them in the template. Note that these two environment variables are also visible only in the basic SSH session, not in the full SSH session via TCP.

In addition, the “Container Start Command” used for starting the SSH daemon in the pod instance uses the environment variable PUBLIC_KEY.

# Instantiating SSH daemon.

This suggested that the environment variables configured in the template must be visible when the pod instance is first started; otherwise, the start command could not read PUBLIC_KEY. If we save the environment variables to files when the pod instance starts, we can later read them from these files in the full SSH session via TCP.

Save Environment Variables to Files When Pod Instance Is Instantiated

Instead of using the recommended “Container Start Command” for starting the SSH daemon in the pod instance, I modified it to first save the environment variables to a file and then start the SSH daemon. The modified “Container Start Command” is as follows.

# Save environment variables to files.

Concretely, the one-line version of the modified “Container Start Command” to use in templates on Runpod is as follows.

bash -c 'mkdir -p ~/.env_vars;env > ~/.env_vars/env_vars.txt;apt update;DEBIAN_FRONTEND=noninteractive apt-get install openssh-server -y;mkdir -p ~/.ssh;cd $_;chmod 700 ~/.ssh;echo "$PUBLIC_KEY" >> authorized_keys;chmod 700 authorized_keys;service ssh start;sleep infinity'
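If you build your own Docker image, the environment-saving step of the command above could also live in a small script inside the image. The sketch below is a hypothetical helper, save_env_vars, that writes the same ~/.env_vars/env_vars.txt file by default. Unlike the one-line command, it also restricts the directory and file permissions. This is an assumption of mine, not part of the original command, motivated by the file possibly containing secrets such as GIT_REPO_ACCESS_TOKEN.

```shell
#!/usr/bin/env bash
# Hypothetical helper: save the current environment to a file so that later
# sessions (e.g. full SSH over TCP) can restore it.
# Defaults to ~/.env_vars/env_vars.txt, the path used in the start command.
save_env_vars() {
  local dir="${1:-$HOME/.env_vars}"
  mkdir -p "$dir"
  # Not in the original command: keep the directory private,
  # since the saved file may contain secrets such as access tokens.
  chmod 700 "$dir"
  # umask 077 in a subshell makes a newly created file owner-only from the start.
  (umask 077 && env > "$dir/env_vars.txt")
  # Also tighten permissions in case the file already existed.
  chmod 600 "$dir/env_vars.txt"
}
```

In a start command, calling save_env_vars would take the place of `mkdir -p ~/.env_vars;env > ~/.env_vars/env_vars.txt`, before the SSH daemon steps.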

Set Up Environment Variables In Full SSH Sessions Via TCP

Because the environment variables were saved to a file when the pod instance started, after connecting to the pod instance via full SSH over TCP, we can read them from this file and export them to the current SSH session using the following command.

while IFS= read -r line; do
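A minimal sketch of such a loop is shown below, wrapped in a hypothetical function load_env_vars. It assumes the file is the ~/.env_vars/env_vars.txt written by the start command and that each line has the KEY=VALUE form printed by env. The filtering of malformed lines and the list of skipped session-specific variables are my own assumptions, not part of the original command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: export the KEY=VALUE lines saved by `env` into the
# current shell. Run it in the current shell (e.g. after `source`-ing a file
# that defines it), because a child process cannot change its parent's environment.
load_env_vars() {
  local file="${1:-$HOME/.env_vars/env_vars.txt}"
  local line name
  [[ -r "$file" ]] || return 1
  while IFS= read -r line || [[ -n "$line" ]]; do
    # Skip lines without '=' (e.g. continuation lines of multi-line values).
    [[ "$line" == *=* ]] || continue
    # The name is everything before the first '='.
    name="${line%%=*}"
    # Skip names that are not valid shell identifiers.
    [[ "$name" =~ ^[A-Za-z_][A-Za-z0-9_]*$ ]] || continue
    # Assumption: keep the SSH session's own values for these variables.
    case "$name" in
      PWD|OLDPWD|SHLVL|_|TERM) continue ;;
    esac
    # Quoting passes "KEY=VALUE" as one word, so spaces and '$' stay literal.
    export "$line"
  done < "$file"
}
```

Because env prints one variable per line, a value containing a newline is split across several lines and cannot be restored correctly by line-based parsing. GNU env's -0 option, which ends each entry with a NUL byte, paired with bash's `read -r -d ''`, is one way to handle such values.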

References

Setting Up Environment Variables In SSH Sessions Over TCP On Runpod

https://leimao.github.io/blog/Setting-Up-Environment-Variables-SSH-Over-TCP-Runpod/