Tensor Physical Layouts on Memory

Introduction

When it comes to writing tensor operations in low-level programming languages, it is inevitable that you need to understand how tensors are laid out in memory. I have to admit that I had previously worked with high-level APIs so much that I had never tried to understand how those tensors work at the memory level.

Most commonly, we see the tensor format options NCHW and NHWC when implementing convolutional layers in TensorFlow. By default, TensorFlow uses the NHWC format, and most image loading APIs, such as OpenCV, use the HWC format. Because this makes TensorFlow naturally compatible with most image loading APIs, I had been using the NHWC format brainlessly for a long while. I believe I had also read somewhere that NCHW is more efficient than NHWC when cuDNN is used. However, I did not focus on optimizing performance when I was a student, so I never gave a shit about NCHW Orz.

Recently I became interested in how tensors are laid out in memory. But when I started to think about the difference between the NHWC and NCHW formats, I sometimes got confused. So I checked some references and documented the key points below.

Tensor Layouts on Memory

One fact, which I did not realize previously, is that the NCHW format is the natural way we think about an image or a batch of images. When you have an RGB image, you would usually like to separate the image into its three channels first. That is exactly how the NCHW format works.
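To make "separate the image into three channels first" concrete, here is a minimal pure-Python sketch, assuming a single image stored as a flat buffer the way an HWC image loader would hand it over. The function name `hwc_to_chw` is hypothetical and only for illustration. It gathers every R value first, then every G value, then every B value, which is the per-image part of NCHW.

```python
def hwc_to_chw(buf, height, width, channels):
    """Rearrange a flat HWC buffer into a flat CHW buffer.

    In HWC, element (h, w, c) sits at index (h * width + w) * channels + c.
    In CHW, element (c, h, w) sits at index (c * height + h) * width + w.
    """
    assert len(buf) == height * width * channels
    out = [None] * len(buf)
    for h in range(height):
        for w in range(width):
            for c in range(channels):
                out[(c * height + h) * width + w] = buf[(h * width + w) * channels + c]
    return out


# A 2x2 RGB image in HWC order: the channels of each pixel are interleaved.
hwc = ["R0", "G0", "B0", "R1", "G1", "B1",
       "R2", "G2", "B2", "R3", "G3", "B3"]
chw = hwc_to_chw(hwc, height=2, width=2, channels=3)
print(chw)
# ['R0', 'R1', 'R2', 'R3', 'G0', 'G1', 'G2', 'G3', 'B0', 'B1', 'B2', 'B3']
```

After the conversion, each channel occupies one contiguous plane of `height * width` elements, instead of being interleaved pixel by pixel.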

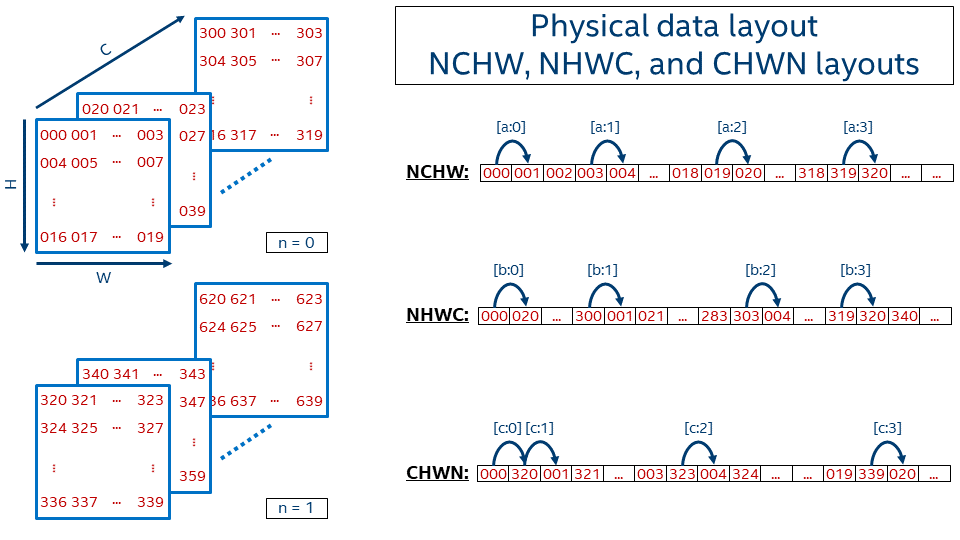

Regarding the physical layouts in memory, at least the Intel MKL-DNN and TensorFlow implementations are the same. This is very intuitive just by looking at the following image, which I copied from the Intel MKL-DNN website. You would also find that NCHW is stored “naturally” in memory.
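The layouts can also be written down as offset functions that map a logical index (n, c, h, w) to a position in a flat, densely packed buffer. The sketch below is my own illustration, assuming no padding or blocking; the function names are hypothetical. In NCHW the width index varies fastest, while in NHWC the channel index varies fastest.

```python
def offset_nchw(n, c, h, w, C, H, W):
    # Width is innermost, then height, then channel, then batch.
    return ((n * C + c) * H + h) * W + w


def offset_nhwc(n, c, h, w, C, H, W):
    # Channel is innermost, then width, then height, then batch.
    return ((n * H + h) * W + w) * C + c


C, H, W = 3, 2, 2
# Stepping to the next channel moves H * W elements in NCHW but only 1 in NHWC.
print(offset_nchw(0, 1, 0, 0, C, H, W))  # 4
print(offset_nhwc(0, 1, 0, 0, C, H, W))  # 1
# Stepping to the next column moves 1 element in NCHW but C elements in NHWC.
print(offset_nchw(0, 0, 0, 1, C, H, W))  # 1
print(offset_nhwc(0, 0, 0, 1, C, H, W))  # 3
```

This is why a per-channel plane is contiguous in NCHW, whereas all channels of a single pixel are contiguous in NHWC.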

The NCHW tensor memory layout for cuDNN is also the same, and it can be found in the cuDNN developer guide.
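cuDNN can also describe a 4-D tensor by one stride per dimension, always listed in N, C, H, W order (for example, the `nStride`, `cStride`, `hStride` and `wStride` arguments of `cudnnSetTensor4dDescriptorEx`). The pure-Python sketch below only illustrates what those stride values would be for densely packed NCHW and NHWC tensors. It does not call cuDNN, and the helper names `packed_strides` and `offset` are hypothetical.

```python
def packed_strides(layout, C, H, W):
    """Return (n_stride, c_stride, h_stride, w_stride) for a densely packed tensor.

    The strides are always listed in N, C, H, W order; only their values
    depend on the physical layout.
    """
    if layout == "NCHW":
        return (C * H * W, H * W, W, 1)
    if layout == "NHWC":
        return (H * W * C, 1, W * C, C)
    raise ValueError(f"unsupported layout: {layout}")


def offset(n, c, h, w, strides):
    # The element offset is the dot product of the index with the strides.
    n_stride, c_stride, h_stride, w_stride = strides
    return n * n_stride + c * c_stride + h * h_stride + w * w_stride


print(packed_strides("NCHW", C=3, H=2, W=2))  # (12, 4, 2, 1)
print(packed_strides("NHWC", C=3, H=2, W=2))  # (12, 1, 6, 3)
```

Switching between NCHW and NHWC changes only the stride values, not the logical shape, which is how a strided descriptor can express both layouts.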

References

Tensor Physical Layouts on Memory

https://leimao.github.io/blog/Tensor-Physical-Layout-on-Memory/