CycleGAN Image Converter

Introduction

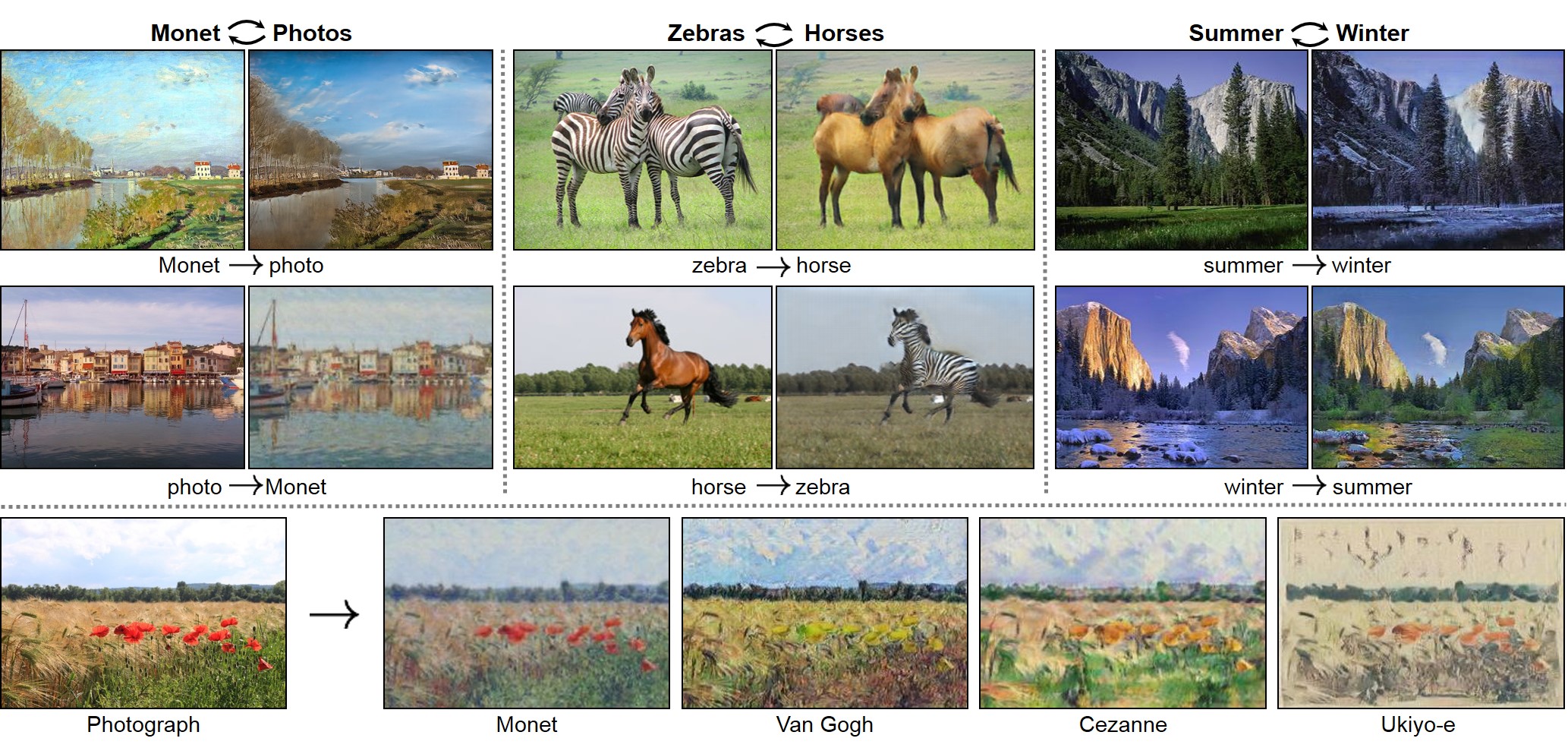

Unlike ordinary pixel-to-pixel translation models, which need paired training images, cycle-consistent adversarial networks (CycleGAN) have proven useful for image translation without paired data. This is a more compact reimplementation of CycleGAN for image translation.
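For reference, the cycle-consistency term that makes unpaired training possible can be written as below, following the paper by Zhu et al. listed under References. Here G and F denote the two generators (A to B and B to A), D_X and D_Y the two discriminators, and λ the cycle loss weight. This is the paper's formulation, not a description of how this repository names or weights the terms.

```latex
\mathcal{L}_{\mathrm{cyc}}(G, F) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big]
  + \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big]

\mathcal{L}(G, F, D_X, D_Y) =
  \mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y)
  + \mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X)
  + \lambda \, \mathcal{L}_{\mathrm{cyc}}(G, F)
```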

Dependencies

- Python 3.5

- Numpy 1.14

- TensorFlow 1.8

- ProgressBar2 3.37.1

- OpenCV 3.4

Files

    .

Usage

Download Dataset

Download and unzip the specified datasets to the designated directories.

    $ python download.py --help

For example, to download the apple2orange and horse2zebra datasets to the download directory and extract them to the data directory:

    $ python download.py --download_dir ./download --data_dir ./data --datasets apple2orange horse2zebra
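As an illustration of the command-line shape above, here is a minimal sketch of how a script could accept these three flags with `argparse`. The flag names come from the command shown; the defaults, help strings, and the `build_parser` helper are assumptions and are not taken from `download.py`.

```python
import argparse


def build_parser():
    # Flag names mirror the command above; defaults are illustrative guesses.
    parser = argparse.ArgumentParser(description="Download CycleGAN datasets.")
    parser.add_argument("--download_dir", default="./download",
                        help="directory where the archives are saved")
    parser.add_argument("--data_dir", default="./data",
                        help="directory where the archives are extracted")
    # nargs="+" lets several dataset names follow a single --datasets flag.
    parser.add_argument("--datasets", nargs="+", default=["horse2zebra"],
                        help="one or more dataset names")
    return parser


args = build_parser().parse_args([
    "--download_dir", "./download",
    "--data_dir", "./data",
    "--datasets", "apple2orange", "horse2zebra",
])
print(args.datasets)
```

Using `nargs="+"` is what allows `apple2orange horse2zebra` to be passed as a space-separated list.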

Train Model

To reach good conversion quality, training takes at least 100 epochs, which can take a very long time even on an NVIDIA GTX TITAN X graphics card. The model also consumes a lot of GPU memory, but this can be reduced by lowering the number of convolution filters, num_filters, in the model.
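To give a rough sense of why lowering num_filters helps, the sketch below counts the weights of a toy convolution stack whose channel widths scale with num_filters. The three-layer layout (3 → n → 2n → 4n channels) and the helper names are hypothetical and are not the architecture used in this repository; only the scaling trend is the point.

```python
def conv_params(kernel, c_in, c_out):
    # Weights of a kernel x kernel convolution plus one bias per output channel.
    return kernel * kernel * c_in * c_out + c_out


def toy_stack_params(num_filters):
    # Hypothetical stack: RGB input -> n -> 2n -> 4n channels.
    n = num_filters
    return (conv_params(7, 3, n)
            + conv_params(3, n, 2 * n)
            + conv_params(3, 2 * n, 4 * n))


for n in (64, 32, 16):
    print(n, toy_stack_params(n))
```

Because most layers have both input and output widths proportional to num_filters, halving it cuts the weight count by close to a factor of four, while the activation maps shrink roughly by half.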

    $ python train.py --help

For example, to train a CycleGAN model on the horse2zebra dataset:

    $ python train.py --img_A_dir ./data/horse2zebra/trainA --img_B_dir ./data/horse2zebra/trainB --model_dir ./model/horse_zebra --model_name horse_zebra.ckpt --random_seed 0 --validation_A_dir ./data/horse2zebra/testA --validation_B_dir ./data/horse2zebra/testB --output_dir ./validation_output

With validation_A_dir, validation_B_dir, and output_dir set, we can visually monitor the conversion of the validation images after each epoch.

Image Conversion

Convert images using pre-trained models.

    $ python convert.py --help

To convert images, put them into img_dir and run the following command in the terminal. The converted images are saved in output_dir:

    $ python convert.py --model_filepath ./model/horse_zebra/horse_zebra.ckpt --img_dir ./data/horse2zebra/testA --conversion_direction A2B --output_dir ./converted_images

The convention for conversion_direction is that the first object in the model file name is A and the second object is B. In this case, horse = A and zebra = B, so A2B converts horses to zebras.
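The naming convention can be sketched as a small helper that maps a model file name and a direction string to source and target domains. Both function names are hypothetical and not part of `convert.py`; the sketch also assumes the file name contains exactly one underscore separating the two objects.

```python
import os


def domains_from_model_name(model_filepath):
    # "horse_zebra.ckpt" -> {"A": "horse", "B": "zebra"}
    name = os.path.splitext(os.path.basename(model_filepath))[0]
    first, second = name.split("_", 1)
    return {"A": first, "B": second}


def describe_direction(model_filepath, conversion_direction):
    # Returns (source_domain, target_domain) for "A2B" or "B2A".
    if conversion_direction not in ("A2B", "B2A"):
        raise ValueError("conversion_direction must be 'A2B' or 'B2A'")
    domains = domains_from_model_name(model_filepath)
    src, dst = conversion_direction.split("2")
    return domains[src], domains[dst]


print(describe_direction("./model/horse_zebra/horse_zebra.ckpt", "A2B"))
print(describe_direction("./model/horse_zebra/horse_zebra.ckpt", "B2A"))
```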

Demo

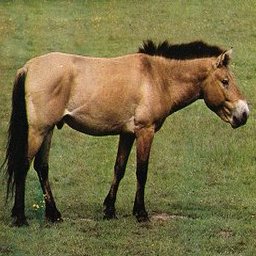

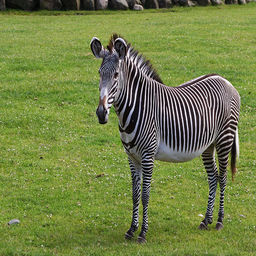

Horse and Zebra Conversion

The horse and zebra conversion model was trained for more than 500 epochs. Some selected test images are shown below.

| Horse | Horse to Zebra | Zebra | Zebra to Horse |
|---|---|---|---|

Note that the conversions shown above look very good. For most of the test images, however, the conversions have visible defects. Horses or zebras in “abnormal” poses generally do not convert well, and the zebra-to-horse conversion looks worse than the horse-to-zebra conversion. I think one reason for the defects is that the dataset is small and does not cover all horse and zebra poses.

The test images after 200 epochs do not look much different from the test images after 500 epochs, although the training loss kept decreasing. To further improve the training, I think it might be worth trying learning rate decay and cycle loss weight decay.
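One possible form of such decay is a linear schedule that holds a value constant for the first part of training and then ramps it down. The sketch below is not implemented in this repository; the function name, the starting values (0.0002 for the learning rate, 10.0 for the cycle loss weight), the final cycle weight of 5.0, and the epoch boundaries are all assumptions for illustration.

```python
def linear_decay(initial, epoch, start_epoch, end_epoch, final=0.0):
    # Constant before start_epoch, linear ramp to `final` at end_epoch,
    # then held at `final`.
    if epoch < start_epoch:
        return initial
    if epoch >= end_epoch:
        return final
    frac = (epoch - start_epoch) / float(end_epoch - start_epoch)
    return initial + frac * (final - initial)


for epoch in (0, 100, 150, 200):
    learning_rate = linear_decay(0.0002, epoch, 100, 200)
    cycle_weight = linear_decay(10.0, epoch, 100, 200, final=5.0)
    print(epoch, learning_rate, cycle_weight)
```

The same function serves both quantities, so the learning rate and the cycle loss weight can be decayed on independent schedules by passing different boundaries.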

Download the pre-trained horse-zebra conversion model from Google Drive.

Yosemite Summer and Winter Conversion

The Yosemite summer and winter conversion model was trained for more than 200 epochs. Some selected test images are shown below.

| Summer | Summer to Winter | Winter | Winter to Summer |
|---|---|---|---|

Most of the conversions work fairly well, except that snow could not be converted, probably because there are no objects that can be mapped to snow.

Download the pre-trained Yosemite summer-winter conversion model from Google Drive.

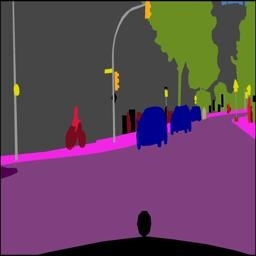

Cityscapes Vision and Semantic Conversion

The Cityscapes vision and semantic conversion model was trained for more than 150 epochs. Some selected test images are shown below.

| Vision | Vision to Semantic | Semantic | Semantic to Vision |
|---|---|---|---|

The conversion from vision to semantic usually produces nonexistent objects or shifts object positions in the semantic view, suggesting that CycleGAN, and probably GANs in general, is not a good technique for semantic labeling. The conversion from semantic to vision failed to reproduce the details of the objects.

Download the pre-trained Cityscapes vision-semantic conversion model from Google Drive.

References

- Jun-Yan Zhu, Taesung Park, Phillip Isola, Alexei A. Efros. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. 2017.

- Xiaowei Hu’s CycleGAN TensorFlow Implementation Repository

- Hardik Bansal’s CycleGAN TensorFlow Implementation Repository

GitHub

CycleGAN Image Converter