Gradient Boosting Machine

Introduction

Many people, including me, have been using gradient boosting machine (GBM), one of the most popular and powerful machine learning algorithms, to solve machine learning problems without knowing exactly what is going on underneath.

In this article, I will elucidate all the mathematics behind without leaving anything ambiguous.

Problem Definition

Most of the machine learning problems, including classification and regression problems, could be modeled as

$$

\begin{align}

F^{\ast} &= \operatorname*{argmin}_{F} \mathbb{E}_{(\mathbf{x},y) \sim P(\mathbf{x},y)}[L(y,F(\mathbf{x}))] \\

&= \operatorname*{argmin}_{F} \mathbb{E}_{\mathbf{x} \sim P(\mathbf{x})} \big[ \mathbb{E}_{y \sim P(y | \mathbf{x})}[L(y,F(\mathbf{x}))] \rvert \mathbf{x} \big]

\end{align}

$$

where $\mathbf{x}$ and $y$ are features and label respectively, $L$ is the loss function, $F$ is a function which represents the model, and $F^{\ast}$ is the optimized function.

Gradient Descent

Parameter Space

Suppose $F$ is a parameterized model with parameters $\mathbf{P}$, $F(\mathbf{x};\mathbf{P})$, our optimization goal becomes equivalent to

$$

\begin{align}

\mathbf{P}^{\ast} = \operatorname*{argmin}_{\mathbf{P}} \Phi(\mathbf{P})

\end{align}

$$

where

$$

\begin{align}

\Phi(\mathbf{P}) = \mathbb{E}_{(\mathbf{x},y) \sim P(\mathbf{x},y)}[L(y,F(\mathbf{x};\mathbf{P}))]

\end{align}

$$

and the optimal model is

$$

\begin{align}

F^{\ast}(\mathbf{x}) = F(\mathbf{x};\mathbf{P}^{\ast})

\end{align}

$$

We apply gradient descent to optimize the parameters $\mathbf{P}$. Suppose at time step $t-1$, the value of the parameters are $\mathbf{P}_{t-1}$. The gradient of the parameters, $\mathbf{g}_t$, is computed as follows.

$$

\begin{align}

\mathbf{g}_{t} &= \{g_{jt}\} \\

&= \Bigg\{ \bigg[ \frac{\partial}{\partial P_j} \Phi(\mathbf{P}) \bigg] \Biggr\rvert_{\mathbf{P} = \mathbf{P}_{t-1}} \Bigg\} \\

\end{align}

$$

where $j$ is the index of the parameter in the model.

The updated parameters at time step $t$ will become

$$

\begin{align}

\mathbf{P}_{t} = \mathbf{P}_{t-1} + \mathbf{p}_{t-1}

\end{align}

$$

where

$$

\begin{align}

\mathbf{p}_{t-1} = -{\rho}_{t}\mathbf{g}_{t}

\end{align}

$$

Here ${\rho}_{t}$ is a scalar. Usually in machine learning, ${\rho}_{t}$ is arbitrary and is not optimal. To reach good optimization performance, we do linear search for ${\rho}_{t}$, i.e.,

$$

\begin{align}

{\rho}_{t} &= \operatorname*{argmin}_{\rho} \Phi(\mathbf{P}_{t-1} - \rho \mathbf{g}_{t})

\end{align}

$$

Because ${\rho}_{t}$ is a scalar, so it could not make all the parameters in $\mathbf{P}$ to reach optimal values in one step. To optimize the parameters, we would have to do multiple steps to update. If we unroll the time-series updates, we would have

$$

\begin{align}

\mathbf{P}_{t} = \mathbf{P}_{0} + \sum_{i=0}^{t-1} \mathbf{p}_{i}

\end{align}

$$

where $\mathbf{P}_{0}$ is the initialized parameters at time step $0$.

Function Space

In stead of doing optimization in parameter space, we could also do optimization in function space. We consider $F(\mathbf{x})$, i.e., the value of function $F$ evaluated at point $\mathbf{x}$, as the parameter to optimize.

Our objective function is

$$

\begin{align}

{\Phi}(F) &= \mathbb{E}_{(\mathbf{x},y) \sim P(\mathbf{x},y)}[L(y,F(\mathbf{x}))] \\

&= \mathbb{E}_{\mathbf{x} \sim P(\mathbf{x})} \big[ \mathbb{E}_{y \sim P(y | \mathbf{x})}[L(y,F(\mathbf{x}))] \rvert \mathbf{x} \big] \\

&= \int\limits_{\mathbf{x}} p(\mathbf{x}) \big[ \mathbb{E}_{y \sim P(y | \mathbf{x})}[L(y,F(\mathbf{x}))] \rvert \mathbf{x} \big] d\mathbf{x} \\

\end{align}

$$

and we define

$$

\begin{align}

{\phi}(F(\mathbf{x})) = \mathbb{E}_{y \sim P(y | \mathbf{x})}[L(y,F(\mathbf{x}))] \rvert \mathbf{x}

\end{align}

$$

We assume function $F$ has no constrains, i.e., for any $\mathbf{x}$, $F(\mathbf{x})$ could be any value. Minimizing the above objective function is equivalent to minimizing ${\phi}(F(\mathbf{x}))$ for each $\mathbf{x}$ in the integral interval. Usually, there are infinite number of $\mathbf{x}$, and it is impossible to minimize ${\phi}(F(\mathbf{x}))$ for all $\mathbf{x}$. However, in practice, our training data is finite, so we could do this.

Suppose our training set consists of $N$ data points ${ \mathbf{x} ,y }_{1}^{N}$, the function $F$ could be represented as a discrete function

$$

\begin{align}

F = F(\mathbf{x}), x \in {\{\mathbf{x}_{i}\}}_{1}^{N}

\end{align}

$$

if $\mathbf{x}$ does not exist in the training data, $F(\mathbf{x})$ is undefined and it could be any value. Also note that $F$ does not have to be differentiable with respect to $\mathbf{x}$.

For each $\mathbf{x}$ from the training set, We compute the derivative of ${\phi}(F(\mathbf{x}))$ with respect to $F(\mathbf{x})$,

$$

\begin{align}

{g}(\mathbf{x}) &= \frac{\partial}{\partial F(\mathbf{x})} {\phi}(F(\mathbf{x})) \\

&= \frac{\partial}{\partial F(\mathbf{x})} \mathbb{E}_{y \sim P(y | \mathbf{x})}[L(y,F(\mathbf{x}))] \rvert \mathbf{x}

\end{align}

$$

So similarly,

$$

\begin{align}

g = {g}(\mathbf{x}), x \in {\{\mathbf{x}_{i}\}}_{1}^{N} \label{eq1}

\end{align}

$$

We apply gradient descent to optimize $F$. Suppose at time step $t-1$, the value of the parameters are $F_{t-1}$. The gradient of the parameters, $g_t$, is computed as follows.

$$

\begin{align}

F_{t-1} = {F}_{t-1}(\mathbf{x}), x \in {\{\mathbf{x}_{i}\}}_{1}^{N}

\end{align}

$$

$$

\begin{align}

g_{t} &= {g}_{t}(\mathbf{x}) \\

&= \bigg[ \frac{\partial}{\partial F(\mathbf{x})} {\phi}(F(\mathbf{x})) \bigg]_{F(\mathbf{x}) = F_{t-1}(\mathbf{x})} \\

&= \bigg[ \frac{\partial}{\partial F(\mathbf{x})} \mathbb{E}_{y \sim P(y | \mathbf{x})}[L(y,F(\mathbf{x}))] \rvert \mathbf{x} \bigg]_{F(\mathbf{x}) = F_{t-1}(\mathbf{x})}

\end{align}

$$

$$

\begin{align}

F_{t}(\mathbf{x}) = F_{t-1}(\mathbf{x}) + f_{t}(\mathbf{x})

\end{align}

$$

where

$$

\begin{align}

f_{t}(\mathbf{x}) = -{\rho}_{t} g_{t}(\mathbf{x})

\end{align}

$$

So the updated function $F$ becomes

$$

\begin{align}

F_{t}(\mathbf{x}) &= F_{t-1}(\mathbf{x}) + f_{t}(\mathbf{x}) \\

&= F_{t-1}(\mathbf{x}) -{\rho}_{t} g_{t}(\mathbf{x})

\end{align}

$$

Similarly, the value of ${\rho}_{t}$ is determined by linear search,

$$

\begin{align}

{\rho}_{t} &= \operatorname*{argmin}_{\rho} \Phi(F_{t-1} -{\rho} g_{t}) \\

&= \operatorname*{argmin}_{\rho} \mathbb{E}_{(\mathbf{x},y) \sim P(\mathbf{x},y)}[L(y,F_{t-1}(\mathbf{x}) - \rho g_t(\mathbf{x}))] \\

&= \operatorname*{argmin}_{\rho} \sum_{i=1}^{N} L(y_i,F_{t-1}(\mathbf{x}_i) - \rho g_t(\mathbf{x}_i))

\end{align}

$$

Note that because each data point $\mathbf{x}_i$ in the dataset only has one label $y_i$, i.e., $P(y_i | \mathbf{x}_i) = 1$,

$$

\begin{align}

g_t(\mathbf{x}_i) &= \bigg[ \frac{\partial}{\partial F(\mathbf{x}_i)} \mathbb{E}_{y \sim P(y | \mathbf{x}_i)}[L(y,F(\mathbf{x}_i))] \bigg]_{F(\mathbf{x}) = F_{t-1}(\mathbf{x})} \\

&= \bigg[ \frac{\partial}{\partial F(\mathbf{x}_i)} L(y_i,F(\mathbf{x}_i)) \bigg]_{F(\mathbf{x}) = F_{t-1}(\mathbf{x})}

\end{align}

$$

The most frequently used loss function for regression is L2 loss.

$$

\begin{align}

L(y_i,F(\mathbf{x}_i)) = \frac{1}{2}(y_i - F(\mathbf{x}_i))^2

\end{align}

$$

The $g_t(\mathbf{x}_i)$ expression for L2 loss becomes

$$

\begin{align}

g_t(\mathbf{x}_i) &= \bigg[ \frac{\partial}{\partial F(\mathbf{x}_i)} L(y_i,F(\mathbf{x}_i)) \bigg]_{F(\mathbf{x}) = F_{t-1}(\mathbf{x})} \\

&= \bigg[ \frac{\partial}{\partial F(\mathbf{x}_i)} \frac{1}{2}(y_i - F(\mathbf{x}_i))^2 \bigg]_{F(\mathbf{x}) = F_{t-1}(\mathbf{x})} \\

&= \bigg[ (y_i - F(\mathbf{x}_i))(0-1) \bigg]_{F(\mathbf{x}) = F_{t-1}(\mathbf{x})} \\

&= \bigg[ F(\mathbf{x}_i) - y_i \bigg]_{F(\mathbf{x}) = F_{t-1}(\mathbf{x})} \\

\end{align}

$$

Gradient Boosting Machine

Theory

As we have pointed out, the optimization in functional space directly tunes the value of $F(\mathbf{x})$, and the optimization for $F(\mathbf{x})$ does not dependent on the training data points other than $\mathbf{x}$. So $F$ would be very likely not to generalize well to the data points that have not been shown in the training set.

If we could impose smoothness to $F$ by introducing nearby data points when tuning the value of $F(\mathbf{x})$, $F$ is likely to generalize better.

So instead of updating $F$ using discrete function $g$ which has no smoothness at all, we use another smooth function $h$ such that $h(\mathbf{x})$ is close to $g(\mathbf{x})$ for $x \in {\{\mathbf{x}_{i}\}}_{1}^{N}$. In order to make $h$ smooth, we need to use all the data points from training set ${\{\mathbf{x}_{i}\}}_{1}^{N}$ to fit the parameters for $h$.

Concretely, we create a new model $h(\mathbf{x}; \mathbf{a}_t)$ at each time step $t$. The update of function $F$ becomes

$$

\begin{align}

F_t(\mathbf{x}) = F_{t-1}(\mathbf{x}) + \rho_t h(\mathbf{x}; \mathbf{a}_t)

\end{align}

$$

Because $h(\mathbf{x}; \mathbf{a}_t)$ is a smoothing version of $-g_{t}(\mathbf{x})$, therefore, we use $(\mathbf{x}_i, g_{t}(\mathbf{x}_i))$, $x \in {\{\mathbf{x}_{i}\}}_{1}^{N}$, as the train set to fit $h(\mathbf{x}; \mathbf{a}_t)$ using L2 loss.

$$

\begin{align}

\mathbf{a}_t = \operatorname*{argmin}_{\mathbf{a}, \beta} \sum_{i=1}^{N} [-g_t(\mathbf{x}_i) - \beta h(\mathbf{x}_i; \mathbf{a}_t)]^2

\end{align}

$$

Note that $h(\mathbf{x}; \mathbf{a}_t)$ is parameterized and smoothed by fitting using all data points from the train set. Sometimes, $-g_t(\mathbf{x}_i)$ is also denoted as $\tilde{y}_i$, and $\tilde{y}_i$ is called “pseudo-response”.

$$

\begin{align}

\tilde{y}_i &= -g_t(\mathbf{x}_i) \\

&= - \bigg[ \frac{\partial}{\partial F(\mathbf{x}_i)} L(y_i,F(\mathbf{x}_i)) \bigg]_{F(\mathbf{x}) = F_{t-1}(\mathbf{x})}

\end{align}

$$

The constant $\rho_t$ could be computed using linear search.

$$

\begin{align}

{\rho}_{t} &= \operatorname*{argmin}_{\rho} \sum_{i=1}^{N} L(y_i,F_{t-1}(\mathbf{x}_i) + \rho h(\mathbf{x}_i; \mathbf{a}_t))

\end{align}

$$

Therefore, gradient boosting machine is nothing special but using a parametric smoothing function $h(\mathbf{x}_i; \mathbf{a}_t))$ to replace the discrete non-parametric gradient function $g_t(\mathbf{x})$ during the machine learning optimization in the function space. In each time step, we introduce a new model, which is called “boost”.

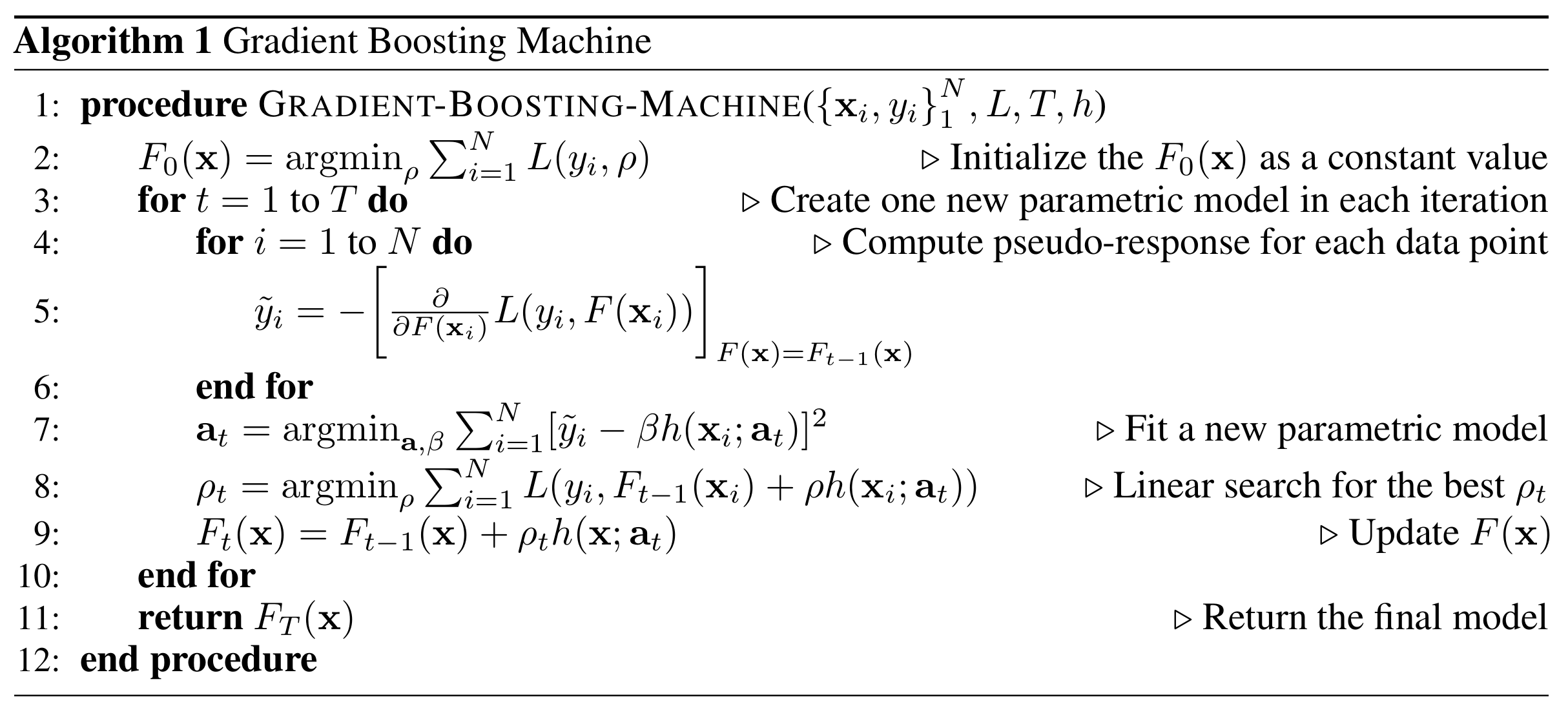

Algorithm

References

Gradient Boosting Machine