Autonomous Driving Trinity: Vision, Natural Language, and Action

Introduction

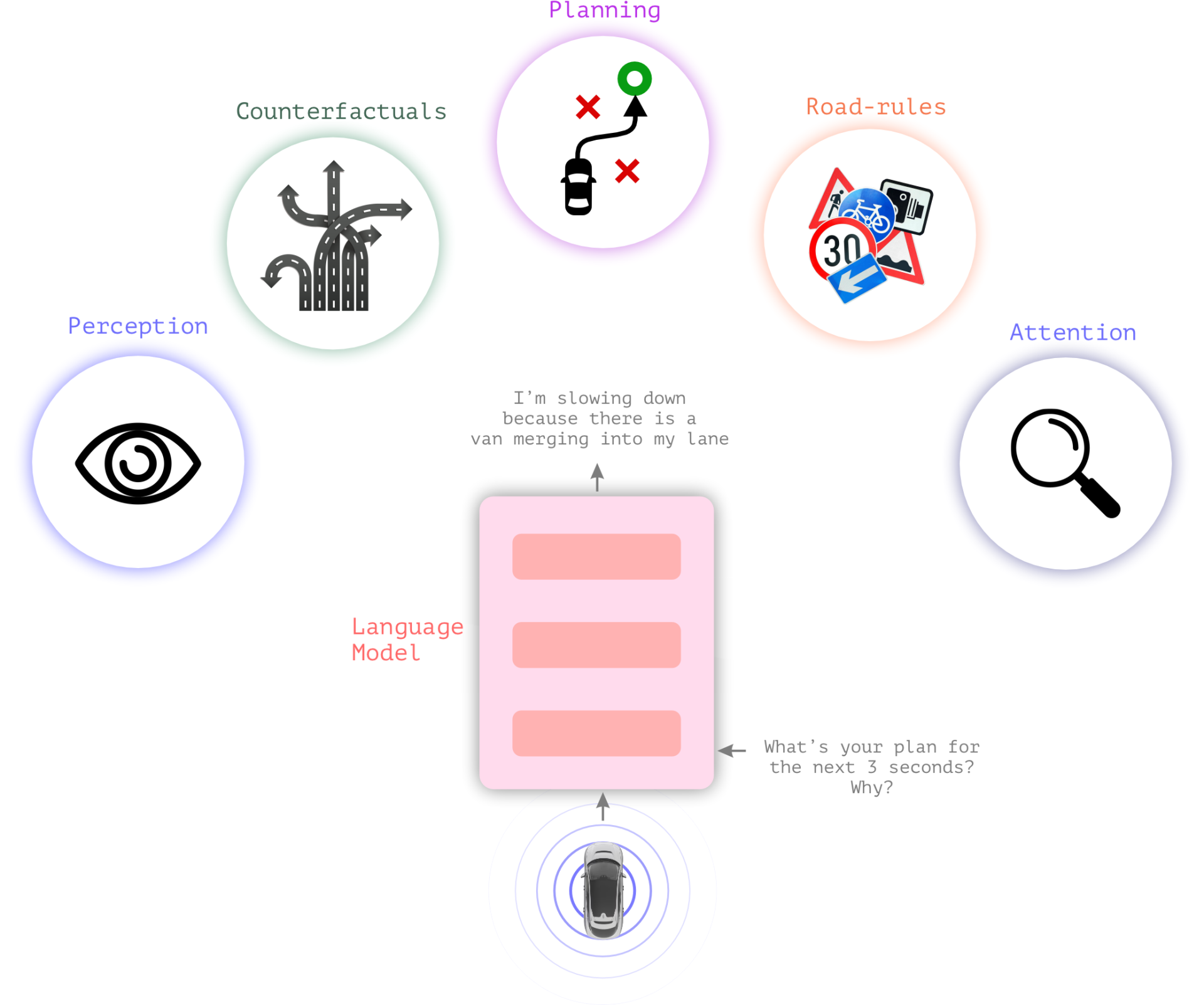

Recently, I came across a research blog post, “LINGO-1: Exploring Natural Language for Autonomous Driving”, from Wayve. In it, Wayve introduced LINGO-1, a driving-specific visual question answering (VQA) model for tasks such as perception, counterfactuals, planning, reasoning, and attention, which can help interpret the behavior of autonomous driving models.

Visual question answering is no longer new, and earlier models such as Haomo's DriveGPT have already generated driving plans and human-interpretable reasoning from driving scenes. What interested me in the blog post was Wayve's future plan to integrate LINGO's natural language, reasoning, and planning capabilities into a closed-loop driving model to enhance driving performance, safety, and interpretability. In my opinion, vision, natural language, and action from a closed-loop driving model constitute the trinity of future autonomous driving.

In this blog post, I would like to quickly discuss the “autonomous driving trinity”.

LINGO-1, An Open-Loop Driving Commentator

There is nothing too fancy about Wayve's LINGO-1 VQA model. Given a visual scene or a sequence of visual scenes and a natural language question from the user, the VQA model answers questions related to perception, planning, reasoning, and so on.

This can be achieved by training the VQA model on an extensive dataset of human driving scenarios, annotated with carefully crafted natural language questions and their corresponding human-generated answers and descriptions.
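To make the shape of such a dataset concrete, here is a minimal sketch of what one annotated training example might look like, assuming each example pairs one or more camera frames with a single question and a human-written answer. The class `DrivingVQASample`, the `validate` helper, the task labels, and the file names are all hypothetical and are not taken from Wayve's actual data format.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical task categories, mirroring the tasks listed for LINGO-1.
TASKS = {"perception", "counterfactual", "planning", "reasoning", "attention"}


@dataclass
class DrivingVQASample:
    """One hypothetical training example for a driving VQA model."""
    frame_paths: List[str]    # a single scene or a short sequence of camera frames
    question: str             # natural language question crafted by an annotator
    answer: str               # human-generated answer or description
    task: str = "perception"  # which kind of question this is


def validate(sample: DrivingVQASample) -> bool:
    """Basic sanity checks a data pipeline might apply before training."""
    return (
        len(sample.frame_paths) > 0
        and sample.question.strip() != ""
        and sample.answer.strip() != ""
        and sample.task in TASKS
    )


sample = DrivingVQASample(
    frame_paths=["frame_000.jpg", "frame_001.jpg"],
    question="Why are we slowing down?",
    answer="A pedestrian is about to cross at the zebra crossing ahead.",
    task="reasoning",
)
print(validate(sample))
```

The model itself would consume the frames and the question and be trained to produce the answer; this sketch only covers the data side.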

Autonomous Driving Trinity: Vision, Natural Language, and Action

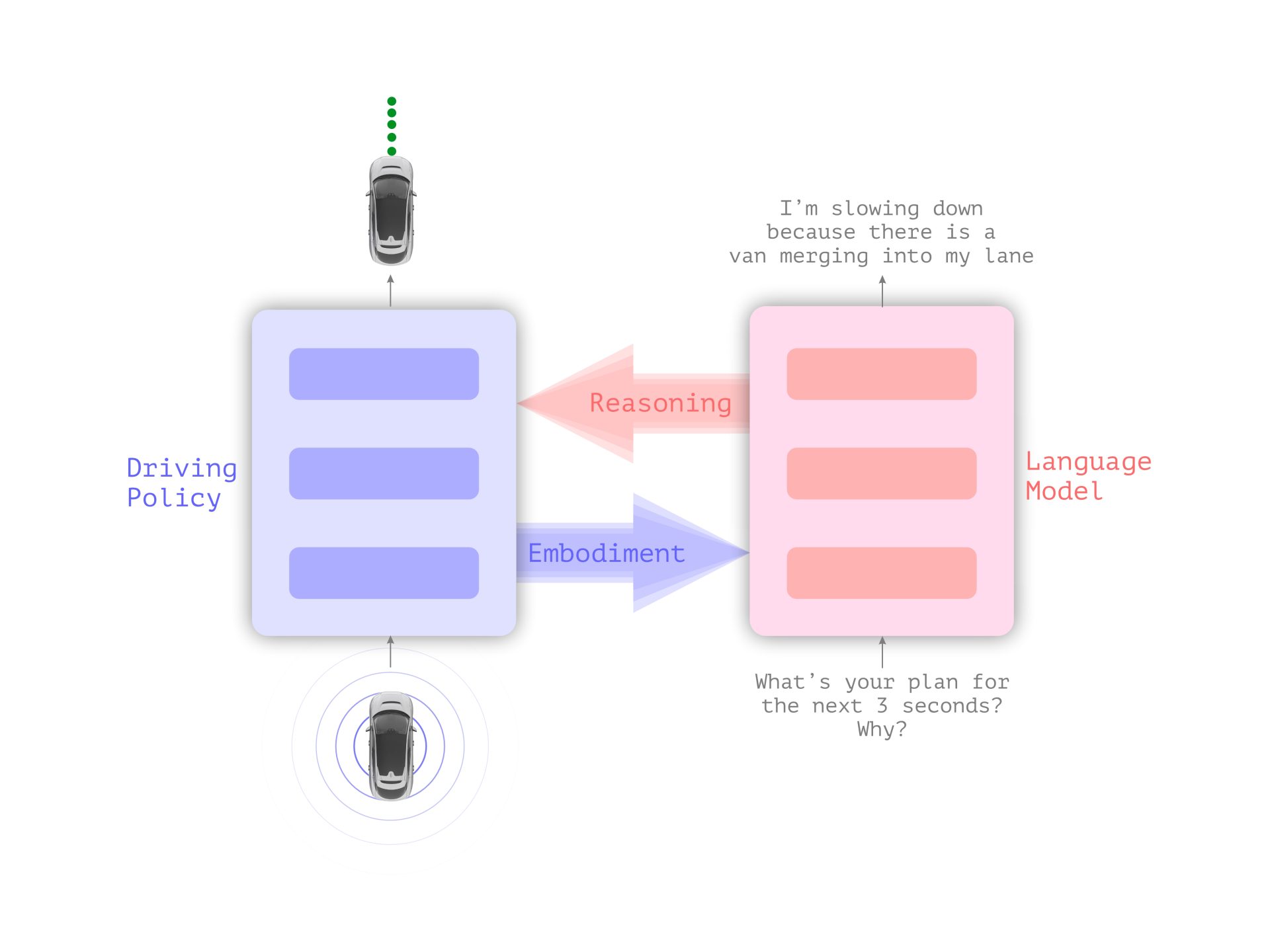

Wayve's proposed approach of integrating LINGO's natural language, reasoning, and planning capabilities into a closed-loop driving model is illustrated in the figure above.

At first glance, this concept may seem reminiscent of Haomo's DriveGPT model, in which current and past perceptual information informs future driving plans along with the reasoning behind them. What DriveGPT did not previously show was whether the reasoning loops back into decision-making, i.e., whether there is a red “Reasoning” arrow going into the “Driving Policy” module.

In Wayve's closed-loop driving model proposal, reasoning becomes an explicit input to decision-making. If the reasoning is human-interpretable, the interpretability of the closed-loop driving model can be substantially improved.

For example, when the car drives into a downtown area with many surrounding cars, the natural language model should describe the scene as very crowded and suggest slowing down. This reasoning should then affect the driving decision.
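The following toy sketch shows what it means for reasoning to be an explicit input to the driving policy. It assumes the reasoning module emits both a human-readable explanation and a structured signal that the policy can consume. A simple rule based on the number of nearby vehicles stands in for the language model, and the names `reason`, `driving_policy`, `crowded_threshold`, and `decel` are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Reasoning:
    text: str        # human-interpretable explanation of the scene
    slow_down: bool  # structured suggestion the driving policy can consume


def reason(num_nearby_vehicles: int, crowded_threshold: int = 10) -> Reasoning:
    # Hypothetical stand-in for the language model: describe the scene and suggest an action.
    if num_nearby_vehicles >= crowded_threshold:
        return Reasoning(
            f"The scene is crowded ({num_nearby_vehicles} vehicles nearby); slow down.", True
        )
    return Reasoning(
        f"Traffic is light ({num_nearby_vehicles} vehicles nearby); keep the current speed.", False
    )


def driving_policy(current_speed: float, reasoning: Reasoning, decel: float = 5.0) -> float:
    # The reasoning output is an explicit input to the decision, not just a side commentary.
    if reasoning.slow_down:
        return max(current_speed - decel, 0.0)
    return current_speed


speed = 50.0  # km/h
# A few timesteps with hypothetical observations: leaving the suburbs, entering downtown, leaving again.
for nearby in [3, 12, 15, 4]:
    r = reason(nearby)
    speed = driving_policy(speed, r)
    print(f"{speed:5.1f} km/h | {r.text}")
```

In an open-loop commentator, only the `text` field would exist and the policy would ignore it; here, every decision can be traced back to the sentence that justified it.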

To train such a closed-loop driving model, we need lots of data, including human driving scenes and actions, well-annotated and human-interpretable perception information for those scenes, and human natural language instructions and feedback on the driving. By leveraging vision, natural language, and action data in tandem, we may be able to develop a more capable and human-interpretable autonomous driving model.
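One possible way to use action and language supervision in tandem is a weighted multi-task objective. The sketch below assumes the model, which is conditioned on the vision input (not shown), is trained to imitate human actions with a mean squared error and to produce the reference explanation with an average negative log-likelihood. The weight `lam` and all function names are hypothetical, and this is not a description of how Wayve actually trains its models.

```python
import math
from typing import Sequence


def action_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between predicted and human-demonstrated actions."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def language_loss(token_probs: Sequence[float]) -> float:
    """Average negative log-likelihood of the reference explanation tokens."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def joint_loss(
    pred_action: Sequence[float],
    target_action: Sequence[float],
    token_probs: Sequence[float],
    lam: float = 0.5,  # hypothetical weight balancing imitation against explanation quality
) -> float:
    return action_loss(pred_action, target_action) + lam * language_loss(token_probs)


# Hypothetical action vector: [steering angle, speed], plus the probabilities
# the model assigned to each token of the human-written explanation.
loss = joint_loss([0.1, 10.0], [0.0, 12.0], [0.5, 0.5])
print(f"joint loss: {loss:.4f}")
```

Raising `lam` pushes the model toward better explanations at the possible cost of imitation accuracy; a real system would tune this trade-off empirically.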
