CUDA Compatibility

Introduction

Creating portable CUDA applications and libraries that work across a variety of NVIDIA platforms and software environments is sometimes important. NVIDIA offers CUDA compatibility at several levels.

In this blog post, I would like to discuss CUDA forward and backward compatibility from three perspectives: CUDA application or library compatibility (with the GPU architecture), CUDA Runtime compatibility (with the CUDA application or library), and CUDA Driver compatibility (with the CUDA Runtime library).

CUDA Application Compatibility

For simplicity, let’s first assume our CUDA application or library has no dependency on other CUDA libraries, such as cuDNN, cuBLAS, etc. Suppose we have a computer with an NVIDIA GPU of an old architecture, and we want to build a CUDA application or library that also runs on a computer with an NVIDIA GPU of a new architecture, or even a future one. When we build on the computer with the old GPU, NVCC can embed PTX code in the compiled binary file. When the CUDA application or library is executed on the computer with the new GPU, the CUDA Driver JIT compiles the PTX code to binaries for the new architecture. Therefore, the application or library built for the old architecture is forward compatible with the new architecture.
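As a rough illustration of the build side, the sketch below assembles `-gencode` options for NVCC. The helper name `gencode_flags` and its parameters are made up for this post. Only the flag syntax (`arch=compute_XY,code=sm_XY` for a native binary, `arch=compute_XY,code=compute_XY` for embedded PTX) comes from NVCC itself.

```python
def gencode_flags(sass_archs, ptx_arch=None):
    """Build a list of nvcc -gencode options (hypothetical helper).

    sass_archs: compute capabilities such as "70" or "80" to compile
                native binaries for.
    ptx_arch:   compute capability whose PTX is embedded so that newer
                GPUs can JIT compile it, or None to embed no PTX.
    """
    flags = []
    for cc in sass_archs:
        # Native binary for exactly this architecture.
        flags.append(f"-gencode=arch=compute_{cc},code=sm_{cc}")
    if ptx_arch is not None:
        # code=compute_XY embeds PTX instead of a native binary.
        flags.append(f"-gencode=arch=compute_{ptx_arch},code=compute_{ptx_arch}")
    return flags


if __name__ == "__main__":
    # Native binary for compute capability 7.0 plus PTX for forward compatibility.
    print(" ".join(gencode_flags(["70"], ptx_arch="70")))
```

The printed options could then be passed to an `nvcc` command line for a source file of our choice. Leaving `ptx_arch` as `None` produces a binary with no PTX and therefore no forward compatibility.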

Of course, the drawback of relying on forward compatibility is that the PTX code generated for the old architecture cannot take advantage of the new features of the new architecture, which could otherwise bring a large performance gain. We will not discuss performance in this article, as compatibility is what we want to achieve.

Conversely, suppose we have a computer with an NVIDIA GPU of a new architecture, and we want to build a CUDA application or library that also runs on a computer with an NVIDIA GPU of an old architecture. NVCC allows us to specify not only the PTX code to embed but also the architectures to generate compiled binaries for, including old ones. When the CUDA application or library is executed on the computer with the old GPU, it runs directly if it contains binaries compiled for that architecture. Otherwise, the embedded PTX code is JIT compiled to binaries for the old architecture by the CUDA Driver, which only works if the PTX targets a virtual architecture no newer than the old GPU. Therefore, the application or library built on the computer with the new GPU can be backward compatible with the old architecture.

Forward compatibility can be disabled by not embedding PTX code in the binary file. Backward compatibility can be disabled by embedding neither PTX code nor binaries specific to the old architecture in the binary file.
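The rules in this section can be summarized in a small decision function. This is a simplified model rather than NVIDIA's actual loader logic: the function `can_run` and its arguments are invented for illustration, and it assumes that a native binary runs on devices with the same major compute capability and an equal or higher minor one, while PTX can be JIT compiled for any device whose compute capability is at least that of the PTX. Special cases, such as architecture-specific targets, are ignored.

```python
def can_run(device_cc, sass_ccs=(), ptx_ccs=()):
    """Return "native", "jit", or None for a binary on a given device.

    All compute capabilities are (major, minor) tuples, e.g. (8, 6).
    sass_ccs: architectures with native binaries in the file.
    ptx_ccs:  virtual architectures with PTX embedded in the file.
    """
    # A native binary runs on the same major architecture when the
    # device minor version is not lower than the binary's.
    for major, minor in sass_ccs:
        if major == device_cc[0] and minor <= device_cc[1]:
            return "native"
    # PTX can be JIT compiled for any device at least as new as the PTX.
    for cc in ptx_ccs:
        if tuple(cc) <= tuple(device_cc):
            return "jit"
    return None


if __name__ == "__main__":
    # Built for 7.0 with PTX, run on a 9.0 device: forward compatible via JIT.
    print(can_run((9, 0), sass_ccs=[(7, 0)], ptx_ccs=[(7, 0)]))
    # Same build without PTX: forward compatibility is disabled.
    print(can_run((9, 0), sass_ccs=[(7, 0)]))
    # Built on a new machine with a 7.0 binary included: runs natively on 7.0.
    print(can_run((7, 0), sass_ccs=[(7, 0), (9, 0)], ptx_ccs=[(9, 0)]))
```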

Now, if our CUDA application or library depends on other CUDA libraries, such as cuDNN or cuBLAS, then in order to achieve forward or backward compatibility, those CUDA libraries must also be built with the same forward or backward compatibility as our CUDA application or library. However, this is sometimes not the case, which makes it impossible for our CUDA application or library to be forward or backward compatible. The developer should always check the compatibility of the dependency libraries carefully beforehand.

CUDA Runtime Compatibility

The CUDA Runtime library is a library that CUDA applications or libraries almost always have to link to at build time, sometimes without having to specify it explicitly. The exception is CUDA applications or libraries that link directly to the CUDA Driver library instead. Therefore, released CUDA software, such as cuDNN, always mentions the version of CUDA (that is, of the CUDA Runtime library) it links to. Sometimes there are multiple builds for different versions of the CUDA Runtime library as well. So CUDA application compatibility also depends on the CUDA Runtime.

However, there are scenarios where the CUDA Runtime library that our CUDA application or library links to at build time is different from the CUDA Runtime library in the execution environment. To address this, the CUDA Runtime library provides minor version (forward and/or backward) compatibility, provided that the NVIDIA Driver requirement is satisfied.

The reason CUDA explicitly describes this as minor version compatibility is that the CUDA Runtime API might differ between major versions, so an application or library that uses those APIs and is built against the CUDA Runtime of one major version might not be able to run with a CUDA Runtime of another major version. For example, cuDNN 8.6 for CUDA 10.2 cannot work with CUDA 11.2. In fact, if we check the linked shared libraries of a CUDA application or library using ldd, we will often see that only the major version of the CUDA Runtime library is specified, not the minor version. For CUDA Runtime libraries that differ only in minor version, the CUDA Runtime APIs are usually the same, so achieving minor version compatibility becomes possible.
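The major version rule can be written down as a short check. The sketch below is only a model of the rule described above: the function names are hypothetical, versions are plain "major.minor" strings, and the NVIDIA Driver requirement is deliberately not modeled.

```python
def parse_cuda_version(version):
    """Split a "major.minor" CUDA version string into two integers."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def runtime_minor_compatible(built_against, available):
    """Return True if the two CUDA Runtime versions share a major version.

    built_against: version the application or library was linked against.
    available:     version found in the execution environment.
    """
    return parse_cuda_version(built_against)[0] == parse_cuda_version(available)[0]


if __name__ == "__main__":
    # Different major versions: not expected to work.
    print(runtime_minor_compatible("10.2", "11.2"))
    # Same major version, different minor versions: candidate for
    # minor version compatibility.
    print(runtime_minor_compatible("11.2", "11.8"))
```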

CUDA Driver Compatibility

The CUDA Runtime library is built on top of the CUDA Driver library: the Runtime provides the higher-level API that the application calls, and the Driver library is what actually loads and runs the application code on the GPU. So CUDA Runtime compatibility also depends on the CUDA Driver. Although each CUDA Toolkit release ships both a CUDA Runtime library and a CUDA Driver library that are compatible with each other, the two can come from different sources and be installed separately.

The CUDA Driver library is always backward compatible. Using the latest driver allows us to run CUDA applications built with old CUDA Runtime libraries. CUDA Driver library forward compatibility, which is sometimes required on data center computers that prioritize stability and therefore keep an older driver, is more complicated and requires installing additional libraries. We will not elaborate on this in this article.
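To make the backward compatibility rule concrete, the sketch below works on the integer version numbers that `cudaDriverGetVersion` and `cudaRuntimeGetVersion` report, which encode a version as 1000 * major + 10 * minor (for example, 11020 for CUDA 11.2). The two Python functions are hypothetical helpers, the version values are passed in by hand rather than queried from a real system, and the check covers only plain backward compatibility, not minor version compatibility or the forward compatibility libraries.

```python
def decode_cuda_version(encoded):
    """Decode 1000 * major + 10 * minor into a (major, minor) tuple."""
    major = encoded // 1000
    minor = (encoded % 1000) // 10
    return major, minor


def driver_supports_runtime(driver_version, runtime_version):
    """Return True if the driver is at least as new as the runtime.

    driver_version:  latest CUDA version supported by the installed driver.
    runtime_version: version of the CUDA Runtime library in use.
    """
    return decode_cuda_version(driver_version) >= decode_cuda_version(runtime_version)


if __name__ == "__main__":
    print(decode_cuda_version(12020))
    # New driver, old runtime: backward compatibility applies.
    print(driver_supports_runtime(12020, 11080))
    # Old driver, new runtime: not covered by backward compatibility.
    print(driver_supports_runtime(11040, 12000))
```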

NVIDIA Docker

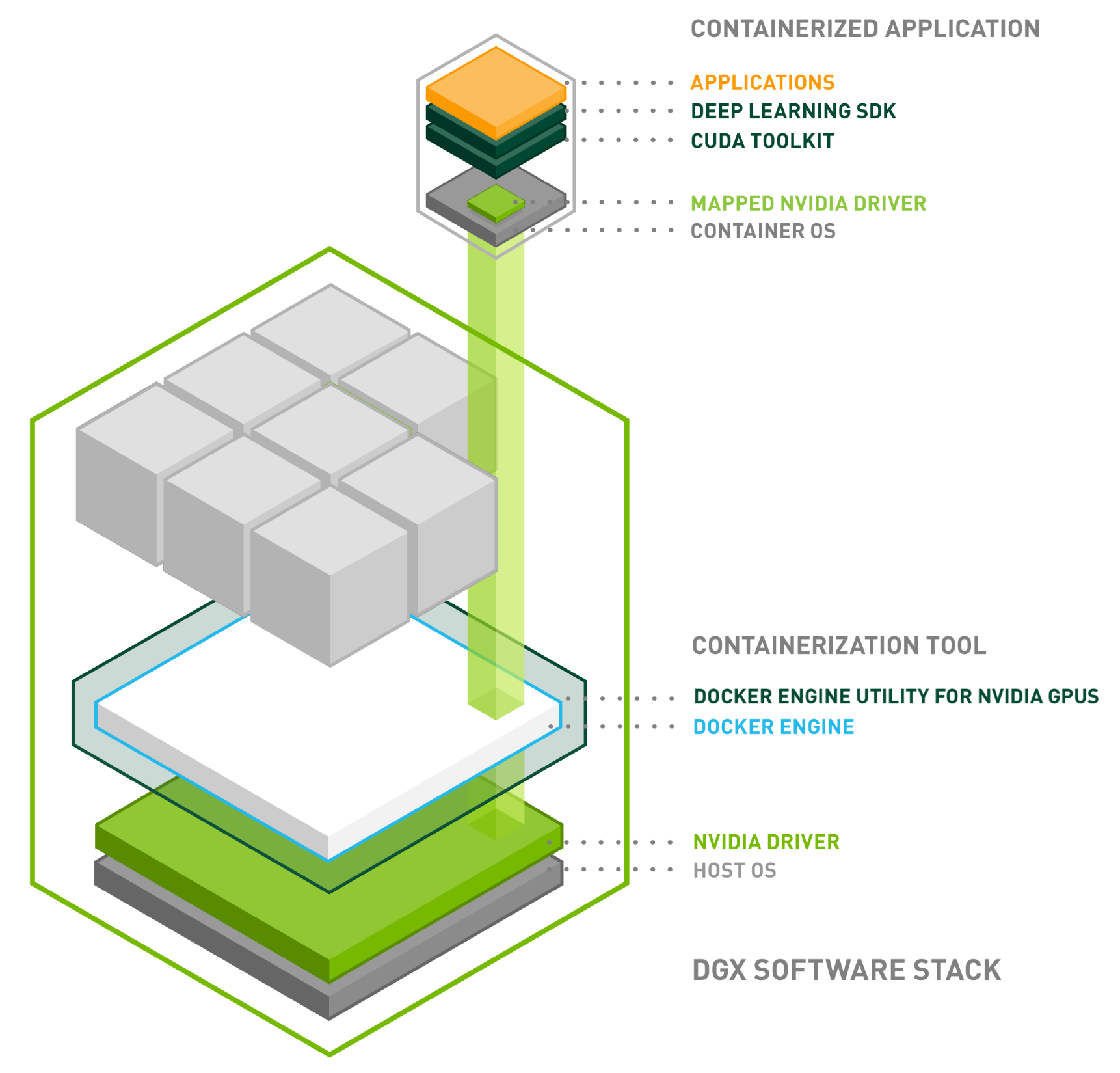

NVIDIA Docker is a convenient tool that allows the user to develop and deploy CUDA applications in a portable, reproducible, and scalable way. With NVIDIA Docker, we can build and run any CUDA application in any CUDA environment we want, provided that the Driver and GPU architecture requirements are satisfied.

From the above diagram, we can see that the CUDA Driver library comes from the host operating system, sits under the Docker engine, and is mapped into the Docker container, which installs a CUDA Runtime library of any version. The entire CUDA application design and run flow follows “Application or Library -> CUDA Runtime (Library) -> CUDA Driver (Library) -> NVIDIA GPU”. Fundamentally, it is CUDA Driver backward compatibility that allows us to run almost any CUDA Runtime library inside the Docker container, provided that the CUDA Driver library on the host is kept up to date.

References

CUDA Compatibility