CUDA Compilation

Introduction

To get the best performance or compatibility out of a CUDA program, it is sometimes necessary to understand how CUDA compilation works.

In this blog post, I would like to quickly talk about a few key CUDA compilation files and how a CUDA program is compiled at a very high level, assuming the readers already have some knowledge of C++ compilation.

CUDA Key Compilation Files

In addition to the files common to C++ program compilation, such as object files, static libraries, and shared libraries, there are three additional key files specific to CUDA program compilation: PTX files, CUBIN files, and FATBIN files.

PTX

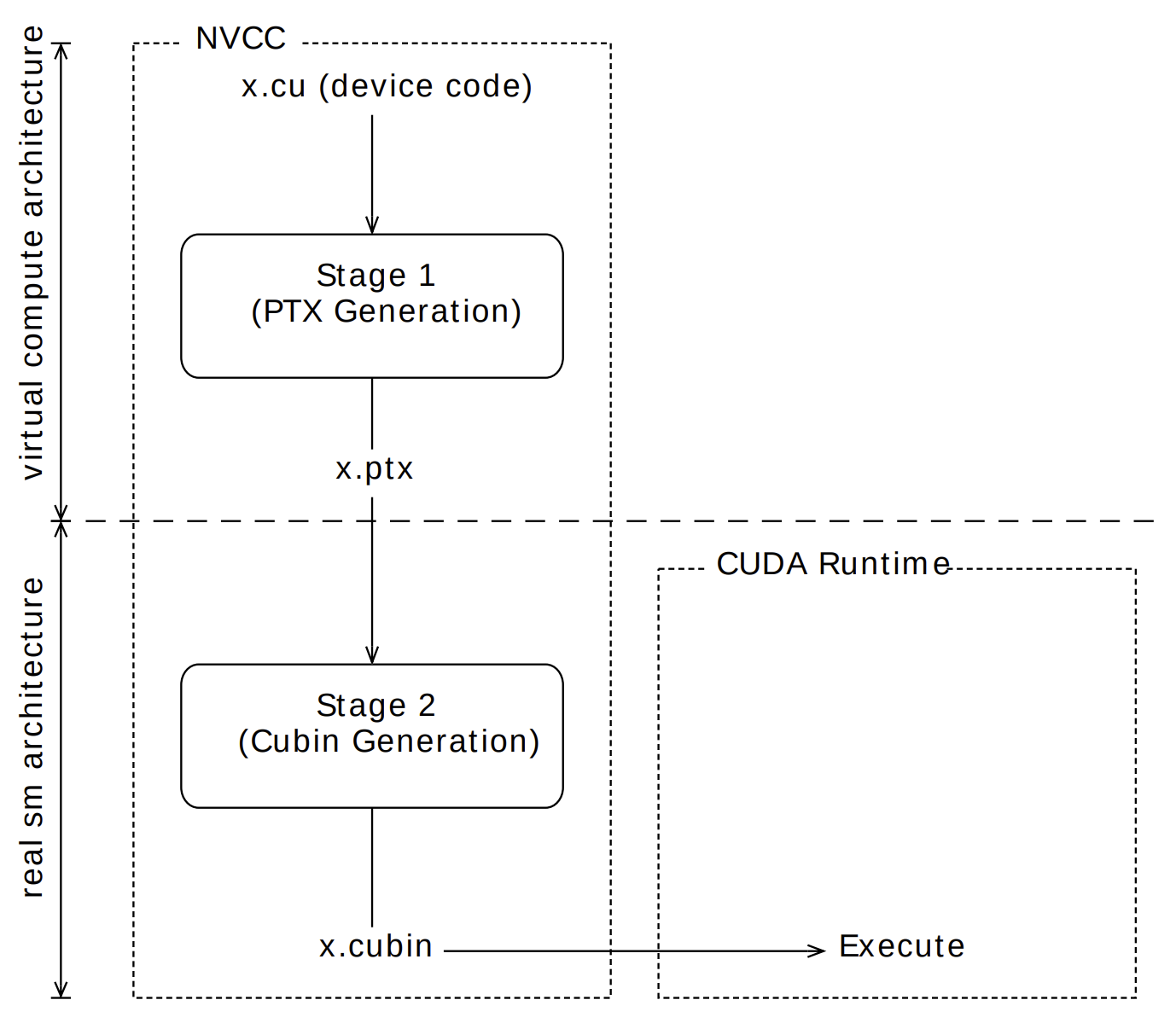

GPU compilation is performed via an intermediate representation, PTX, which can be considered assembly for a virtual GPU architecture. Like assembly for a CPU, PTX is represented in text format.

The virtual GPU architecture can be specified via the --gpu-architecture (-arch) option of the NVCC compiler. A virtual GPU architecture specification, such as compute_53, always starts with compute_. Usually, we specify only one virtual GPU architecture for a CUDA program. However, the NVCC compiler also supports compiling against multiple virtual GPU architectures via --generate-code (-gencode).

PTX generated for a specific virtual GPU architecture is usually forward compatible. That is to say, the PTX generated with compute_53 can be further compiled for real GPUs with compute capability 5.3 or higher, but not for older ones. The resulting code might be less optimized than code compiled from PTX for a newer virtual architecture, because compute_53 PTX cannot use the features and optimizations introduced in later architectures.

CUBIN

CUBIN is the CUDA device code binary file for a single real GPU architecture. The real GPU can be specified via the --gpu-code (-code) option of the NVCC compiler. A real GPU specification, such as sm_53, always starts with sm_. Multiple real architectures can be specified for one virtual GPU architecture. Unlike PTX, a CUBIN is only compatible within a single GPU generation: a CUBIN generated for compute capability X.y runs only on GPUs of compute capability X.z with z ≥ y, not on GPUs with an older minor revision or of another generation. For example, the CUBIN generated with sm_80 can run on sm_80 and sm_86 GPUs, but not on sm_75 or sm_90 GPUs. Running the CUBIN generated with sm_80 on sm_86 GPUs might be slower than running the CUBIN generated with sm_86 on the same GPUs, though. Note that this binary compatibility applies to desktop GPUs; NVIDIA does not guarantee it for Tegra devices.

Generating CUBIN files is also optional during NVCC compilation. If no real GPU is specified during NVCC compilation, or if none of the embedded CUBINs is compatible with the GPU executing the code (for example, because that GPU belongs to a newer generation), the CUDA device code is just-in-time (JIT) compiled from the PTX by the CUDA driver for the executing device when the program is loaded.

FATBIN

Because there could be multiple PTX files and, optionally, multiple CUBIN files, NVCC merges them into a single CUDA fat binary file, FATBIN. The FATBIN is ultimately embedded into the host binary compiled by the C++ host compiler.

If the PTX is not included in the FATBIN (for example, when --gpu-code lists only real GPUs such as sm_80), JIT compilation is no longer possible. The compiled binary can then only run on the real GPUs specified during NVCC compilation and on the other GPUs of the same generation that are binary compatible with them.

CUDA Compilation

CUDA Compilation Trajectory

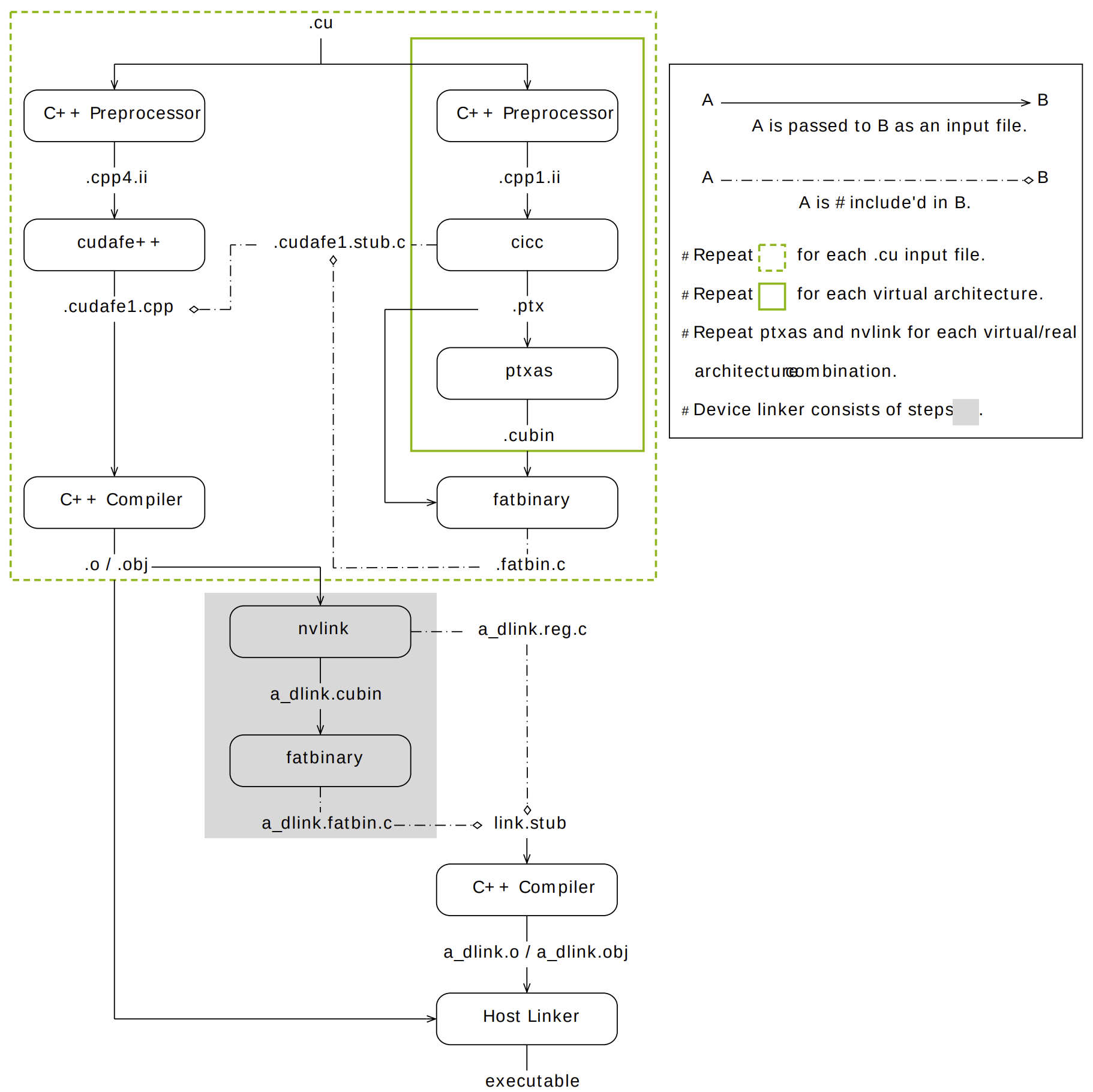

CUDA compilation works as follows: the input program is preprocessed for device compilation and compiled to CUDA binary (CUBIN) and/or PTX intermediate code, which are placed in a fat binary (FATBIN). The input program is then preprocessed once again for host compilation and synthesized to embed the FATBIN and to transform CUDA-specific C++ extensions into standard C++ constructs. Finally, the C++ host compiler compiles the synthesized host code, with the embedded FATBIN, into a host object.

Device Code Compilation

As described in the CUDA Key Compilation Files section, device code compilation consists of generating PTX for a specific virtual architecture, followed by generating CUBIN for a specific real GPU.

Example

Let’s try to compile Mark Harris’s CUDA example code with different configurations.

```shell
$ nvcc shared-memory.cu -o shared-memory --gpu-architecture=compute_52
```

We could see that as we added more real GPUs, the compiled binary became larger. The only exception was when we didn't specify any real GPU: since only PTX code would be generated, we should expect the compiled binary to be the smallest. However, it was much larger than any of the other compiled binaries. It is currently unclear to me why this is the case.
