CUDA Kernel Execution Overlap

Introduction

In my previous blog post “CUDA Stream”, I discussed how CUDA streams help CUDA programs achieve concurrency. At the end of that article, I also mentioned that, in addition to overlap between memory transfers and kernel executions, the executions of different kernels are also allowed to overlap. However, many CUDA programmers wonder why they have never encountered kernel execution overlap in practice.

In this blog post, I would like to discuss CUDA kernel execution overlap and why we may or may not observe it in practice.

CUDA Kernel Execution Overlap

Computation Resources

CUDA kernel executions can overlap if there are sufficient computation resources to run multiple kernels in parallel.

In the following example, as we increase blocks_per_grid from small to large, the kernel executions from different CUDA streams change from full parallelization, to partial parallelization, and finally to almost no parallelization. This is because the more computation resources (for example, streaming multiprocessors) one kernel launch occupies, the fewer resources remain for other kernels to run concurrently.

```shell
$ nvcc overlap.cu -o overlap
```
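A minimal sketch of this kind of experiment follows. It is not the author's overlap.cu and did not produce the figures in this post; it assumes a hypothetical kernel busy_kernel that does a fixed amount of dummy arithmetic over an array with a grid-stride loop, eight streams, 256 threads per block, and arbitrary problem sizes. Because the work per launch is fixed, a larger grid finishes each launch sooner, but only while the GPU has free resources to run it.

```cuda
// overlap_sketch.cu: hypothetical illustration, not the author's overlap.cu.
// Build: nvcc overlap_sketch.cu -o overlap_sketch
#include <chrono>
#include <cstdio>
#include <cstdlib>
#include <vector>

#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK_CUDA(call)                                                 \
    do                                                                   \
    {                                                                    \
        cudaError_t const err = (call);                                  \
        if (err != cudaSuccess)                                          \
        {                                                                \
            std::fprintf(stderr, "CUDA error: %s (%s:%d)\n",             \
                         cudaGetErrorString(err), __FILE__, __LINE__);   \
            std::exit(EXIT_FAILURE);                                     \
        }                                                                \
    } while (0)

// Fixed amount of work per launch: a grid-stride loop over n elements.
__global__ void busy_kernel(float* data, int n, int iterations)
{
    int const stride = blockDim.x * gridDim.x;
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n; i += stride)
    {
        float x = data[i];
        for (int k = 0; k < iterations; ++k)
        {
            x = x * 0.999f + 0.001f;  // dummy arithmetic to keep the SMs busy
        }
        data[i] = x;
    }
}

// Launch one busy_kernel per stream, each on its own buffer, and return the
// wall-clock time in milliseconds until all of them have finished.
float run_experiment(int num_streams, int blocks_per_grid,
                     int threads_per_block, int n, int iterations)
{
    float* d_data = nullptr;
    CHECK_CUDA(cudaMalloc(&d_data, sizeof(float) * n * num_streams));
    CHECK_CUDA(cudaMemset(d_data, 0, sizeof(float) * n * num_streams));

    std::vector<cudaStream_t> streams(num_streams);
    for (cudaStream_t& stream : streams)
    {
        CHECK_CUDA(cudaStreamCreate(&stream));
    }

    CHECK_CUDA(cudaDeviceSynchronize());
    auto const start = std::chrono::steady_clock::now();
    for (int s = 0; s < num_streams; ++s)
    {
        // Each launch goes to a different non-default stream, so the kernels
        // are allowed, but not guaranteed, to run concurrently.
        busy_kernel<<<blocks_per_grid, threads_per_block, 0, streams[s]>>>(
            d_data + static_cast<size_t>(s) * n, n, iterations);
    }
    CHECK_CUDA(cudaGetLastError());
    CHECK_CUDA(cudaDeviceSynchronize());
    auto const end = std::chrono::steady_clock::now();

    for (cudaStream_t stream : streams)
    {
        CHECK_CUDA(cudaStreamDestroy(stream));
    }
    CHECK_CUDA(cudaFree(d_data));
    return std::chrono::duration<float, std::milli>(end - start).count();
}

int main()
{
    // Stream count, block size, n and iterations are arbitrary assumptions.
    int const num_streams = 8;
    int const threads_per_block = 256;
    int const n = 1 << 20;
    int const iterations = 1000;
    for (int const blocks_per_grid : {1, 32, 5120})
    {
        float const ms = run_experiment(num_streams, blocks_per_grid,
                                        threads_per_block, n, iterations);
        std::printf("blocks_per_grid = %4d: %.2f ms\n", blocks_per_grid, ms);
    }
    return 0;
}
```

Running the binary under a timeline profiler such as Nsight Systems (for example, `nsys profile ./overlap_sketch`) should show the kernels from different streams overlapping less as blocks_per_grid grows, although the exact picture depends on the GPU.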

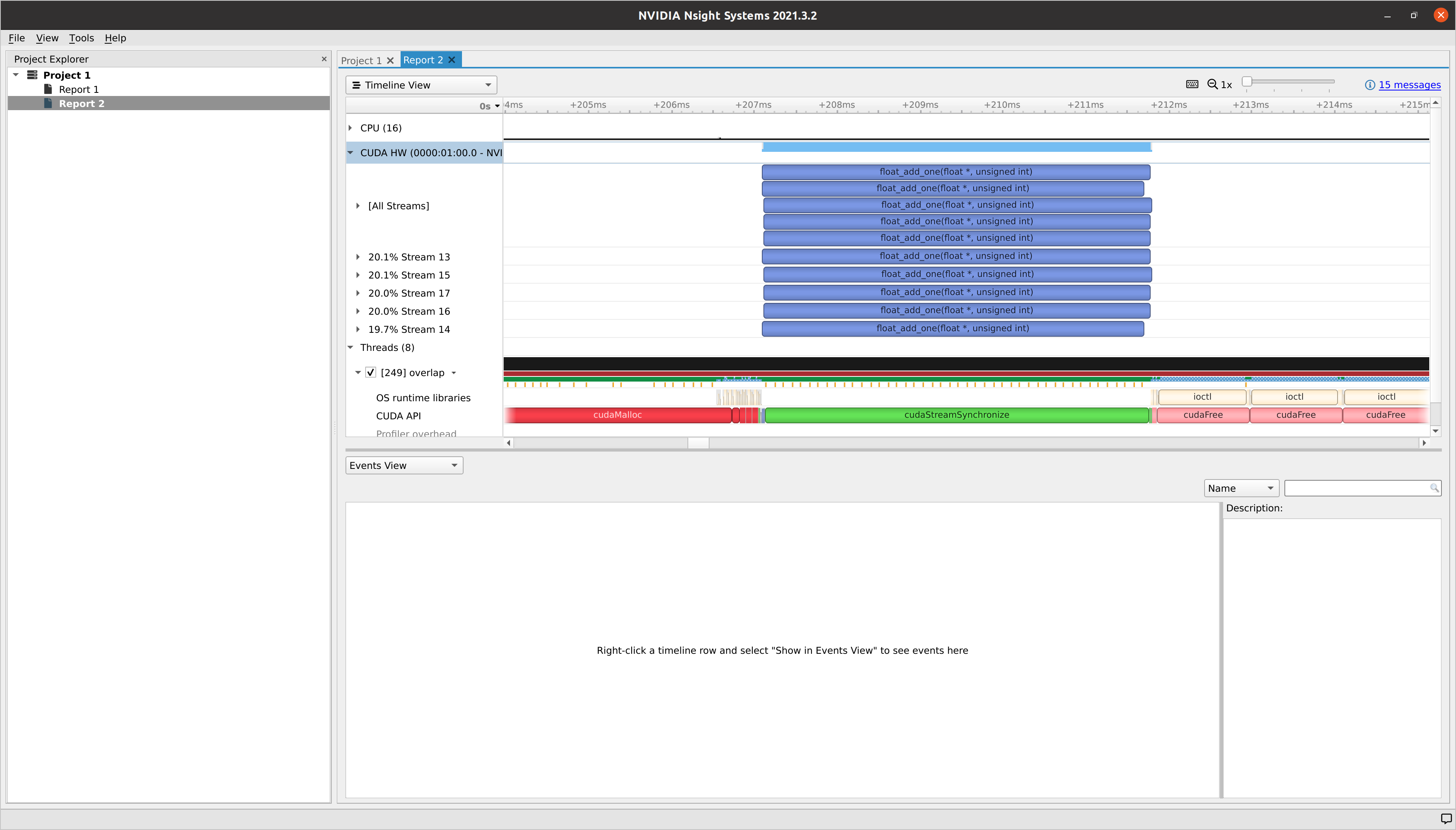

We observed full parallelization for blocks_per_grid = 1. However, the time spent finishing all the kernels was long because the GPU was not fully utilized.

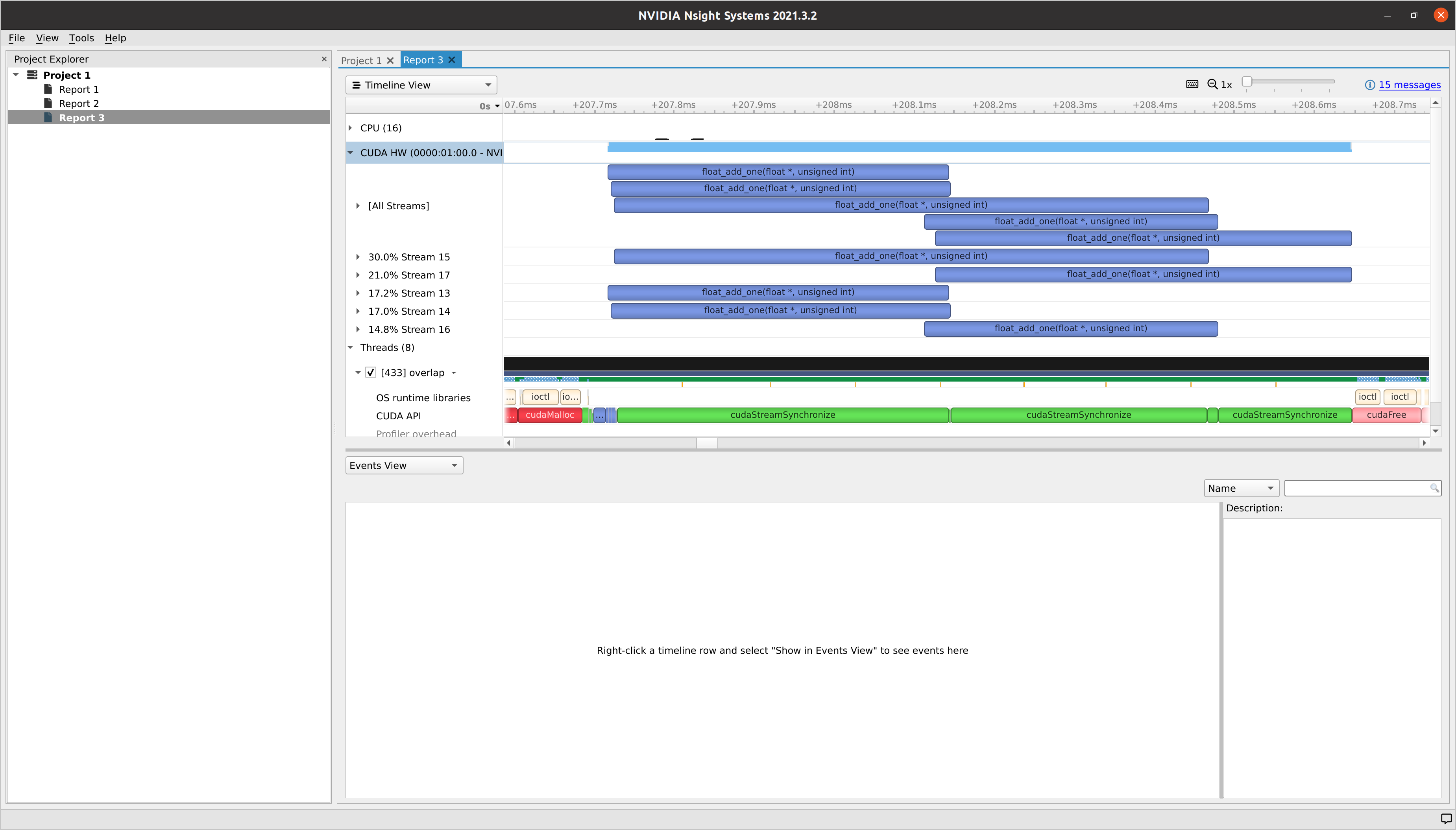

When we set blocks_per_grid = 32, only some of the kernel executions were parallelized. However, the GPU was fully utilized, and the time spent finishing all the kernels was much less than with blocks_per_grid = 1.

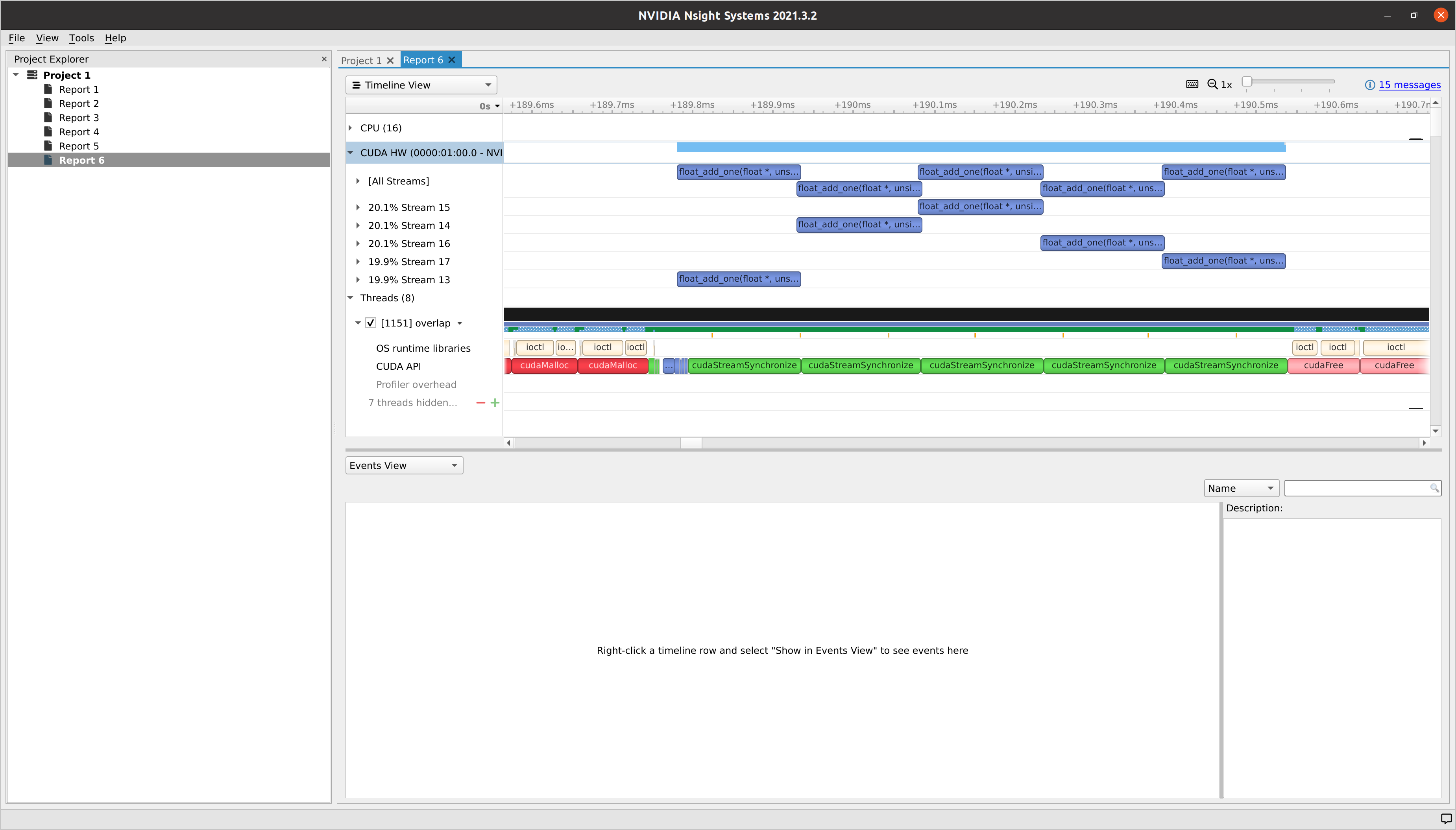

When we set blocks_per_grid = 5120, almost none of the kernel executions were parallelized. However, as with blocks_per_grid = 32, the GPU was still fully utilized, and the time spent finishing all the kernels was much less than with blocks_per_grid = 1.
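To make the resource argument concrete, one can estimate how many blocks of a kernel the whole GPU can hold at once, using cudaOccupancyMaxActiveBlocksPerMultiprocessor and cudaDeviceProp::multiProcessorCount, and divide that by blocks_per_grid. The sketch below assumes a hypothetical busy_kernel, 256 threads per block and no dynamic shared memory; the helper max_co_resident_launches is illustrative. It ignores the per-device limit on resident grids and the hardware's scheduling order, so it is only an upper bound.

```cuda
// occupancy_sketch.cu: hypothetical back-of-the-envelope estimate.
// Build: nvcc occupancy_sketch.cu -o occupancy_sketch
#include <cstdio>
#include <initializer_list>

#include <cuda_runtime.h>

// Fixed-work kernel with a grid-stride loop (hypothetical).
__global__ void busy_kernel(float* data, int n, int iterations)
{
    int const stride = blockDim.x * gridDim.x;
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n; i += stride)
    {
        float x = data[i];
        for (int k = 0; k < iterations; ++k)
        {
            x = x * 0.999f + 0.001f;
        }
        data[i] = x;
    }
}

// Upper bound on how many launches of blocks_per_grid blocks each can have
// all of their blocks resident at the same time. A launch larger than the
// whole GPU still counts as 1: it can at most overlap others at its tail.
int max_co_resident_launches(int resident_block_capacity, int blocks_per_grid)
{
    int const fits = resident_block_capacity / blocks_per_grid;
    return fits > 0 ? fits : 1;
}

int main()
{
    int const threads_per_block = 256;  // assumption

    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess)
    {
        return 1;
    }

    // Blocks of busy_kernel one SM can hold at once, limited by registers,
    // shared memory, and the per-SM thread and block limits.
    int blocks_per_sm = 0;
    if (cudaOccupancyMaxActiveBlocksPerMultiprocessor(
            &blocks_per_sm, busy_kernel, threads_per_block, 0) != cudaSuccess)
    {
        return 1;
    }

    int const capacity = blocks_per_sm * prop.multiProcessorCount;
    std::printf("%s: %d SMs x %d blocks/SM = %d resident blocks "
                "(concurrentKernels = %d)\n",
                prop.name, prop.multiProcessorCount, blocks_per_sm, capacity,
                prop.concurrentKernels);

    for (int const blocks_per_grid : {1, 32, 5120})
    {
        std::printf("blocks_per_grid = %4d: at most %d launches resident\n",
                    blocks_per_grid,
                    max_co_resident_launches(capacity, blocks_per_grid));
    }
    return 0;
}
```

For example, on a hypothetical GPU that can hold 1280 resident blocks, max_co_resident_launches returns 1280, 40 and 1 for blocks_per_grid of 1, 32 and 5120: tiny grids leave room for many concurrent kernels, while a 5120-block grid fills the GPU by itself.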

Implicit Synchronization

It is also possible that kernel executions do not overlap even when there are sufficient computation resources. This can happen when the host thread issues CUDA commands to the default stream in between commands issued to other streams, which causes implicit synchronization.
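A minimal single-threaded sketch of this situation, assuming a hypothetical spin_kernel that busy-waits on clock64(): a launch into the default stream sits between launches into two created streams. With the default (legacy) build, the three kernels should run one after another; built with --default-stream per-thread, they may overlap, so the elapsed time should be noticeably shorter. File and function names are illustrative.

```cuda
// implicit_sync_sketch.cu: hypothetical example. Build twice and compare:
//   nvcc implicit_sync_sketch.cu -o legacy
//   nvcc --default-stream per-thread implicit_sync_sketch.cu -o per_thread
#include <chrono>
#include <cstdio>
#include <cstdlib>

#include <cuda_runtime.h>

#define CHECK_CUDA(call)                                                 \
    do                                                                   \
    {                                                                    \
        cudaError_t const err = (call);                                  \
        if (err != cudaSuccess)                                          \
        {                                                                \
            std::fprintf(stderr, "CUDA error: %s (%s:%d)\n",             \
                         cudaGetErrorString(err), __FILE__, __LINE__);   \
            std::exit(EXIT_FAILURE);                                     \
        }                                                                \
    } while (0)

// Keeps one GPU thread busy for roughly `cycles` clock cycles.
__global__ void spin_kernel(long long cycles)
{
    long long const start = clock64();
    while (clock64() - start < cycles)
    {
    }
}

// Launches stream1 -> default stream -> stream2; returns elapsed ms.
float run_three_launches(long long cycles)
{
    cudaStream_t stream1;
    cudaStream_t stream2;
    CHECK_CUDA(cudaStreamCreate(&stream1));
    CHECK_CUDA(cudaStreamCreate(&stream2));
    CHECK_CUDA(cudaDeviceSynchronize());

    auto const start = std::chrono::steady_clock::now();
    spin_kernel<<<1, 1, 0, stream1>>>(cycles);
    // No stream argument: this goes to the default stream. With the legacy
    // default stream it waits for stream1's kernel, and stream2's kernel then
    // waits for it. With the per-thread default stream it behaves like an
    // ordinary stream, so all three kernels may overlap.
    spin_kernel<<<1, 1>>>(cycles);
    spin_kernel<<<1, 1, 0, stream2>>>(cycles);
    CHECK_CUDA(cudaGetLastError());
    CHECK_CUDA(cudaDeviceSynchronize());
    auto const end = std::chrono::steady_clock::now();

    CHECK_CUDA(cudaStreamDestroy(stream1));
    CHECK_CUDA(cudaStreamDestroy(stream2));
    return std::chrono::duration<float, std::milli>(end - start).count();
}

int main()
{
    long long const cycles = 100000000LL;  // arbitrary spin length
    std::printf("elapsed: %.2f ms\n", run_three_launches(cycles));
    return 0;
}
```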

In my opinion, this rarely happens in single-threaded CUDA programs because of the way CUDA programmers usually write them. However, it is very likely to happen in multi-threaded CUDA programs, because all host threads share the same legacy default stream. To address this, CUDA 7 introduced a per-thread default stream compilation mode. To disable this implicit synchronization, the user only needs to add --default-stream per-thread to the NVCC build flags, without changing the existing CUDA program. For more details on simplifying CUDA concurrency with the per-thread default stream, please read Mark Harris’s blog post.
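A minimal multi-threaded sketch, assuming the same hypothetical spin_kernel and four host threads that each launch one kernel without a stream argument. With the legacy default stream, all threads launch into one shared stream and the kernels serialize; with --default-stream per-thread, each thread launches into its own default stream and the kernels may overlap, with no change to the source.

```cuda
// multithread_sketch.cu: hypothetical example. Build twice and compare:
//   nvcc multithread_sketch.cu -o legacy
//   nvcc --default-stream per-thread multithread_sketch.cu -o per_thread
// (Some toolchains may also need -Xcompiler -pthread for std::thread.)
#include <chrono>
#include <cstdio>
#include <cstdlib>
#include <thread>
#include <vector>

#include <cuda_runtime.h>

#define CHECK_CUDA(call)                                                 \
    do                                                                   \
    {                                                                    \
        cudaError_t const err = (call);                                  \
        if (err != cudaSuccess)                                          \
        {                                                                \
            std::fprintf(stderr, "CUDA error: %s (%s:%d)\n",             \
                         cudaGetErrorString(err), __FILE__, __LINE__);   \
            std::exit(EXIT_FAILURE);                                     \
        }                                                                \
    } while (0)

// Keeps one GPU thread busy for roughly `cycles` clock cycles.
__global__ void spin_kernel(long long cycles)
{
    long long const start = clock64();
    while (clock64() - start < cycles)
    {
    }
}

// Each host thread launches into "the" default stream (no stream argument).
// Legacy: all threads share one stream, so launches are serialized.
// Per-thread: each host thread has its own default stream.
void worker(long long cycles)
{
    spin_kernel<<<1, 1>>>(cycles);
    CHECK_CUDA(cudaGetLastError());  // error state is per host thread
}

// Runs num_threads workers and returns the elapsed wall-clock time in ms.
float run_threads(int num_threads, long long cycles)
{
    CHECK_CUDA(cudaDeviceSynchronize());  // also initializes the context
    auto const start = std::chrono::steady_clock::now();
    std::vector<std::thread> threads;
    for (int t = 0; t < num_threads; ++t)
    {
        threads.emplace_back(worker, cycles);
    }
    for (std::thread& thread : threads)
    {
        thread.join();
    }
    CHECK_CUDA(cudaDeviceSynchronize());  // waits for work in every stream
    auto const end = std::chrono::steady_clock::now();
    return std::chrono::duration<float, std::milli>(end - start).count();
}

int main()
{
    int const num_threads = 4;             // assumption
    long long const cycles = 100000000LL;  // arbitrary spin length
    std::printf("%d threads: %.2f ms\n", num_threads,
                run_threads(num_threads, cycles));
    return 0;
}
```

The per_thread binary should finish noticeably sooner than the legacy one, since its kernels are no longer queued behind one another in a single stream.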

As of CUDA 11.4, the default value of this flag is still legacy, so the user has to change it to per-thread manually in order to use the per-thread default stream. From the CUDA 11.4 NVCC help:

```
--default-stream {legacy|null|per-thread} (-default-stream)
```
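If changing the build flag is not an option, the CUDA runtime also exposes the per-thread default stream through the special handle cudaStreamPerThread, which can be passed explicitly to individual launches even in a legacy build. A minimal sketch, assuming the same hypothetical spin_kernel and a single created stream:

```cuda
// per_thread_handle_sketch.cu: hypothetical example, built WITHOUT the flag:
//   nvcc per_thread_handle_sketch.cu -o per_thread_handle
#include <chrono>
#include <cstdio>
#include <cstdlib>

#include <cuda_runtime.h>

#define CHECK_CUDA(call)                                                 \
    do                                                                   \
    {                                                                    \
        cudaError_t const err = (call);                                  \
        if (err != cudaSuccess)                                          \
        {                                                                \
            std::fprintf(stderr, "CUDA error: %s (%s:%d)\n",             \
                         cudaGetErrorString(err), __FILE__, __LINE__);   \
            std::exit(EXIT_FAILURE);                                     \
        }                                                                \
    } while (0)

// Keeps one GPU thread busy for roughly `cycles` clock cycles.
__global__ void spin_kernel(long long cycles)
{
    long long const start = clock64();
    while (clock64() - start < cycles)
    {
    }
}

// Launches into stream1 and into cudaStreamPerThread; returns elapsed ms.
float run_with_explicit_handle(long long cycles)
{
    cudaStream_t stream1;
    CHECK_CUDA(cudaStreamCreate(&stream1));
    CHECK_CUDA(cudaDeviceSynchronize());

    auto const start = std::chrono::steady_clock::now();
    spin_kernel<<<1, 1, 0, stream1>>>(cycles);
    // cudaStreamPerThread names the calling thread's per-thread default
    // stream. Unlike stream 0 in a legacy build, it does not implicitly
    // synchronize with stream1, so the two kernels may overlap.
    spin_kernel<<<1, 1, 0, cudaStreamPerThread>>>(cycles);
    CHECK_CUDA(cudaGetLastError());
    CHECK_CUDA(cudaStreamSynchronize(cudaStreamPerThread));
    CHECK_CUDA(cudaStreamSynchronize(stream1));
    auto const end = std::chrono::steady_clock::now();

    CHECK_CUDA(cudaStreamDestroy(stream1));
    return std::chrono::duration<float, std::milli>(end - start).count();
}

int main()
{
    long long const cycles = 100000000LL;  // arbitrary spin length
    std::printf("elapsed: %.2f ms\n", run_with_explicit_handle(cycles));
    return 0;
}
```

This roughly mirrors what --default-stream per-thread does implicitly for launches without a stream argument; the flag simply makes it the default for the code it compiles.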

Conclusions

If there is no implicit synchronization from the default CUDA stream, partial or no CUDA kernel execution parallelization usually indicates high GPU utilization, whereas full CUDA kernel execution parallelization usually indicates that the GPU might not have been fully utilized.

If the lack of CUDA kernel execution overlap is due to implicit synchronization from the default CUDA stream, we should consider removing it by enabling the per-thread default stream.

References

CUDA Kernel Execution Overlap

https://leimao.github.io/blog/CUDA-Kernel-Execution-Overlap/