CUDA Shared Memory Swizzling

Introduction

When we write CUDA kernels that use shared memory, we have to be careful about shared memory bank conflicts. Having severe shared memory bank conflicts can introduce a significant performance penalty.

One simple way to deal with shared memory bank conflicts is to use padding. However, padding can waste shared memory and can have other drawbacks.

In this blog post, I would like to discuss how to deal with shared memory bank conflicts using swizzling. Swizzling is a more complicated technique that can be used to avoid shared memory bank conflicts without wasting shared memory.

CUDA Shared Memory Swizzling

Swizzling Example

When we use CUDA shared memory to cache data without using padding, it’s very common that either reading from or writing to shared memory by a warp can cause shared memory bank conflicts. Swizzling is a technique that rearranges the mapping of the shared memory index to avoid shared memory bank conflicts. Matrix transpose is a perfect example that can have shared memory bank conflicts if the implementation does not use padding or swizzling.

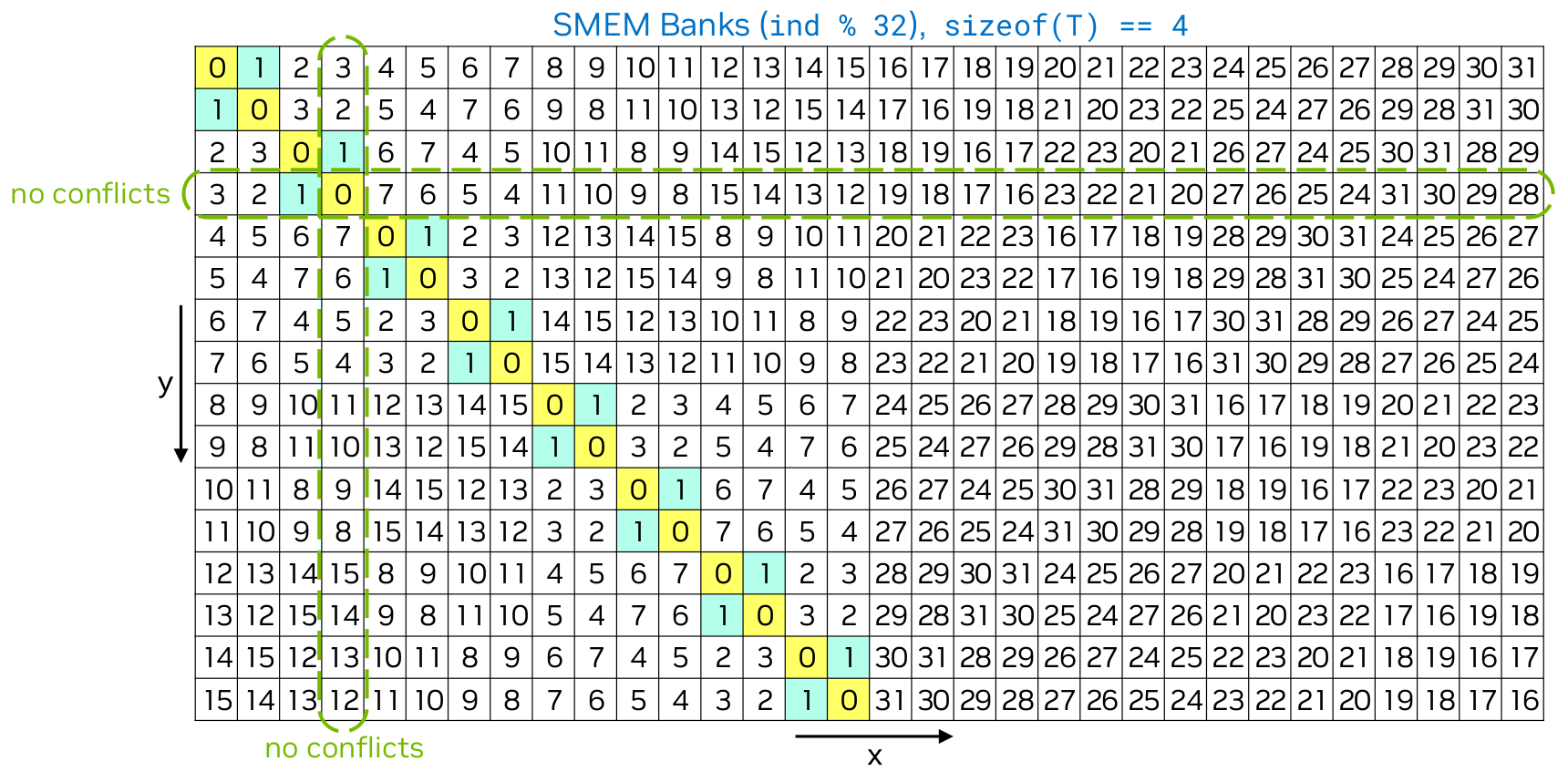

In the above example, the shared memory is a 2D array of float with size 32 × 16. For the matrix transpose, each warp reads a row of 32 values from global memory and writes them to shared memory with swizzling. There are no shared memory bank conflicts when writing to shared memory. To perform the transpose, each warp then reads two swizzled “columns” of 32 values from shared memory and writes them to global memory. For example, swizzled columns 0 and 1 are colored in yellow and cyan, respectively. In this way, there is only one shared memory bank conflict when reading from shared memory. Without swizzling, there would be 16 (2-way) shared memory bank conflicts when reading from shared memory. If the shared memory were instead a 2D array of float with size 32 × 32, swizzling would leave no shared memory bank conflicts at all, neither when writing to nor when reading from shared memory.
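To make the 32 × 32 case concrete, here is a minimal host-side C++ sketch that counts how many threads of a warp land in the same bank. It assumes 32 banks that are each 4 bytes wide, and it assumes that element `[y][x]` is stored at `[y][y ^ x]`, which is what the formula in the next section reduces to for float elements and 4-byte chunks. The function names are hypothetical and only serve this illustration.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

// Assumptions: 32 banks of 4 bytes each, 32 threads per warp, and a
// 32 x 32 float tile, so the float at linear index i lives in bank i % 32.
constexpr int kNumBanks = 32;
constexpr int kWarpSize = 32;
constexpr int kTileDim = 32;

// Largest number of threads in one warp that hit the same bank when thread
// t accesses the float at linear_index_of_thread(t).
// A result of 1 means the access is free of bank conflicts.
template <typename IndexFn>
int max_threads_per_bank(IndexFn linear_index_of_thread)
{
    std::array<int, kNumBanks> hits{};
    for (int t = 0; t < kWarpSize; ++t)
    {
        const int index = linear_index_of_thread(t);
        assert(index >= 0);
        ++hits[index % kNumBanks];
    }
    return *std::max_element(hits.begin(), hits.end());
}

// Column read without swizzling: thread t reads tile[t][col].
int column_read_plain(int col)
{
    return max_threads_per_bank([col](int t) { return t * kTileDim + col; });
}

// Column read with XOR swizzling: logical tile[t][col] is stored at
// tile[t][t ^ col].
int column_read_swizzled(int col)
{
    return max_threads_per_bank(
        [col](int t) { return t * kTileDim + (t ^ col); });
}

// Row write with XOR swizzling: thread t writes logical tile[row][t], which
// is stored at tile[row][row ^ t].
int row_write_swizzled(int row)
{
    return max_threads_per_bank(
        [row](int t) { return row * kTileDim + (row ^ t); });
}
```

Under these assumptions, `column_read_plain(0)` returns 32 because every thread hits bank 0, while `column_read_swizzled(col)` and `row_write_swizzled(row)` return 1 for any column or row in [0, 32).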

Swizzling Formula

Given an array T array[][NX] in shared memory, we define SWIZZLE_SIZE = NX × sizeof(T). The allowed values of SWIZZLE_SIZE are powers of 2 that are larger than or equal to 32, such as 32, 64, 128, 256, and so on.

Given the index [y][x] in T array[][NX], we can compute the swizzled index x_swz as follows:

- Compute the index of the TC-byte chunk within the SWIZZLE_SIZE-byte segment, where sizeof(TC) is the chunk size in bytes:

  `i_chunk = (y × NX + x) × sizeof(T) / sizeof(TC)`

  `y_chunk = i_chunk / (SWIZZLE_SIZE / sizeof(TC))`

  `x_chunk = i_chunk % (SWIZZLE_SIZE / sizeof(TC))`

- Compute the swizzled index of the TC-byte chunk using the XOR operation:

  `x_chunk_swz = y_chunk ^ x_chunk`

- Compute the swizzled index:

  `x_swz = x_chunk_swz × sizeof(TC) / sizeof(T) % NX + x % (sizeof(TC) / sizeof(T))`

All divisions here are integer divisions.
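The steps above translate almost line by line into code. The following is a minimal C++ sketch, not a definitive implementation: the function name `swizzle_x` is hypothetical, and the chunk type TC is represented only by its size in bytes (`ChunkBytes`) so that the snippet compiles without CUDA vector types such as `float4`.

```cpp
#include <cassert>
#include <cstddef>

// T          : element type of the shared memory array T array[][NX].
// NX         : number of elements per row.
// ChunkBytes : sizeof(TC), the chunk size in bytes (assumption: passed as a
//              number instead of a type to keep the sketch host-only).
// Returns the swizzled column index x_swz for the logical index [y][x].
template <typename T, std::size_t NX, std::size_t ChunkBytes>
std::size_t swizzle_x(std::size_t y, std::size_t x)
{
    constexpr std::size_t swizzle_size = NX * sizeof(T);
    static_assert((swizzle_size & (swizzle_size - 1)) == 0 &&
                      swizzle_size >= 32,
                  "SWIZZLE_SIZE must be a power of 2 that is at least 32");
    static_assert(ChunkBytes % sizeof(T) == 0 &&
                      swizzle_size % ChunkBytes == 0,
                  "a chunk must hold whole elements and divide a row");
    assert(x < NX);

    constexpr std::size_t chunks_per_row = swizzle_size / ChunkBytes;
    constexpr std::size_t elems_per_chunk = ChunkBytes / sizeof(T);

    // Step 1: locate the chunk that contains element [y][x].
    const std::size_t i_chunk = (y * NX + x) * sizeof(T) / ChunkBytes;
    const std::size_t y_chunk = i_chunk / chunks_per_row;
    const std::size_t x_chunk = i_chunk % chunks_per_row;

    // Step 2: swizzle the chunk index with XOR.
    const std::size_t x_chunk_swz = y_chunk ^ x_chunk;

    // Step 3: convert back to an element index and restore the offset of
    // the element inside its chunk.
    return x_chunk_swz * elems_per_chunk % NX + x % elems_per_chunk;
}
```

For example, with float elements, NX = 32, and 4-byte chunks, `swizzle_x<float, 32, 4>(3, 5)` evaluates to `3 ^ 5 = 6`. With 16-byte chunks, `swizzle_x<float, 32, 16>(1, 6)` evaluates to 2: element 6 sits in chunk 1 at offset 2, and chunk 1 of row 1 is moved to chunk position 0.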

Swizzling Properties

This swizzling formula has the following properties:

- The index before and after swizzling must be one to one mapped.

- $\text{NX}$ must be a power of 2.

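- Given any $x$ and any $\{y, y+1, y+2, \cdots, y+31\}$, the number of unique swizzled indices $x_{\text{swz}}$ should be maximized.

Property 1 ensures that no data is lost during swizzling. Property 2 ensures that the index before and after swizzling is one-to-one mapped, that is, it is what makes property 1 hold. Property 3 spreads the elements of a column over as many distinct positions as possible, which is what reduces bank conflicts.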

Here I am going to show some informal mathematical proofs for the properties from the swizzling formula.

Proof

We will first prove the property 1.

$$
\begin{align}
x_{\text{chunk}}
&= i_{\text{chunk}} \% (\text{SWIZZLE_SIZE} / \text{sizeof}(\text{TC})) \\
&= \left(\left(y \times \text{NX} + x\right) \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})\right) \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \\
&= \left(y \times \text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) + x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})\right) \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \\
&= \left(y \times \text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) + x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \right) \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \\
&= \left(x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \right) \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \\
&= x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \\
&= \left( x \% \text{NX} \right) \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \\
&= x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})
\end{align}
$$

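This gives a simpler equivalent expression for $x_{\text{chunk}}$: for $0 \le x < \text{NX}$, it depends only on $x$. Note that multiplying by $\text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})$ is an integer division by $\text{sizeof}(\text{TC}) / \text{sizeof}(\text{T})$, which becomes a (right) bit shift when $\text{sizeof}(\text{TC}) / \text{sizeof}(\text{T})$ is a power of 2.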

$$
\begin{align}
y_{\text{chunk}}
&= i_{\text{chunk}} / (\text{SWIZZLE_SIZE} / \text{sizeof}(\text{TC})) \\
&= \left(\left(y \times \text{NX} + x\right) \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})\right) / (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \\
&= \left(y \times \text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) + x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})\right) / (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \\
&= y \times \text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) / (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) + x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) / (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \\
&= y + x / \text{NX} \\
&= y
\end{align}
$$

Similarly, this gives a simpler equivalent expression for $y_{\text{chunk}}$: for $0 \le x < \text{NX}$, it is simply $y$.

$$
\begin{align}
x_{\text{chunk_swz}}
&= y_{\text{chunk}} \oplus x_{\text{chunk}} \\
&= y \oplus \left( x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \right) \\
&= y / (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \times (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) + \left( y \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \right) \oplus \left( x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \right)
\end{align}
$$

Note that $\oplus$ is the bitwise XOR operation. The last step splits $y$ into its high and low bits: because $\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})$ is a power of 2 and $x_{\text{chunk}}$ is smaller than it, the XOR only changes the low bits of $y$. If either $y_{\text{chunk}}$ or $x_{\text{chunk}}$ is held constant, the mapping from the other one to $x_{\text{chunk_swz}}$ is one to one.

$$
\begin{align}
x_{\text{swz}}
&= x_{\text{chunk_swz}} \times \text{sizeof}(\text{TC}) / \text{sizeof}(\text{T}) \% \text{NX} + x \% (\text{sizeof}(\text{TC}) / \text{sizeof}(\text{T}))
\end{align}
$$

Here the proof becomes a little bit informal.

Because each run of $\text{sizeof}(\text{TC}) / \text{sizeof}(\text{T})$ consecutive $x$ values maps to one unique chunk index $x_{\text{chunk}}$, and for a fixed $y$ the mapping between $x_{\text{chunk}}$ and $x_{\text{chunk_swz}}$ is one to one, each $x_{\text{chunk_swz}}$ value maps to one unique $x_{\text{chunk_swz}} \times \text{sizeof}(\text{TC}) / \text{sizeof}(\text{T}) \% \text{NX}$ value, which is the index of the first element of the swizzled chunk. To distinguish the elements within a chunk, the offset $x \% (\text{sizeof}(\text{TC}) / \text{sizeof}(\text{T}))$ is added. Therefore, within each row, the index before and after swizzling is one-to-one mapped.
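Since the argument above is informal, a brute-force check is a useful complement. The sketch below is a hypothetical helper that is not part of the original program: it re-implements the swizzling formula with runtime parameters and tests, row by row, whether $x \mapsto x_{\text{swz}}$ hits every column exactly once. It also shows what goes wrong when property 2 is violated.

```cpp
#include <cassert>
#include <vector>

// Runtime version of the swizzling formula.
// nx = NX, elem_bytes = sizeof(T), chunk_bytes = sizeof(TC).
int swizzle_x(int y, int x, int nx, int elem_bytes, int chunk_bytes)
{
    const int chunks_per_row = nx * elem_bytes / chunk_bytes;
    const int elems_per_chunk = chunk_bytes / elem_bytes;
    const int i_chunk = (y * nx + x) * elem_bytes / chunk_bytes;
    const int y_chunk = i_chunk / chunks_per_row;
    const int x_chunk = i_chunk % chunks_per_row;
    return (y_chunk ^ x_chunk) * elems_per_chunk % nx + x % elems_per_chunk;
}

// Returns true if, for every row y in [0, ny), the map x -> x_swz visits
// each of the nx columns exactly once (property 1).
bool is_one_to_one(int ny, int nx, int elem_bytes, int chunk_bytes)
{
    assert(chunk_bytes % elem_bytes == 0);
    for (int y = 0; y < ny; ++y)
    {
        std::vector<bool> seen(nx, false);
        for (int x = 0; x < nx; ++x)
        {
            const int x_swz = swizzle_x(y, x, nx, elem_bytes, chunk_bytes);
            if (x_swz < 0 || x_swz >= nx || seen[x_swz])
            {
                return false; // out of range, or two x share one slot
            }
            seen[x_swz] = true;
        }
    }
    return true;
}
```

For 32 floats per row, `is_one_to_one(64, 32, 4, 4)` and `is_one_to_one(64, 32, 4, 16)` both return true. With NX = 24, which is not a power of 2, `is_one_to_one(16, 24, 4, 4)` returns false: in row 8, both x = 8 and x = 16 map to column 0.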

The property 2 is trivial to show.

The property 3 might be somewhat easier to see given the following expression for $x_{\text{swz}}$.

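$$
\begin{align}
x_{\text{swz}}
&= x_{\text{chunk_swz}} \times \text{sizeof}(\text{TC}) / \text{sizeof}(\text{T}) \% \text{NX} + x \% (\text{sizeof}(\text{TC}) / \text{sizeof}(\text{T})) \\
&= \left( y \oplus \left( x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \right) \right) \times \text{sizeof}(\text{TC}) / \text{sizeof}(\text{T}) \% \text{NX} + x \% (\text{sizeof}(\text{TC}) / \text{sizeof}(\text{T})) \\
&= \left( \left( y \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})) \right) \oplus \left( x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \right) \right) \times \text{sizeof}(\text{TC}) / \text{sizeof}(\text{T}) + x \% (\text{sizeof}(\text{TC}) / \text{sizeof}(\text{T}))
\end{align}
$$

The second line uses $x_{\text{chunk_swz}} = y \oplus \left( x \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}) \right)$. In the last step, the high bits of $y$ contribute a multiple of $\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})$ to $x_{\text{chunk_swz}}$, which becomes a multiple of $\text{NX}$ after the multiplication by $\text{sizeof}(\text{TC}) / \text{sizeof}(\text{T})$ and vanishes under $\% \text{NX}$. What remains is smaller than $\text{NX}$, so the $\% \text{NX}$ can be dropped. When $\text{sizeof}(\text{TC}) = \text{sizeof}(\text{T})$, this is simply $x_{\text{swz}} = \left( y \% \text{NX} \right) \oplus x$.

Given any $x$ and any $\{y, y+1, y+2, \cdots, y+\text{NX}-1\}$, the low bits $y \% (\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC}))$ take every possible value, so the number of unique swizzled indices $x_{\text{swz}}$ is $\text{NX} \times \text{sizeof}(\text{T}) / \text{sizeof}(\text{TC})$, the number of chunks in a row. This is the maximum possible, because the offset within the chunk is fixed by $x$. When $\text{sizeof}(\text{TC}) = \text{sizeof}(\text{T})$, it equals $\text{NX}$.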
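Property 3 can also be checked numerically. The following sketch uses a hypothetical helper, with the swizzling formula re-implemented using runtime parameters, to count the distinct $x_{\text{swz}}$ values that one fixed $x$ produces over a range of consecutive rows.

```cpp
#include <cassert>
#include <set>

// Runtime version of the swizzling formula.
// nx = NX, elem_bytes = sizeof(T), chunk_bytes = sizeof(TC).
int swizzle_x(int y, int x, int nx, int elem_bytes, int chunk_bytes)
{
    const int chunks_per_row = nx * elem_bytes / chunk_bytes;
    const int elems_per_chunk = chunk_bytes / elem_bytes;
    const int i_chunk = (y * nx + x) * elem_bytes / chunk_bytes;
    const int y_chunk = i_chunk / chunks_per_row;
    const int x_chunk = i_chunk % chunks_per_row;
    return (y_chunk ^ x_chunk) * elems_per_chunk % nx + x % elems_per_chunk;
}

// Number of distinct x_swz values produced by a fixed x while y runs over
// the `count` consecutive rows starting at y0.
int unique_swizzled(int x, int y0, int count, int nx, int elem_bytes,
                    int chunk_bytes)
{
    assert(count > 0);
    std::set<int> seen;
    for (int y = y0; y < y0 + count; ++y)
    {
        seen.insert(swizzle_x(y, x, nx, elem_bytes, chunk_bytes));
    }
    return static_cast<int>(seen.size());
}
```

For a row of 32 floats and 4-byte chunks, `unique_swizzled(5, 3, 32, 32, 4, 4)` returns 32, so a column read by one warp touches 32 different column positions. With 16-byte chunks, `unique_swizzled(5, 3, 32, 32, 4, 16)` returns 8, the number of chunks in a row.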

Examples

Matrix Transpose

In this example, we implemented matrix transpose CUDA kernels using shared memory in three different ways:

- Transpose with shared memory bank conflict.

- Transpose without shared memory bank conflict via padding.

- Transpose without shared memory bank conflict via swizzling.
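The index arithmetic of the swizzled variant can be sketched on the host as follows. This is not the kernel benchmarked in this post; it is a plain C++ emulation under several assumptions: float elements with 4-byte chunks (so that $x_{\text{swz}} = y \oplus x$), a 32 × 32 tile, and matrix dimensions that are multiples of 32. The loops over `ty` and `tx` stand in for the threads of a 32 × 32 thread block, and the local `tile` array stands in for the `__shared__` buffer. The name `transpose_swizzled` is hypothetical.

```cpp
#include <cassert>
#include <vector>

constexpr int kTile = 32;

// Transposes a row-major rows x cols matrix into a row-major cols x rows
// matrix, one kTile x kTile tile at a time, through a swizzled buffer.
std::vector<float> transpose_swizzled(const std::vector<float>& in, int rows,
                                      int cols)
{
    assert(rows % kTile == 0 && cols % kTile == 0);
    assert(in.size() == static_cast<std::size_t>(rows) * cols);
    std::vector<float> out(in.size());
    float tile[kTile][kTile]; // stands in for the __shared__ buffer

    for (int by = 0; by < rows; by += kTile)
    {
        for (int bx = 0; bx < cols; bx += kTile)
        {
            // Phase 1: "thread" (ty, tx) stores the logical element
            // [ty][tx] of the tile at the swizzled position [ty][tx ^ ty].
            for (int ty = 0; ty < kTile; ++ty)
            {
                for (int tx = 0; tx < kTile; ++tx)
                {
                    tile[ty][tx ^ ty] = in[(by + ty) * cols + (bx + tx)];
                }
            }
            // A real kernel needs __syncthreads() between the two phases.
            // Phase 2: "thread" (ty, tx) loads the logical element
            // [tx][ty], stored at [tx][ty ^ tx], and writes it transposed.
            for (int ty = 0; ty < kTile; ++ty)
            {
                for (int tx = 0; tx < kTile; ++tx)
                {
                    out[(bx + ty) * rows + (by + tx)] = tile[tx][ty ^ tx];
                }
            }
        }
    }
    return out;
}
```

In phase 2, the threads of one warp share `ty` and differ in `tx`, so they read `tile[tx][ty ^ tx]` from 32 different rows and 32 different columns, which is the access pattern that avoids bank conflicts.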


The program was built and run on a platform that has an Intel i9-9900K CPU and an NVIDIA RTX 3090 GPU.

    $ nvcc transpose.cu -o transpose

The transpose kernel with shared memory bank conflicts has the highest latency, while the kernels that avoid shared memory bank conflicts via padding and via swizzling have the same latency and run 20% faster than the kernel with bank conflicts in this case.

Note that this implementation achieves ~65% of the peak memory bandwidth of an RTX 3090 GPU. The performance can be further improved significantly using vectorized memory access if the implementation assumes the matrix is always padded (and usually allocated using cudaMallocPitch) so that each row will continue to meet the coalescing requirement.

Swizzling vs Padding

Swizzling and padding are two common techniques to deal with shared memory bank conflicts.

The advantage of swizzling is that it does not waste shared memory space. The disadvantage is that it is more complicated to implement and understand because the index mapping is not linear.

The advantage of padding is that it is simple to implement and understand. The disadvantage is that it wastes shared memory space and, if the padding size is not selected carefully, can break the address alignment of the data. This matters especially when we access the data in large chunks using reinterpret_cast, where a misaligned access causes undefined behavior. It usually happens when vectorized memory access is performed on a padded 2D array whose padding accidentally breaks the alignment of the rows.
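The alignment issue is easy to check with a little arithmetic. The sketch below uses a hypothetical helper and assumes that the base address of the array is itself aligned to the access size. It tests whether the first element of row `y` is aligned for a vectorized access of `access_bytes` bytes, for example 16 bytes for a `float4`.

```cpp
#include <cassert>
#include <cstddef>

// Returns true if the first element of row y starts at a byte offset that
// is a multiple of access_bytes.
// Assumption: the array base address is aligned to access_bytes.
bool row_start_is_aligned(std::size_t y, std::size_t row_stride_elems,
                          std::size_t elem_bytes, std::size_t access_bytes)
{
    assert(access_bytes != 0);
    return (y * row_stride_elems * elem_bytes) % access_bytes == 0;
}
```

For a float array padded to 33 elements per row, row 1 starts at byte offset 132, so `row_start_is_aligned(1, 33, 4, 16)` is false and a 16-byte access at the start of that row is misaligned. With 32 elements per row (no padding, as with swizzling) or 36 elements per row, every row start stays 16-byte aligned.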
