CUDA Stream

Introduction

CUDA kernels are powerful because it could help us solve a divisible problem asynchronously by taking advantage of the large collections of CUDA cores on GPU. Here, we use the concept of “threads” for executing the kernels asynchronously. In practice, we have two additional steps, in addition to kernel executions, to solve the entire problem. These two steps are memory copy from host to device and memory copy from device to host. Intuitively, we would copy the input memory from host to device first, then execute the kernel to compute the output, and finally copy the output memory from device back to host. However, this serial approach might not be optimal because we may further improve the performance by doing memory copy from host to device, kernel executions, and memory copy from device to host, concurrently. To do this, we would need to understand the concept of “streams”.

In this blog post, I am going to introduce the concept of CUDA stream and further present a model to improve CUDA program performance by overlapping the memory copy and kernel executions.

Example Models

Example

The simplest CUDA program consists of three steps, including copying the memory from host to device, kernel execution, and copy the memory from device to host. In our particular example, we have the following facts or assumptions:

- The memory copy (host to device, device to host) time is linearly dependent on the size of the memory for copy.

- The GPU would never be fully utilized.

- The kernel execution could be divided into $N$ smaller kernel executions and each smaller kernel execution would only take $1/N$ of the time the original kernel execution takes.

- The memory copy time from host to device, kernel execution, and memory copy time from device to host are the same.

- Each CUDA engine executes commands or kernels in order.

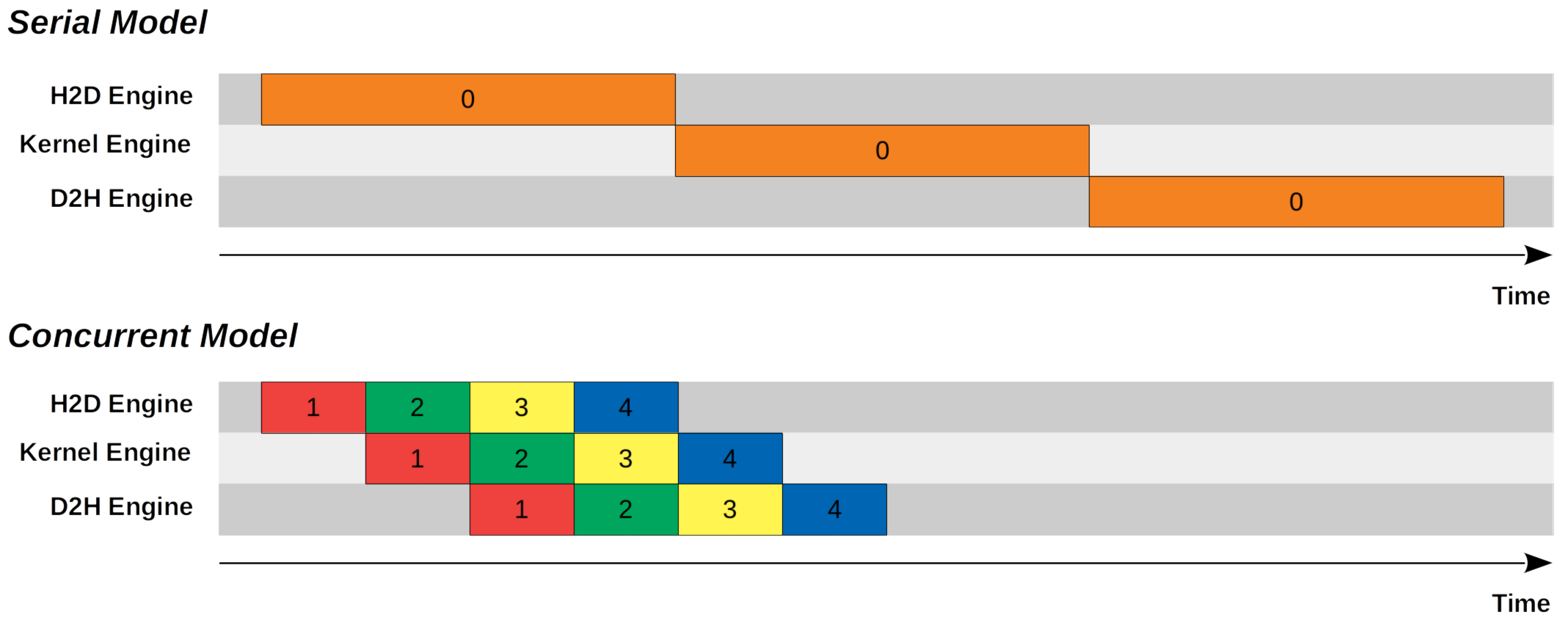

We could come up with two models, including a serial model and a concurrent model, to implement the program.

Serial Model

In the serial model, we first copy the input memory from host to device first, then execute the kernel to compute the output, and finally copy the output memory from device back to host.

Concurrent Model

In the concurrent model, we make memory copy from host to device, kernel executions, and memory copy from device to host, asynchronous. We divided the memory into $N$ trunks. In our particular example above, we set $N = 4$. After finishing copying the first trunk from host to device, we launch the smaller kernel execution to process the first trunk. In the meantime, the host to device (H2D) engine becomes available and it proceeds to copy the second trunk from host to device. Once the first trunk has been processed by the kernel, the output memory would be copied from device to host using the device to host engine (D2H) engine. In the meantime, the host to device (H2D) engine and the kernel engine becomes available and they proceed to copy the third trunk from host to device and process for the second trunk respectively.

From the figure above, we could see that the concurrent model would only take half of the time the serial model would take.

The question then becomes how do we write a CUDA program such that the commands for each of the trunks are executed in order, and different trunks could be executed concurrently. The answer is to use CUDA stream.

CUDA Stream

Definitions

According to the CUDA programming guide, a stream is a sequence of commands (possibly issued by different host threads) that execute in order. Different streams, on the other hand, may execute their commands out of order with respect to one another or concurrently.

This is exactly what we want to implement the concurrent model for our CUDA programs.

Default Stream

According to the CUDA programming guide, kernel launches, host to device memory copy, and device to host memory copy that does not specify any stream parameter, or equivalently that set the stream parameter to zero, are issued to the default stream. It is also called null stream or stream 0. Essentially when we call cudaMemcpy or we do not specify stream when we call cudaMemcpyAsync, we are using the default stream.

With the new features from new CUDA versions, the behavior of the default stream is now dependent on the compilation flag. In the legacy mode, default stream is a synchronizing stream with respect to operations on the device: no operation in the default stream will begin until all previously issued operations in any stream on the device have completed, and an operation in the default stream must complete before any other operation (in any stream on the device) will begin. So it looks like in the legacy mode the default stream is not friendly to concurrent model and we should use non-default streams instead.

Non-Default Stream

The non-default CUDA streams have to be declared, created and eventually destroyed.

1 | cudaStream_t stream[nStreams]; |

To implement the concurrent model, instead of calling cudaMemcpy, we call cudaMemcpyAsync and launch kernel with the stream specified so that they will return to the host thread immediately after call.

1 | for (int i = 0; i < nStreams; i ++) |

For a more concrete example, please check Mark Harris’s example implementation.

Example Models With CUDA Streams

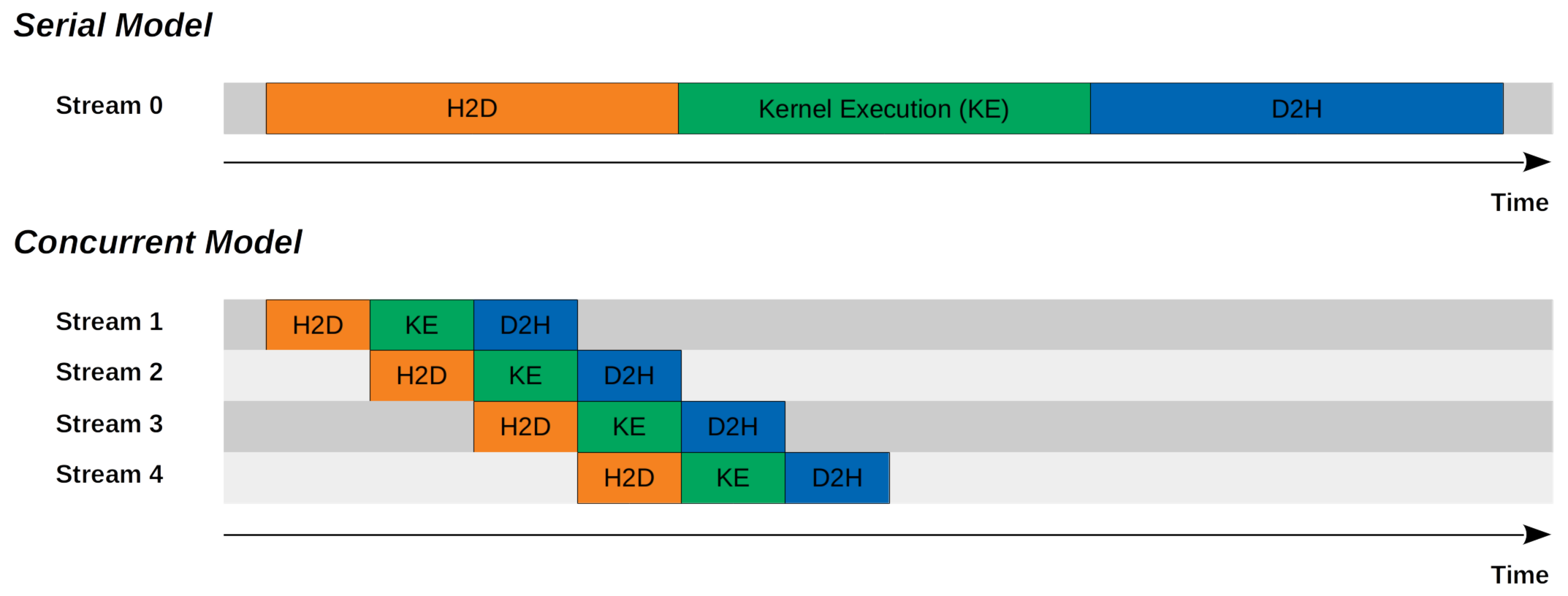

We could also view the serial model and the concurrent model from the stream’s perspective.

Serial Model

We only have one (default) CUDA stream. All the commands were executed in order.

Concurrent Model

We have $N$ (non-default) CUDA streams. All the commands in the same stream were executed in order. Different streams have overlap.

Notes

Kernel Execution Concurrency

Kernel executions on different CUDA streams could run simultaneously, provided that the computation resources are sufficient. The model diagrams presented above showed an idea model where the time spent for memory copy from host to device, kernel execution, and memory copy time from device to host are the same, so the optimal computation resources could be used and the lowest latency could be achieved. The kernel executions on different CUDA streams looks exclusive, but it is not true. In practice, the kernel executions on different CUDA streams could have overlaps. Some good examples could be found from my other post “CUDA Kernel Execution Overlap”.

References

CUDA Stream