Page-Locked Host Memory for Data Transfer

Introduction

Page-locked host memory plays a critical role in the data transfer between host and CUDA device. In this blog post, I would like to discuss page-locked memory, the data transfer model, the motivation for using page-locked memory in the data transfer model, and the optimization of data transfer using page-locked memory.

Prerequisites

Paged Memory

Paged memory, sometimes referred to as memory paging, is a memory management scheme by which a computer stores and retrieves data from secondary storage, which is usually the swap space on the hard drive, for use in main memory. Backing main memory with secondary storage in this way is part of what is called virtual memory. With paged memory, each program has its own logical memory, which is divided into consecutive pages. The program reads and writes data in these pages, which are mapped to physical memory via a page table. So a program’s memory might be contiguous in its own logical memory but scattered across physical memory.

Paged memory utilizes the main memory better than segmented memory, sometimes referred to as memory segmentation. So in most operating systems, user programs use paged memory. However, even though paged memory makes better use of the main memory, the size of the main memory is still limited. When the main memory does not have enough free space and more data needs to be written to it, some pages will be moved to the hard drive. This process is called page out. Conversely, the process of moving pages from the hard drive back to the main memory is called page in.

A more comprehensive introduction to segmented memory, paged memory, and virtual memory can be found on YouTube.

Pageable Memory and Page-Locked Memory

With paged memory, memory that is allowed to be paged out and paged in is called pageable memory. Conversely, memory that is not allowed to be paged out is called page-locked memory or pinned memory.

Page-locked memory is never moved to the hard drive, so it always stays resident in physical memory. Therefore, reads and writes to page-locked memory never have to wait for a page to be brought back from the hard drive, and their performance is more predictable.

Data Transfer Between Host and CUDA Device

Data Transfer Model

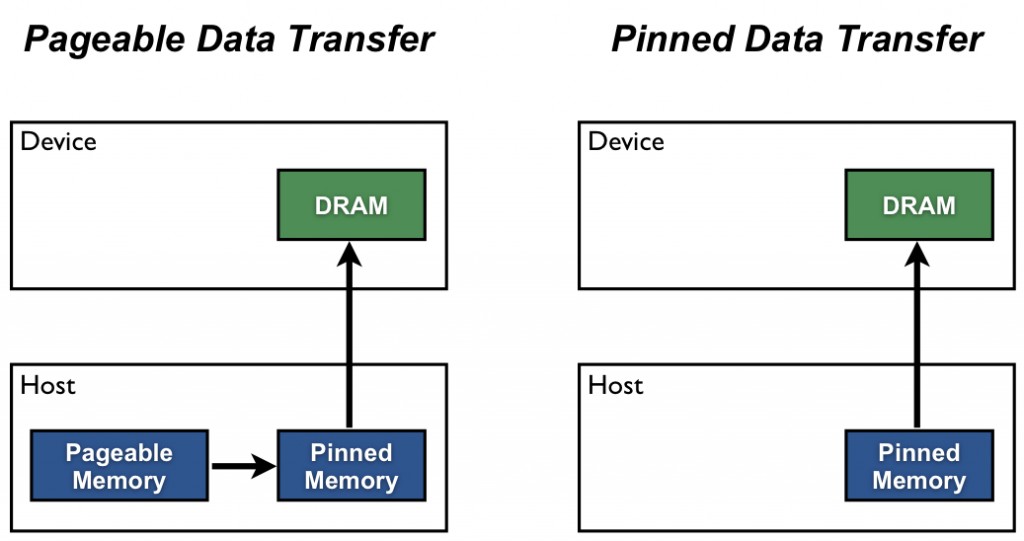

The data transfer between host and CUDA device requires page-locked memory on the host. If the data is in pageable memory on the host, then during a transfer from host to device the data is first implicitly copied from the pageable host memory to a temporary page-locked host memory, and only then transferred from the page-locked host memory to device memory.

This extra copy introduces additional data transfer overhead and might affect the program performance significantly in some scenarios.
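To make the two steps concrete, here is a minimal sketch that performs the staging by hand. It is not how the CUDA driver is actually implemented; it only mimics the model described above. The helper name `copy_via_staging` and its parameters are my own assumptions for illustration, and the caller is assumed to have allocated `device_dst` with `cudaMalloc`.

```cuda
#include <cstddef>
#include <cstring>
#include <cuda_runtime.h>

// Hypothetical helper (not part of CUDA): copies n floats from pageable host
// memory to the device through an explicit page-locked staging buffer.
// Returns the first CUDA error encountered, or cudaSuccess.
cudaError_t copy_via_staging(const float* pageable_src, float* device_dst,
                             std::size_t n)
{
    const std::size_t bytes = n * sizeof(float);
    float* staging = nullptr;

    // Allocate the temporary page-locked host buffer.
    cudaError_t err = cudaMallocHost(reinterpret_cast<void**>(&staging), bytes);
    if (err != cudaSuccess) return err;

    // Step 1: pageable host memory -> page-locked host memory (plain CPU copy).
    std::memcpy(staging, pageable_src, bytes);

    // Step 2: page-locked host memory -> device memory.
    err = cudaMemcpy(device_dst, staging, bytes, cudaMemcpyHostToDevice);

    // The staging buffer is released right after the transfer.
    const cudaError_t free_err = cudaFreeHost(staging);
    return (err != cudaSuccess) ? err : free_err;
}
```

The `std::memcpy` in step 1 is the overhead discussed above: it is extra work that would not be needed if the data already lived in page-locked memory.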

Motivation

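So the question is why the data transfer between host and CUDA device requires page-locked memory on the host. The device accesses host memory through direct memory access (DMA), which bypasses the CPU and works on physical addresses, so the pages involved must stay resident in physical memory for the whole transfer. Beyond that requirement, I think the major motivation is the guarantee of data transfer efficiency: a page-locked buffer never has to be paged in from the hard drive before or during a copy.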

Optimization

Since the data transfer between host and CUDA device has to use page-locked memory anyway, to optimize the data transfer, instead of allocating pageable memory for storing data, we could allocate page-locked memory directly for storing data.
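As a rough sketch of this idea, the data can be produced directly in a buffer obtained from `cudaMallocHost` and copied to the device from there. The helper name `upload_from_pinned` and the fill value are assumptions made for illustration, and `device_dst` is assumed to come from `cudaMalloc`.

```cuda
#include <cstddef>
#include <cuda_runtime.h>

// Hypothetical helper (not part of CUDA): fills a page-locked host buffer with
// `value` and copies it straight to the device, with no pageable intermediate.
cudaError_t upload_from_pinned(float* device_dst, std::size_t n, float value)
{
    const std::size_t bytes = n * sizeof(float);
    float* pinned = nullptr;

    // cudaMallocHost returns page-locked host memory instead of pageable memory.
    cudaError_t err = cudaMallocHost(reinterpret_cast<void**>(&pinned), bytes);
    if (err != cudaSuccess) return err;

    // The application writes its data into the pinned buffer directly.
    for (std::size_t i = 0; i < n; ++i) pinned[i] = value;

    err = cudaMemcpy(device_dst, pinned, bytes, cudaMemcpyHostToDevice);

    // Pinned memory must be released with cudaFreeHost, not free().
    const cudaError_t free_err = cudaFreeHost(pinned);
    return (err != cudaSuccess) ? err : free_err;
}
```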

One caveat is that manually using too much page-locked memory might cause operating system performance problems. Using page-locked memory manually means that the user is responsible for allocating and freeing the page-locked memory. If the page-locked memory is not freed promptly by the user, there will be less physical memory available for new data and new applications, and the operating system might become unstable because of this. If the page-locked memory is only used temporarily during the data transfer between pageable memory and CUDA memory, it will always be freed in a timely fashion, at the cost of additional data transfer overhead between pageable memory and page-locked memory.

So we are encouraged to use page-locked memory, but we should not abuse it. Typically, if we know the data will be transferred multiple times between host and CUDA device, it might be a good idea to put the data in page-locked memory to avoid the unnecessary overhead.
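One way to follow this advice for a buffer that already exists in pageable memory is to pin it only for the period in which it is transferred repeatedly. The sketch below uses `cudaHostRegister` and `cudaHostUnregister` for that; the helper name `upload_repeatedly` and the `repeats` parameter are hypothetical and only serve the illustration.

```cuda
#include <cstddef>
#include <cuda_runtime.h>

// Hypothetical helper (not part of CUDA): pins an existing host buffer once,
// copies it to the device `repeats` times, then unpins it so that the pages
// become pageable again.
cudaError_t upload_repeatedly(float* host_src, float* device_dst,
                              std::size_t n, int repeats)
{
    const std::size_t bytes = n * sizeof(float);

    // Page-lock the existing allocation in place.
    cudaError_t err = cudaHostRegister(host_src, bytes, cudaHostRegisterDefault);
    if (err != cudaSuccess) return err;

    // Every copy now starts from page-locked memory, so no staging copy is needed.
    for (int i = 0; i < repeats && err == cudaSuccess; ++i) {
        err = cudaMemcpy(device_dst, host_src, bytes, cudaMemcpyHostToDevice);
    }

    // Release the page lock as soon as the transfers are done.
    const cudaError_t unpin_err = cudaHostUnregister(host_src);
    return (err != cudaSuccess) ? err : unpin_err;
}
```

Pinning and unpinning have a cost of their own, so this pattern is only likely to pay off when the same buffer is transferred several times.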

Using Page-Locked Memory in CUDA

Mark Harris has an excellent blog post on optimizing data transfers in CUDA C/C++, which covers the topic of using page-locked memory in CUDA programs.

I tested Mark’s script on my NVIDIA RTX 2080 Ti.

$ wget https://raw.githubusercontent.com/NVIDIA-developer-blog/code-samples/master/series/cuda-cpp/optimize-data-transfers/bandwidthtest.cu

Similar to what Mark found in his blog post, the data transfer using pageable memory is not much slower than the data transfer using page-locked memory on my machine, presumably because my Intel i9-9900K CPU copies the data from the pageable memory to the temporary page-locked memory very quickly.
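For readers who want to repeat the comparison without the full script, the following is a much smaller sketch of the same kind of measurement. It is not Mark's `bandwidthtest.cu`; the helper names `time_h2d_ms` and `compare_transfers` are my own assumptions, and the numbers it produces will depend on the machine.

```cuda
#include <cstddef>
#include <cstdlib>
#include <cstring>
#include <cuda_runtime.h>

// Hypothetical helper: returns the elapsed milliseconds of one host-to-device
// copy, or a negative value if a CUDA call fails.
float time_h2d_ms(void* device_dst, const void* host_src, std::size_t bytes)
{
    cudaEvent_t start, stop;
    if (cudaEventCreate(&start) != cudaSuccess) return -1.0f;
    if (cudaEventCreate(&stop) != cudaSuccess) {
        cudaEventDestroy(start);
        return -1.0f;
    }

    float ms = -1.0f;
    cudaEventRecord(start);
    const cudaError_t err =
        cudaMemcpy(device_dst, host_src, bytes, cudaMemcpyHostToDevice);
    cudaEventRecord(stop);
    if (err == cudaSuccess && cudaEventSynchronize(stop) == cudaSuccess) {
        cudaEventElapsedTime(&ms, start, stop);
    }
    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    return ms;
}

// Hypothetical helper: times the same transfer from pageable and from pinned
// host memory. Returns false if an allocation or a copy fails.
bool compare_transfers(std::size_t bytes, float* pageable_ms, float* pinned_ms)
{
    void* pageable = std::malloc(bytes);
    void* pinned = nullptr;
    void* device = nullptr;
    bool ok = pageable != nullptr
           && cudaMallocHost(&pinned, bytes) == cudaSuccess
           && cudaMalloc(&device, bytes) == cudaSuccess;
    if (ok) {
        std::memset(pageable, 1, bytes);
        std::memset(pinned, 1, bytes);
        time_h2d_ms(device, pinned, bytes);  // untimed warm-up copy
        *pageable_ms = time_h2d_ms(device, pageable, bytes);
        *pinned_ms = time_h2d_ms(device, pinned, bytes);
        ok = *pageable_ms >= 0.0f && *pinned_ms >= 0.0f;
    }
    if (device != nullptr) cudaFree(device);
    if (pinned != nullptr) cudaFreeHost(pinned);
    std::free(pageable);
    return ok;
}
```

Given a time `ms` for `bytes` bytes, the bandwidth in GB/s is `bytes * 1e-6 / ms`. A single timed copy is noisy, so averaging over several runs would give a more trustworthy comparison.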

Miscellaneous

PyTorch supports memory pinning for data buffers, for example through `Tensor.pin_memory()`, and the `DataLoader` can place the batches it returns in pinned memory when it is created with `pin_memory=True`.

References

Page-Locked Host Memory for Data Transfer

https://leimao.github.io/blog/Page-Locked-Host-Memory-Data-Transfer/