Nsight Systems In Docker

Introduction

NVIDIA Nsight Systems is a low-overhead performance analysis tool designed to provide the insights developers need to optimize their software. Unbiased activity data is visualized within the tool to help users investigate bottlenecks, avoid inferring false positives, and pursue optimizations with a higher probability of performance gains.

In this blog post, I would like to discuss how to install and use Nsight Systems in a Docker container so that we can use it anywhere Docker is installed.

Nsight Systems

Build Docker Image

It is possible to install Nsight Systems inside a Docker image and use it anywhere. The Dockerfile for building the Nsight Systems image is as follows.

FROM nvcr.io/nvidia/cuda:12.0.1-devel-ubuntu22.04

To build the Docker image, please run the following command.

$ docker build -f nsight-systems.Dockerfile --no-cache --tag nsight-systems:2023.4 .

Upload Docker Image

To upload the Docker image, please run the following command.

$ docker tag nsight-systems:2023.4 leimao/nsight-systems:2023.4

Pull Docker Image

To pull the Docker image, please run the following command.

$ docker pull leimao/nsight-systems:2023.4

Run Docker Container

To run the Docker container, please run the following command.

$ xhost +
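The `xhost +` step above relaxes X server access control so that a GUI started inside the container can draw on the host display. The container launch itself is not reproduced here, so what follows is only a sketch of a typical invocation, not the exact command used in this post. The flag set, the `HOST_DIR` variable, and the `/mnt` mount point are assumptions for illustration. The script prints the command by default and runs it only when `RUN=1` is set.

```shell
#!/bin/sh
# Sketch only: the flag set below is an assumption, not this post's exact command.
set -eu

# Dry-run by default: print each command; set RUN=1 to actually execute it.
if [ "${RUN:-0}" = "1" ]; then run() { "$@"; }; else run() { echo "$@"; }; fi

IMAGE="${IMAGE:-nsight-systems:2023.4}"  # image tag built earlier in this post
HOST_DIR="${HOST_DIR:-$PWD}"             # hypothetical host directory to share

start_container() {
    # --gpus all           : expose the GPUs (needs the NVIDIA Container Toolkit)
    # DISPLAY + X11 socket : let nsys-ui draw on the host X server
    # SYS_ADMIN / seccomp  : relax restrictions that can block CPU sampling
    # -v HOST_DIR:/mnt     : hypothetical mount so host binaries are visible
    run docker run -it --rm \
        --gpus all \
        --network host \
        -e "DISPLAY=${DISPLAY:-:0}" \
        -v /tmp/.X11-unix:/tmp/.X11-unix \
        -v "${HOST_DIR}:/mnt" \
        --cap-add=SYS_ADMIN \
        --security-opt seccomp=unconfined \
        "${IMAGE}"
}

start_container
```

The privilege-related flags trade isolation for profiling capability, so it may be worth dropping them if only CUDA tracing is needed.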

Run Nsight Systems

To run Nsight Systems with GUI, please run the following command.

$ nsys-ui

We can now profile applications inside the Docker container, applications on the Docker host machine via a Docker mount, and applications on a remote host such as a remote workstation or an embedded device.
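Besides the GUI, Nsight Systems ships the `nsys` command line tool, which can capture a report inside the container for later inspection in `nsys-ui`. The following is a minimal sketch of such a capture. The report name `my_report` and the executable `./my_app` are hypothetical placeholders, and the chosen trace set is just one reasonable option. As a safety measure the script only prints the command unless `RUN=1` is set.

```shell
#!/bin/sh
# Sketch: capture a timeline with the nsys CLI. Names below are placeholders.
set -eu

# Dry-run by default: print each command; set RUN=1 to actually execute it.
if [ "${RUN:-0}" = "1" ]; then run() { "$@"; }; else run() { echo "$@"; }; fi

# profile_app <report-name> <executable> [args...]
profile_app() {
    report="$1"
    shift
    # --trace           : record CUDA API calls, NVTX ranges and OS runtime calls
    # --force-overwrite : replace an existing report with the same name
    # --output          : base name of the generated report file
    run nsys profile \
        --trace=cuda,nvtx,osrt \
        --force-overwrite true \
        --output "${report}" \
        "$@"
}

# Hypothetical application living in the mounted directory.
profile_app my_report ./my_app
```

With `RUN=1`, this should leave a report file next to the application that can then be opened from the `nsys-ui` file menu.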

Examples

Pageable Memory VS Page-Locked Memory

To overlap data transfer and kernel execution using CUDA streams, we have to use page-locked (pinned) host memory. Otherwise, with pageable host memory, data transfer and kernel execution will not overlap.

I prepared two examples that try to use CUDA streams to overlap data transfer and kernel execution. One uses page-locked host memory and the other uses pageable host memory. The two examples are available on GitHub.

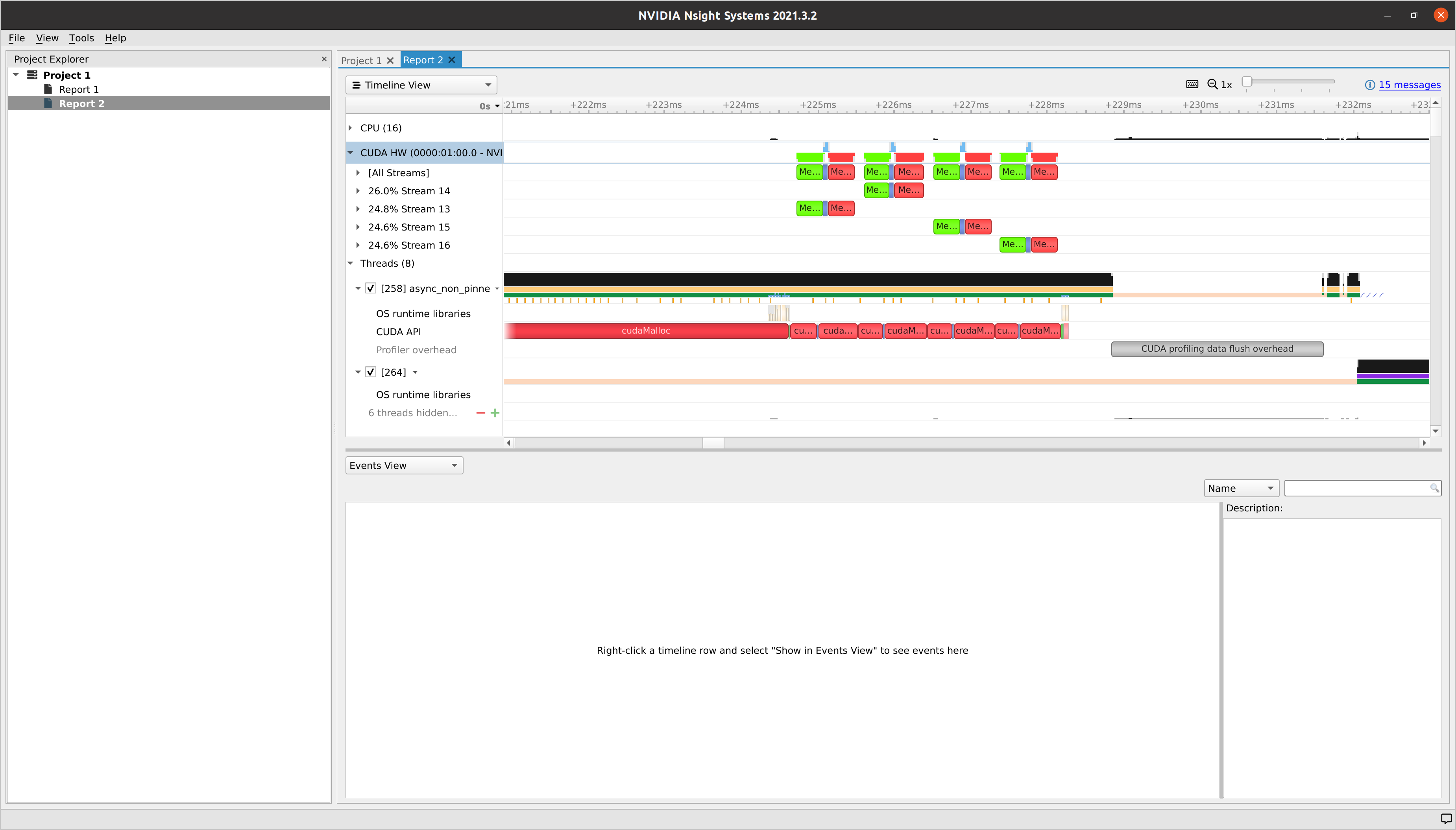

Profiling the two implementations with Nsight Systems, we can clearly see that there is no overlap between data transfer and kernel execution in the implementation that does not use page-locked memory. A timeline like this tells us that we either made a mistake or missed an optimization opportunity.

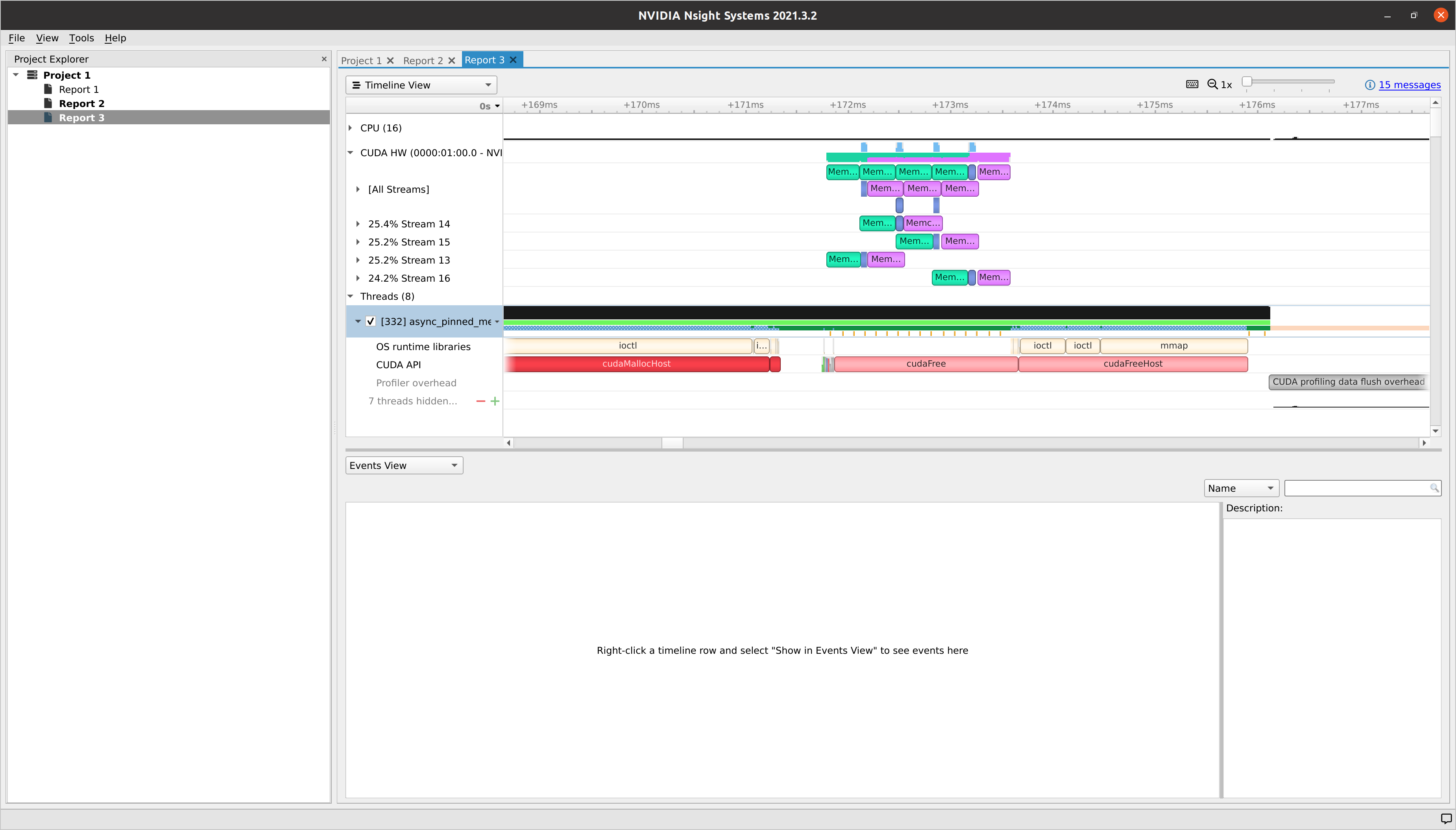

By switching to page-locked memory, we can see that data transfer and kernel execution overlap.
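The same comparison can also be made without the GUI by dumping the GPU activity trace of each report as text. The sketch below assumes that one report per example has already been captured with `nsys profile`. The file names `pageable.nsys-rep` and `pinned.nsys-rep` are hypothetical, and the `cuda_gpu_trace` report name is assumed to be available in the installed `nsys` version (`nsys stats --help` shows what is supported). The commands are printed rather than executed unless `RUN=1` is set.

```shell
#!/bin/sh
# Sketch: dump per-operation GPU activity so copies and kernels can be compared.
set -eu

# Dry-run by default: print each command; set RUN=1 to actually execute it.
if [ "${RUN:-0}" = "1" ]; then run() { "$@"; }; else run() { echo "$@"; }; fi

# gpu_trace <report-file>: list GPU memory copies and kernels as CSV rows.
gpu_trace() {
    run nsys stats --report cuda_gpu_trace --format csv "$1"
}

# Hypothetical report files, one per example.
gpu_trace pageable.nsys-rep
gpu_trace pinned.nsys-rep
```

In the pinned-memory trace we would expect to find a memory copy whose time interval intersects that of a kernel running in another stream, while in the pageable-memory trace the intervals should follow one another.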

GitHub

All the Dockerfiles and examples are available on GitHub.

Miscellaneous

NVIDIA Nsight Compute is an interactive, specialized kernel profiler for CUDA applications. So for optimizing CUDA kernel implementations, we should use Nsight Compute instead of Nsight Systems. Nsight Compute can be installed and used in a Docker container in the same way as Nsight Systems.
